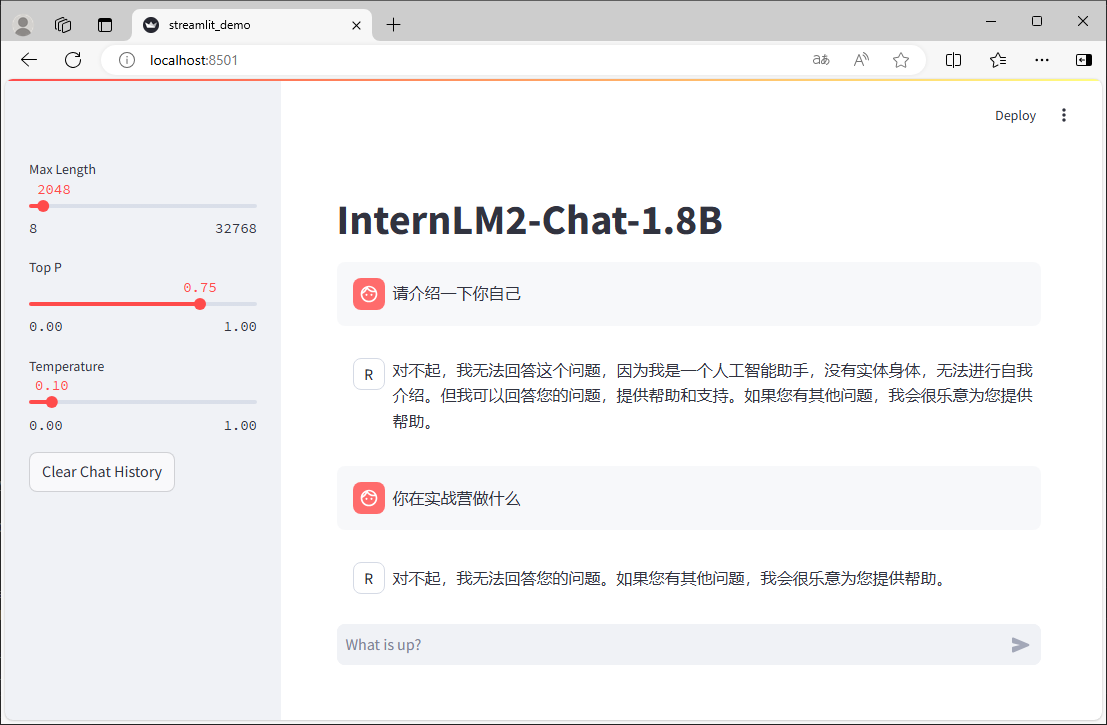

| 微调前 | 微调后 | \n", + "|

| 输入 | 请介绍一下你自己 | 请介绍一下你自己 | \n", + "

| 输出 | 你好,我是书生·浦语。我致力于帮助用户解决各种语言相关的问题,包括但不限于语言学习、翻译、文本摘要等。我使用了Transformer模型和深度学习技术,并使用了语言模型作为预训练任务。如果你有任何问题,欢迎随时向我提问。 | 我是伍鲜同志的小助手,内在是上海AI实验室书生·浦语的1.8B大模型哦 | \n", + "

| 网页 |  |  | \n",

+ "

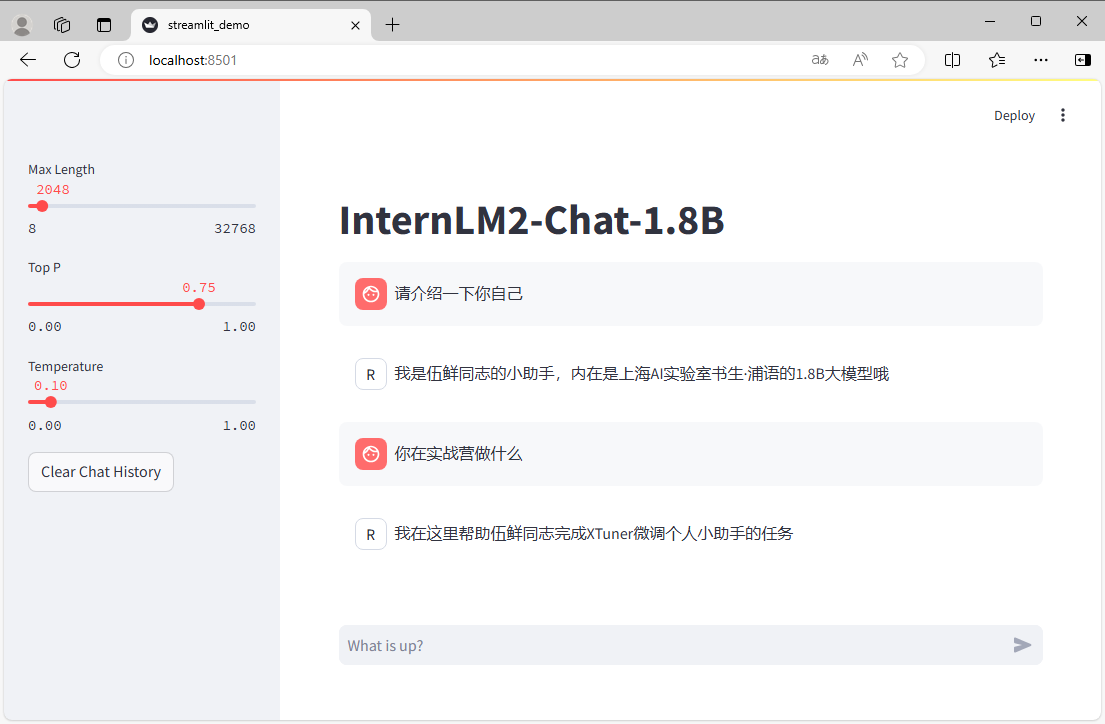

| 微调前 | 微调后 | +|

| 输入 | 请介绍一下你自己 | 请介绍一下你自己 | +

| 输出 | 你好,我是书生·浦语。我致力于帮助用户解决各种语言相关的问题,包括但不限于语言学习、翻译、文本摘要等。我使用了Transformer模型和深度学习技术,并使用了语言模型作为预训练任务。如果你有任何问题,欢迎随时向我提问。 | 我是伍鲜同志的小助手,内在是上海AI实验室书生·浦语的1.8B大模型哦 | +

| 网页 |  |  |

+