- Kubernetes, also known as K8s, is an open-source system for automating the deployment, scaling, and management of containerized applications.

- Kubernetes is a battle-tested container orchestration system, i.e. it creates and manages containers (through Pods running on worker nodes).

Kubernetes is a Greek word meaning "captain" in English.

- Just as a captain is responsible for the ship's safe journey at sea, Kubernetes is responsible for carrying those boxes (containers) and delivering them safely to the locations where they can be used.

| Use Case |
|---|
| Zomato |
| Swiggy |
| Spotify |
| Grab |
| Split.io |
| Stripe |

| Resource | Limit |
|---|---|
| Max Pods Per Node | 110 pods per node |
| Max Pods Per Cluster | 150,000 pods per cluster |
| Max Nodes Per Cluster | 5,000 nodes per cluster |
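The limits above can be turned into a quick sanity check for a planned cluster size. This is only a sketch: the function name `limit_violations` and its parameters are hypothetical, and the constants are simply the numbers from the table.

```python
from __future__ import annotations

# Upper bounds copied from the table above.
MAX_PODS_PER_NODE = 110
MAX_PODS_PER_CLUSTER = 150_000
MAX_NODES_PER_CLUSTER = 5_000


def limit_violations(nodes: int, pods_per_node: int) -> list[str]:
    """Return human-readable violations; an empty list means the plan fits."""
    problems = []
    if nodes > MAX_NODES_PER_CLUSTER:
        problems.append(f"{nodes} nodes exceeds {MAX_NODES_PER_CLUSTER} nodes per cluster")
    if pods_per_node > MAX_PODS_PER_NODE:
        problems.append(f"{pods_per_node} pods per node exceeds {MAX_PODS_PER_NODE}")
    total_pods = nodes * pods_per_node
    if total_pods > MAX_PODS_PER_CLUSTER:
        problems.append(f"{total_pods} pods exceeds {MAX_PODS_PER_CLUSTER} pods per cluster")
    return problems


if __name__ == "__main__":
    # 1,000 nodes x 100 pods = 100,000 pods: within every limit.
    print(limit_violations(nodes=1_000, pods_per_node=100))
    # 5,000 nodes x 110 pods = 550,000 pods: the per-cluster pod limit is hit first.
    print(limit_violations(nodes=5_000, pods_per_node=110))
```

Note that filling every node to its per-node maximum at the maximum node count would exceed the per-cluster pod limit, so the three limits have to be checked together.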

| Component | Description |
|---|---|
| Control Plane (Master node) | The control plane manages the worker nodes and the Pods in the cluster. In production environments, the control plane usually runs across multiple computers and a cluster usually runs multiple nodes, providing fault tolerance and high availability. Nodes with the controlplane role run the K8s master components (excluding etcd, as it is a separate role). |
| Worker Nodes | Worker nodes run the Pods, and each Pod's container runs a microservice (Go, Java, Python service, etc.). A worker node can host one or multiple Pods. Kubernetes manages what runs on the worker nodes (create, update, delete, auto-scale) based on the configuration and parameters. |
| Pods | Pods are the smallest deployable units of computing that you can create and manage in Kubernetes. A Pod (as in a pod of whales or pea pod) is a group of one or more containers, with shared storage and network resources, and a specification for how to run the containers. A Pod can contain one or multiple containers (but generally one container). Minimum and maximum memory constraints can be configured per namespace (with a LimitRange) and then apply to the Pods created in it. |
| Networking | K8s provides its own load balancing and service discovery; Service definitions, like all cluster state, are stored in etcd. |
| Cluster | A Kubernetes cluster consists of a set of worker machines, called nodes, that run containerized applications. Every cluster has at least one worker node. |
| Agents | Kubernetes agents perform various tasks on every node to manage the containers running on that node. For example, cAdvisor collects and analyzes the resource usage of all containers on a node, and kubelet runs regular liveness and readiness probes against each container on a node. |
| Labels | Labels are key/value pairs that are attached to objects, such as Pods. Labels are intended to be used to specify identifying attributes of objects that are meaningful and relevant to users, but do not directly imply semantics to the core system. |
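To make the Labels row more concrete, here is a minimal sketch of equality-based label selection: an object is selected when every key/value pair in the selector also appears in its labels. The pod names, labels, and helper names (`selector_matches`, `select`) are made up for illustration and are not part of any Kubernetes client library.

```python
from __future__ import annotations


def selector_matches(selector: dict[str, str], labels: dict[str, str]) -> bool:
    """True when every key/value pair of the selector is present in the labels."""
    return all(labels.get(key) == value for key, value in selector.items())


def select(objects: list[dict], selector: dict[str, str]) -> list[str]:
    """Names of the objects whose labels satisfy the selector."""
    return [
        obj["name"]
        for obj in objects
        if selector_matches(selector, obj.get("labels", {}))
    ]


# Hypothetical pods, each carrying user-defined labels.
PODS = [
    {"name": "cart-7d9f", "labels": {"app": "cart", "env": "prod"}},
    {"name": "cart-canary", "labels": {"app": "cart", "env": "staging"}},
    {"name": "search-1a2b", "labels": {"app": "search", "env": "prod"}},
]

if __name__ == "__main__":
    print(select(PODS, {"app": "cart"}))                 # both cart pods
    print(select(PODS, {"app": "cart", "env": "prod"}))  # only the prod cart pod
```

Extra labels on an object never prevent a match; only the pairs named in the selector are compared.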

| K8s Master Components | Description |

|---|---|

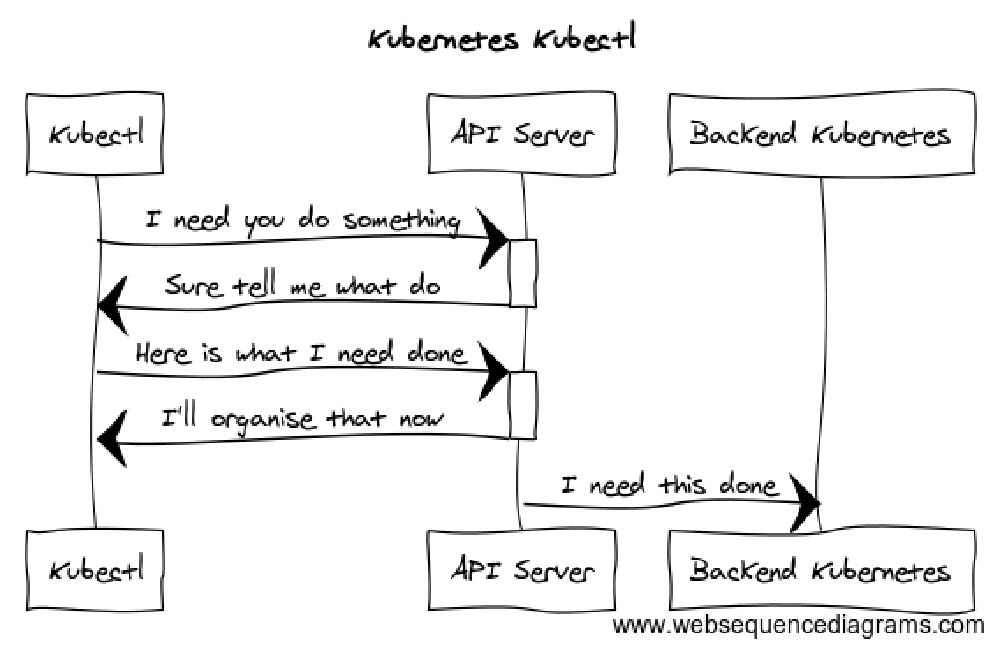

| API server | The Kubernetes API server validates and configures data for the api objects which include pods, services, replication controllers, and others. - The API Server services REST operations and provides the frontend to the cluster's shared state through which all other components interact. |

| etcd | etcd is used for Configuration Store & Service Discovery. - etcd is a Consistent and highly-available key value store used as Kubernetes backing store for all cluster data. |

| Controller manager | The K8s Controller Manager is a daemon that embeds the core control loops (like ReplicationController, DeploymentController etc.) shipped with Kubernetes. |

| Scheduler | A Scheduler is responsible for scheduling pods on the cluster. - It watches for newly created Pods that have no Node assigned. - For every Pod that the scheduler discovers, the scheduler becomes responsible for finding the best Node for that Pod to run on. |

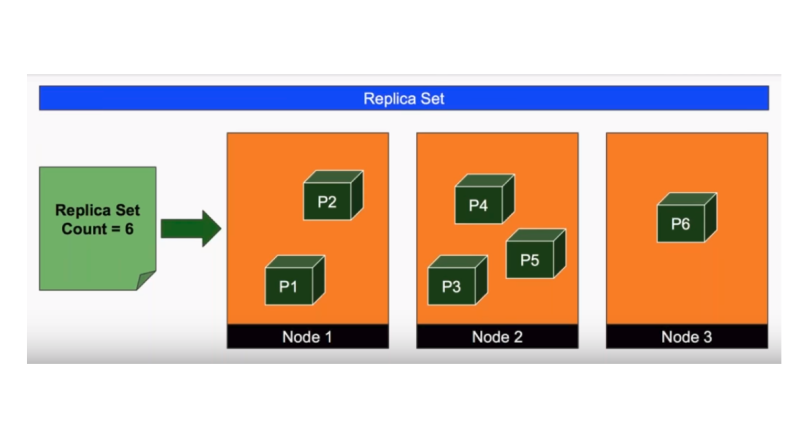

| ReplicationController | A ReplicationController ensures that a specified number of pod replicas are running at any one time. In other words, a ReplicationController makes sure that a pod or a homogeneous set of pods is always up and available. |

| Deployment Controller | Deployment Controller manages the rolling update and rollback of deployments (docker containers etc.) |
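The Scheduler row above describes finding the best Node for each unassigned Pod. A rough sketch of that idea is a two-step pass: filter out nodes that cannot fit the Pod's resource requests, then score the remaining ones. The scoring rule used here (prefer the node with the most CPU left over) is an assumption chosen for illustration, and the `Node`, `PodRequest`, and `pick_node` names are hypothetical.

```python
from __future__ import annotations

from dataclasses import dataclass
from typing import Optional


@dataclass
class Node:
    name: str
    free_cpu_millicores: int
    free_memory_mib: int


@dataclass
class PodRequest:
    name: str
    cpu_millicores: int
    memory_mib: int


def pick_node(pod: PodRequest, nodes: list[Node]) -> Optional[Node]:
    """Return the chosen node, or None when the pod fits nowhere."""
    # Step 1 (filtering): keep only nodes with enough free CPU and memory.
    feasible = [
        node
        for node in nodes
        if node.free_cpu_millicores >= pod.cpu_millicores
        and node.free_memory_mib >= pod.memory_mib
    ]
    if not feasible:
        return None  # the pod would stay unscheduled (Pending)
    # Step 2 (scoring): illustrative rule only, most CPU left after placement,
    # with leftover memory as the tie-breaker.
    return max(
        feasible,
        key=lambda node: (
            node.free_cpu_millicores - pod.cpu_millicores,
            node.free_memory_mib - pod.memory_mib,
        ),
    )


if __name__ == "__main__":
    nodes = [
        Node("node-a", free_cpu_millicores=500, free_memory_mib=1024),
        Node("node-b", free_cpu_millicores=2000, free_memory_mib=4096),
        Node("node-c", free_cpu_millicores=1500, free_memory_mib=8192),
    ]
    chosen = pick_node(PodRequest("api", cpu_millicores=1000, memory_mib=2048), nodes)
    print(chosen.name if chosen else "unschedulable")
```

The real scheduler applies many more filters and scoring plugins; the sketch only shows the filter-then-score shape.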

- A Deployment provides declarative updates for Pods and ReplicaSets.

- You describe a desired state in a Deployment, and the Deployment Controller changes the actual state to the desired state at a controlled rate.

- You can define Deployments to create new ReplicaSets, or to remove existing Deployments and adopt all their resources with new Deployments.

- Every microservice or app component can be a Deployment in K8s.

- A ReplicaSet ensures that the number of pods defined in our config file is always running in our cluster.

- It does not matter which worker node they are running on.

- The scheduler places the pods on any node, depending on the free resources.

- If one of our nodes goes down, the pods that were running on it are recreated and scheduled onto other nodes according to resource availability.

- In this way, the ReplicaSet keeps the application running at the scale specified in the config file.

- Example - MicroServices, App Pods etc.
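The ReplicaSet behaviour described in the bullets above is a reconcile loop: compare the desired replica count with what is actually running and create or delete pods to close the gap. A minimal sketch follows; the function names and pod names are hypothetical, and which surplus pods get deleted is an arbitrary choice made for the example.

```python
from __future__ import annotations


def surviving_pods(pods_by_node: dict[str, list[str]], failed_node: str) -> list[str]:
    """Pods still running after one node goes down."""
    return [
        pod
        for node, pods in pods_by_node.items()
        if node != failed_node
        for pod in pods
    ]


def reconcile(desired_replicas: int, running: list[str]) -> tuple[int, list[str]]:
    """Return (number of pods to create, pods to delete) to reach the desired count."""
    diff = desired_replicas - len(running)
    if diff > 0:
        return diff, []            # too few pods: create the missing ones
    if diff < 0:
        return 0, running[diff:]   # too many pods: delete the surplus (arbitrary pick)
    return 0, []                   # already at the desired scale


if __name__ == "__main__":
    placement = {"node-1": ["web-a", "web-b"], "node-2": ["web-c"]}
    running = surviving_pods(placement, failed_node="node-1")
    # Two of the three replicas were lost with node-1, so two must be recreated.
    print(reconcile(desired_replicas=3, running=running))
```

The replacement pods are then handed to the scheduler, which is why they can land on any node with free resources.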

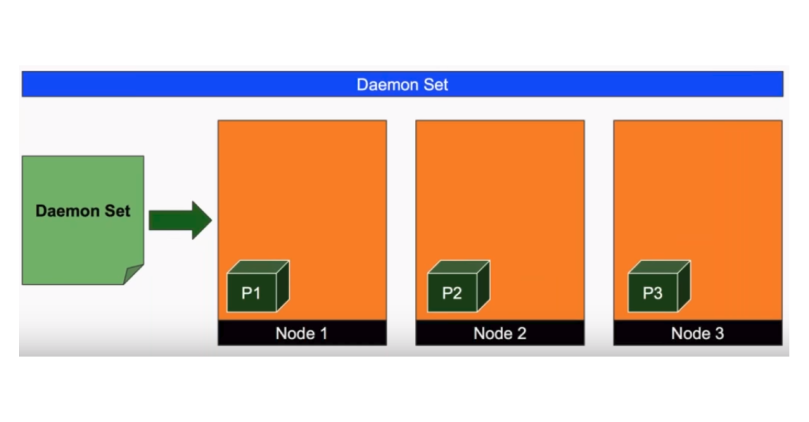

- A DaemonSet, by contrast, ensures that one copy of the pod defined in our configuration is always running on every worker node.

- Example - newrelic-infra, newrelic-logging etc.

- StatefulSets are used when state has to be persisted.

- Therefore, they use volumeClaimTemplates (claims on persistent volumes) so that state is kept across component restarts.

- Example - kube-state-metrics etc.

- In Kubernetes, a Horizontal Pod Autoscaler automatically updates a workload resource (such as a Deployment or StatefulSet), with the aim of automatically scaling the workload to match demand.

- Cluster Autoscaler complements this: it adds or removes nodes (rather than pods) when pods cannot be scheduled or nodes are underused.
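The core of the Horizontal Pod Autoscaler is the documented ratio rule `desiredReplicas = ceil(currentReplicas * currentMetricValue / desiredMetricValue)`, with no action taken when the ratio is within a tolerance of 1.0 (0.1 by default). The sketch below applies that rule and then clamps the result to minimum and maximum replica bounds; the function and parameter names are hypothetical, and stabilization windows and scaling policies are deliberately left out.

```python
from __future__ import annotations

import math
from typing import Optional


def desired_replicas(
    current_replicas: int,
    current_metric: float,
    target_metric: float,
    tolerance: float = 0.1,
    min_replicas: int = 1,
    max_replicas: Optional[int] = None,
) -> int:
    """Replica count suggested by the HPA ratio rule, clamped to [min, max]."""
    ratio = current_metric / target_metric
    if abs(ratio - 1.0) <= tolerance:
        desired = current_replicas  # close enough to the target: do not scale
    else:
        desired = math.ceil(current_replicas * ratio)
    desired = max(desired, min_replicas)
    if max_replicas is not None:
        desired = min(desired, max_replicas)
    return desired


if __name__ == "__main__":
    # 4 replicas at 90% average CPU with a 60% target: scale up.
    print(desired_replicas(4, current_metric=90, target_metric=60))  # 6
    # 63% is within the 10% tolerance of the 60% target: stay at 4.
    print(desired_replicas(4, current_metric=63, target_metric=60))  # 4
    # 30% against a 60% target: scale down.
    print(desired_replicas(4, current_metric=30, target_metric=60))  # 2
```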

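```yaml
# One metric entry of a HorizontalPodAutoscaler (autoscaling/v2):
# scale on the CPU utilization of the container named "application".
type: ContainerResource
containerResource:
  name: cpu
  container: application
  target:
    type: Utilization
    averageUtilization: 60
```

```yaml
# Scaling behavior of a HorizontalPodAutoscaler.
behavior:
  scaleDown:
    # Wait 300 seconds before acting on a lower recommendation.
    stabilizationWindowSeconds: 300
    policies:
    - type: Percent
      value: 100
      periodSeconds: 15
  scaleUp:
    stabilizationWindowSeconds: 0
    policies:
    # Every 15 seconds, allow doubling the pods or adding 4 pods,
    # whichever is larger (selectPolicy: Max).
    - type: Percent
      value: 100
      periodSeconds: 15
    - type: Pods
      value: 4
      periodSeconds: 15
    selectPolicy: Max
```

- kOps offers a one-stop solution for deploying a Kubernetes cluster on Amazon Web Services.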

- It is an open source tool designed to make installation of secure, highly available clusters easy and automatable.

- We require a hosted zone associated with Route 53 which must be publicly resolvable.

```shell
aws route53 create-hosted-zone --name testikod.in --caller-reference 2017-02-24-11:12 --hosted-zone-config Comment="Hosted Zone for KOPS"
```

- kOps can generate Terraform configuration, but it keeps the cluster's specification and state in a store of its own.

- So we need an external state store for the cluster state.

- We are using Amazon S3 as the state store.

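```shell
# Outside us-east-1, create-bucket also needs the LocationConstraint.
aws s3api create-bucket --bucket testikod-in-state-store --region us-west-2 --create-bucket-configuration LocationConstraint=us-west-2
```

- An instance group is a set of instances that will be registered as Kubernetes nodes. On AWS this is implemented via Auto Scaling groups.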

```shell
# Set environment variables for the cluster name and the state store.
export NAME=cluster.testikod.in
export KOPS_STATE_STORE="s3://testikod-in-state-store"

kops create cluster \
  --cloud aws \
  --node-count 5 \
  --node-size t2.medium \
  --master-size m3.medium \
  --zones us-west-2a,us-west-2b,us-west-2c \
  --master-zones us-west-2a,us-west-2b,us-west-2c \
  --dns-zone testikod.in \
  --topology private \
  --networking calico \
  --bastion \
  ${NAME}
```

Parameters:

- --cloud aws : We are launching the cluster in AWS.

- --zones us-west-2a,us-west-2b,us-west-2c : This describes availability zones for nodes.

- --node-count 5 : The number of Kubernetes nodes.

- --node-size t2.medium : Size of kubernetes nodes.

- --dns-zone testikod.in : Hosted zone which we created earlier.

- --master-size m3.medium : Size of a Kubernetes master node.

- --master-zones us-west-2a,us-west-2b,us-west-2c : This tells kops to spread the masters across those availability zones, which gives the cluster high availability.

- --topology private : We define that we want to use a private network topology with kops.

- --networking calico : We tell kops to use Calico for our overlay network. Overlay networks are required for this configuration.

- --bastion : Add this flag to tell kops to create a bastion server, so you can SSH into the cluster.


- kubectl is the command-line tool for communicating with the cluster's API server.

| Title | Command | Remarks |
|---|---|---|
| View config | `kubectl config view` | |
| Set context in config | `kubectl config use-context <context_name>` | |
| Get all contexts | `kubectl config get-contexts` | |
| Get all the events of the cluster | `kubectl get events --sort-by=.metadata.creationTimestamp` | List events sorted by timestamp |
| Get all the deployments list | `kubectl get deployments` | |
| Scale the deployment | `kubectl scale --replicas=10 deployment/<deployment_name>` | Run 10 replicas of the deployment (microservice) across the worker nodes |
| Auto scale the deployment | `kubectl autoscale deployment foo --min=2 --max=10` | Auto scale a deployment "foo" between 2 and 10 replicas |
| Get all pods | `kubectl get pods --all-namespaces` | List all pods in all namespaces, in the current context |
| Get pod information | `kubectl get pod my-pod -o yaml` | Get a pod's YAML |
| Create resource | `kubectl apply -f ./my-manifest.yaml` | Create or update resources (pods etc.) from a YAML file. apply manages applications through files that define Kubernetes resources. |
| Update resource | `kubectl patch` | Use kubectl patch to update an API object in place. |
| Dump pod logs | `kubectl logs my-pod` | Dump pod logs (stdout) |

- Self-healing

- Automated Rollbacks

- Horizontal Scaling

- It helps us deploy and manage applications in a consistent and reliable way, regardless of the underlying architecture.

- Complex to set up and operate

- High cost of running even the minimum resources that K8s requires

- You can use kubectl drain to safely evict all of your pods from a node before you perform maintenance on the node (e.g. kernel upgrade, hardware maintenance, etc.)

- Safe evictions allow the pod's containers to gracefully terminate and will respect the PodDisruptionBudgets you have specified.
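How a PodDisruptionBudget limits a drain can be sketched as follows, assuming a budget expressed as `minAvailable`: an eviction is allowed only while the number of healthy pods stays at or above that minimum afterwards. The function names are hypothetical, and the sketch assumes replacement pods have not become ready yet (in practice the drain keeps retrying blocked evictions until they do).

```python
from __future__ import annotations


def disruptions_allowed(healthy_pods: int, min_available: int) -> int:
    """How many pods may be evicted right now without violating the budget."""
    return max(0, healthy_pods - min_available)


def evict_from_node(
    pods_on_node: list[str], healthy_pods: int, min_available: int
) -> tuple[list[str], list[str]]:
    """Return (evicted, blocked) pods for one pass over the node being drained."""
    evicted: list[str] = []
    blocked: list[str] = []
    for pod in pods_on_node:
        if disruptions_allowed(healthy_pods, min_available) > 0:
            evicted.append(pod)
            healthy_pods -= 1  # the evicted pod no longer counts as healthy
        else:
            blocked.append(pod)  # would drop below minAvailable: refuse for now
    return evicted, blocked


if __name__ == "__main__":
    # 3 healthy replicas in total, 2 of them on the node being drained,
    # and the budget requires at least 2 to stay available.
    print(evict_from_node(["api-1", "api-2"], healthy_pods=3, min_available=2))
```

With these numbers only one pod can leave the node immediately; the second eviction succeeds once a replacement is running elsewhere.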

| Tool | Description |
|---|---|
| Kubeadm | Kubeadm is a tool used to build Kubernetes (K8s) clusters. Kubeadm performs the actions necessary to get a minimum viable cluster up and running quickly. |
| Helm | Helm is a package manager for Kubernetes: a way to find, share, and use software built for Kubernetes. |
| K9s | K9s is a terminal-based UI to interact with your Kubernetes clusters. K9s continually watches Kubernetes for changes and offers subsequent commands to interact with your observed resources. |
| Knative | Knative is an open-source, enterprise-level solution for building serverless and event-driven applications in a K8s environment. |
| Kustomize | Kustomize introduces a template-free way to customize application configuration that simplifies the use of off-the-shelf applications. |
| Rancher | Rancher lets you deliver Kubernetes-as-a-Service. |
| OpenFaaS | OpenFaaS makes it simple to deploy both functions and existing code to Kubernetes. |
| Argo CD | Argo CD is a declarative, GitOps continuous delivery tool for Kubernetes. |
| Flagger (Canary Deployment) | Flagger takes a Kubernetes deployment and optionally a horizontal pod autoscaler (HPA), then creates a series of objects (Kubernetes deployments, ClusterIP services and canary ingress). These objects expose the application outside the cluster and drive the canary analysis and promotion. |
| Karpenter | Karpenter manages just-in-time nodes for any Kubernetes cluster. |
| Kyverno | Kyverno is a policy engine designed for Kubernetes. |
