Visual question answering

- -

Transformers acts as the model-definition framework for state-of-the-art machine learning models in text, computer

vision, audio, video, and multimodal models, for both inference and training.

@@ -35,6 +34,10 @@ There are over 1M+ Transformers [model checkpoints](https://huggingface.co/model

Explore the [Hub](https://huggingface.com/) today to find a model and use Transformers to help you get started right away.

+Explore the [Models Timeline](./models_timeline) to discover the latest text, vision, audio and multimodal model architectures in Transformers.

+

+

+

## Features

Transformers provides everything you need for inference or training with state-of-the-art pretrained models. Some of the main features include:

@@ -61,4 +64,4 @@ Transformers is designed for developers and machine learning engineers and resea

## Learn

-If you're new to Transformers or want to learn more about transformer models, we recommend starting with the [LLM course](https://huggingface.co/learn/llm-course/chapter1/1?fw=pt). This comprehensive course covers everything from the fundamentals of how transformer models work to practical applications across various tasks. You'll learn the complete workflow, from curating high-quality datasets to fine-tuning large language models and implementing reasoning capabilities. The course contains both theoretical and hands-on exercises to build a solid foundational knowledge of transformer models as you learn.

\ No newline at end of file

+If you're new to Transformers or want to learn more about transformer models, we recommend starting with the [LLM course](https://huggingface.co/learn/llm-course/chapter1/1?fw=pt). This comprehensive course covers everything from the fundamentals of how transformer models work to practical applications across various tasks. You'll learn the complete workflow, from curating high-quality datasets to fine-tuning large language models and implementing reasoning capabilities. The course contains both theoretical and hands-on exercises to build a solid foundational knowledge of transformer models as you learn.

diff --git a/docs/source/en/internal/file_utils.md b/docs/source/en/internal/file_utils.md

index 31fbc5b88110..63db5756a622 100644

--- a/docs/source/en/internal/file_utils.md

+++ b/docs/source/en/internal/file_utils.md

@@ -20,7 +20,6 @@ This page lists all of Transformers general utility functions that are found in

Most of those are only useful if you are studying the general code in the library.

-

## Enums and namedtuples

[[autodoc]] utils.ExplicitEnum

diff --git a/docs/source/en/internal/generation_utils.md b/docs/source/en/internal/generation_utils.md

index d47eba82d8cc..87b0111ff053 100644

--- a/docs/source/en/internal/generation_utils.md

+++ b/docs/source/en/internal/generation_utils.md

@@ -65,7 +65,6 @@ values. Here, for instance, it has two keys that are `sequences` and `scores`.

We document here all output types.

-

[[autodoc]] generation.GenerateDecoderOnlyOutput

[[autodoc]] generation.GenerateEncoderDecoderOutput

@@ -74,13 +73,11 @@ We document here all output types.

[[autodoc]] generation.GenerateBeamEncoderDecoderOutput

-

## LogitsProcessor

A [`LogitsProcessor`] can be used to modify the prediction scores of a language model head for

generation.

-

[[autodoc]] AlternatingCodebooksLogitsProcessor

- __call__

@@ -174,8 +171,6 @@ generation.

[[autodoc]] WatermarkLogitsProcessor

- __call__

-

-

## StoppingCriteria

A [`StoppingCriteria`] can be used to change when to stop generation (other than EOS token). Please note that this is exclusively available to our PyTorch implementations.

@@ -300,7 +295,6 @@ A [`Constraint`] can be used to force the generation to include specific tokens

- to_legacy_cache

- from_legacy_cache

-

## Watermark Utils

[[autodoc]] WatermarkingConfig

diff --git a/docs/source/en/internal/import_utils.md b/docs/source/en/internal/import_utils.md

index 0d76c2bbe33a..4a9915378a1f 100644

--- a/docs/source/en/internal/import_utils.md

+++ b/docs/source/en/internal/import_utils.md

@@ -22,8 +22,8 @@ worked around. We don't want for all users of `transformers` to have to install

we therefore mark those as soft dependencies rather than hard dependencies.

The transformers toolkit is not made to error-out on import of a model that has a specific dependency; instead, an

-object for which you are lacking a dependency will error-out when calling any method on it. As an example, if

-`torchvision` isn't installed, the fast image processors will not be available.

+object for which you are lacking a dependency will error-out when calling any method on it. As an example, if

+`torchvision` isn't installed, the fast image processors will not be available.

This object is still importable:

@@ -60,7 +60,7 @@ PyTorch dependency

**Tokenizers**: All files starting with `tokenization_` and ending with `_fast` have an automatic `tokenizers` dependency

-**Vision**: All files starting with `image_processing_` have an automatic dependency to the `vision` dependency group;

+**Vision**: All files starting with `image_processing_` have an automatic dependency to the `vision` dependency group;

at the time of writing, this only contains the `pillow` dependency.

**Vision + Torch + Torchvision**: All files starting with `image_processing_` and ending with `_fast` have an automatic

@@ -71,7 +71,7 @@ All of these automatic dependencies are added on top of the explicit dependencie

### Explicit Object Dependencies

We add a method called `requires` that is used to explicitly specify the dependencies of a given object. As an

-example, the `Trainer` class has two hard dependencies: `torch` and `accelerate`. Here is how we specify these

+example, the `Trainer` class has two hard dependencies: `torch` and `accelerate`. Here is how we specify these

required dependencies:

```python

diff --git a/docs/source/en/internal/model_debugging_utils.md b/docs/source/en/internal/model_debugging_utils.md

index 262113575f42..553a5ce56845 100644

--- a/docs/source/en/internal/model_debugging_utils.md

+++ b/docs/source/en/internal/model_debugging_utils.md

@@ -21,10 +21,8 @@ provides for it.

Most of those are only useful if you are adding new models in the library.

-

## Model addition debuggers

-

### Model addition debugger - context manager for model adders

This context manager is a power user tool intended for model adders. It tracks all forward calls within a model forward

@@ -72,7 +70,6 @@ with model_addition_debugger_context(

```

-

### Reading results

The debugger generates two files from the forward call, both with the same base name, but ending either with

@@ -221,9 +218,9 @@ path reference to the associated `.safetensors` file. Each tensor is written to

the state dictionary. File names are constructed using the `module_path` as a prefix with a few possible postfixes that

are built recursively.

-* Module inputs are denoted with the `_inputs` and outputs by `_outputs`.

-* `list` and `tuple` instances, such as `args` or function return values, will be postfixed with `_{index}`.

-* `dict` instances will be postfixed with `_{key}`.

+* Module inputs are denoted with the `_inputs` and outputs by `_outputs`.

+* `list` and `tuple` instances, such as `args` or function return values, will be postfixed with `_{index}`.

+* `dict` instances will be postfixed with `_{key}`.
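The recursive naming rule in the bullets above can be sketched in a few lines of Python. This is a minimal illustration, not the debugger's actual code: the helper `tensor_file_names`, the example module path, and the `args`/`kwargs` layout are all assumptions made for the example.

```python
from typing import Any, Iterator


def tensor_file_names(prefix: str, value: Any) -> Iterator[str]:
    """Yield one file-name stem per leaf value, building postfixes recursively."""
    if isinstance(value, (list, tuple)):
        # list/tuple entries are postfixed with their position: `_{index}`
        for index, item in enumerate(value):
            yield from tensor_file_names(f"{prefix}_{index}", item)
    elif isinstance(value, dict):
        # dict entries are postfixed with their key: `_{key}`
        for key, item in value.items():
            yield from tensor_file_names(f"{prefix}_{key}", item)
    else:
        # leaf value (a tensor in the real tool; a placeholder string here)
        yield prefix


# Hypothetical module path and call arguments, for illustration only.
module_path = "model.layers.0.self_attn"
inputs = {"args": ["hidden_states"], "kwargs": {"attention_mask": "mask"}}
names = list(tensor_file_names(f"{module_path}_inputs", inputs))
print(names)
```

Each nesting level appends one postfix, so a value nested inside `kwargs` ends up with both the `_kwargs` and the key postfix in its name.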

### Comparing between implementations

@@ -231,10 +228,8 @@ Once the forward passes of two models have been traced by the debugger, one can

below: we can see slight differences between these two implementations' key projection layer. Inputs are mostly

identical, but not quite. Looking through the file differences makes it easier to pinpoint which layer is wrong.

-

-

### Limitations and scope

This feature will only work for torch-based models, and would require more work and case-by-case approach for say

@@ -254,13 +249,14 @@ layers.

This small util is a power user tool intended for model adders and maintainers. It lists all test methods

existing in `test_modeling_common.py`, inherited by all model tester classes, and scans the repository to measure

-how many tests are being skipped and for which models.

+how many tests are being skipped and for which models.

### Rationale

When porting models to transformers, tests fail as they should, and sometimes `test_modeling_common` feels irreconcilable with the peculiarities of our brand new model. But how can we be sure we're not breaking everything by adding a seemingly innocent skip?

This utility:

+

- scans all test_modeling_common methods

- looks for times where a method is skipped

- returns a summary json you can load as a DataFrame/inspect
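To make the three steps above concrete, here is a rough sketch of how skipped test methods could be detected and summarized as JSON. It is not the code in `utils/scan_skipped_tests.py`: the `find_skipped_tests` helper, the toy test class, and the output shape are assumptions for illustration, and only plain `@unittest.skip(...)` decorators are handled.

```python
import ast
import json
from typing import Dict

# A toy test file, standing in for a real model test module.
SOURCE = '''
import unittest

class ToyModelTest(unittest.TestCase):
    @unittest.skip("not supported by this toy model")
    def test_attention_outputs(self):
        pass

    def test_forward(self):
        pass
'''


def find_skipped_tests(source: str) -> Dict[str, str]:
    """Map each skipped test method name to its skip reason."""
    skipped = {}
    for node in ast.walk(ast.parse(source)):
        if not isinstance(node, ast.FunctionDef) or not node.name.startswith("test_"):
            continue
        for decorator in node.decorator_list:
            # Match calls such as `unittest.skip("reason")` or `skip("reason")`.
            if isinstance(decorator, ast.Call) and ast.unparse(decorator.func).endswith("skip"):
                reason = ""
                if decorator.args and isinstance(decorator.args[0], ast.Constant):
                    reason = str(decorator.args[0].value)
                skipped[node.name] = reason
    return skipped


summary = find_skipped_tests(SOURCE)
print(json.dumps(summary, indent=2))
```

A dictionary like `summary` serializes directly to JSON, which is what makes the result easy to load into a DataFrame for inspection.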

@@ -269,8 +265,7 @@ This utility:

-

-### Usage

+### Usage

You can run the skipped test analyzer in two ways:

@@ -286,7 +281,7 @@ python utils/scan_skipped_tests.py --output_dir path/to/output

**Example output:**

-```

+```text

🔬 Parsing 331 model test files once each...

📝 Aggregating 224 tests...

(224/224) test_update_candidate_strategy_with_matches_1es_3d_is_nonecodet_schedule_fa_kwargs

diff --git a/docs/source/en/internal/pipelines_utils.md b/docs/source/en/internal/pipelines_utils.md

index 6ea6de9a61b8..23856e5639c3 100644

--- a/docs/source/en/internal/pipelines_utils.md

+++ b/docs/source/en/internal/pipelines_utils.md

@@ -20,7 +20,6 @@ This page lists all the utility functions the library provides for pipelines.

Most of those are only useful if you are studying the code of the models in the library.

-

## Argument handling

[[autodoc]] pipelines.ArgumentHandler

diff --git a/docs/source/en/jan.md b/docs/source/en/jan.md

index ff580496c81b..95309f46cd04 100644

--- a/docs/source/en/jan.md

+++ b/docs/source/en/jan.md

@@ -25,7 +25,7 @@ You are now ready to chat!

To conclude this example, let's look into a more advanced use-case. If you have a beefy machine to serve models with, but prefer using Jan on a different device, you need to add port forwarding. If you have `ssh` access from your Jan machine into your server, you can set this up by running the following command in your Jan machine's terminal:

-```

+```bash

ssh -N -f -L 8000:localhost:8000 your_server_account@your_server_IP -p port_to_ssh_into_your_server

```

diff --git a/docs/source/en/kv_cache.md b/docs/source/en/kv_cache.md

index f0a781cba4fc..f318c73d28a9 100644

--- a/docs/source/en/kv_cache.md

+++ b/docs/source/en/kv_cache.md

@@ -67,7 +67,7 @@ out = model.generate(**inputs, do_sample=False, max_new_tokens=20, past_key_valu

## Fixed-size cache

-The default [`DynamicCache`] prevents you from taking advantage of most just-in-time (JIT) optimizations because the cache size isn't fixed. JIT optimizations enable you to maximize latency at the expense of memory usage. All of the following cache types are compatible with JIT optimizations like [torch.compile](./llm_optims#static-kv-cache-and-torchcompile) to accelerate generation.

+The default [`DynamicCache`] prevents you from taking advantage of most just-in-time (JIT) optimizations because the cache size isn't fixed. JIT optimizations enable you to reduce latency at the expense of memory usage. All of the following cache types are compatible with JIT optimizations like [torch.compile](./llm_optims#static-kv-cache-and-torchcompile) to accelerate generation.

A fixed-size cache ([`StaticCache`]) pre-allocates a specific maximum cache size for the key/value pairs. You can generate up to the maximum cache size without needing to modify it. However, a fixed (and usually large) size for the key/value states means that, while generating, many cache positions are masked because they should not take part in the attention computation. This makes it easy to `compile` the decoding stage, but it wastes computation on masked positions. As with all things, it's a trade-off: it works well if you generate several sequences of roughly the same length, but may be suboptimal if you have, for example, one very long sequence followed by only short ones (the fixed cache size would be large, and much of it would be wasted on the short sequences). Make sure you understand the impact if you use it!
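The trade-off described above can be put into rough numbers. The sketch below is a simplified model, not how Transformers accounts for memory or compute: it assumes every sequence gets `max_cache_len` pre-allocated slots and only counts how many of them are still masked once generation finishes. The helper name `masked_fraction` and the example lengths are made up for illustration.

```python
from typing import Sequence


def masked_fraction(max_cache_len: int, sequence_lengths: Sequence[int]) -> float:
    """Fraction of pre-allocated cache slots that stay masked (unused)."""
    if max_cache_len <= 0 or not sequence_lengths:
        raise ValueError("need a positive cache size and at least one sequence")
    if max(sequence_lengths) > max_cache_len:
        raise ValueError("a sequence does not fit in the fixed-size cache")
    allocated = max_cache_len * len(sequence_lengths)
    used = sum(sequence_lengths)
    return 1 - used / allocated


# Sequences of similar length: little of the cache is wasted.
similar = masked_fraction(1024, [900, 950, 1000])
# One long sequence, then short ones: most of the cache is wasted.
mixed = masked_fraction(1024, [1000, 50, 60])
print(f"similar lengths: {similar:.1%} masked, mixed lengths: {mixed:.1%} masked")
```

Under these assumptions the mixed workload leaves well over half of the allocated slots masked, which is the case where a fixed-size cache is least attractive.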

@@ -213,7 +213,7 @@ A cache can also work in iterative generation settings where there is back-and-f

For iterative generation with a cache, start by initializing an empty cache class and then you can feed in your new prompts. Keep track of dialogue history with a [chat template](./chat_templating).

-The following example demonstrates [Llama-2-7b-chat-hf](https://huggingface.co/meta-llama/Llama-2-7b-chat-hf). If you’re using a different chat-style model, [`~PreTrainedTokenizer.apply_chat_template`] may process messages differently. It might cut out important tokens depending on how the Jinja template is written.

+The following example demonstrates [Llama-2-7b-chat-hf](https://huggingface.co/meta-llama/Llama-2-7b-chat-hf). If you're using a different chat-style model, [`~PreTrainedTokenizer.apply_chat_template`] may process messages differently. It might cut out important tokens depending on how the Jinja template is written.

For example, some models use special `


@@ -24,7 +23,7 @@ rendered properly in your Markdown viewer.

+alt="drawing" width="600"/>

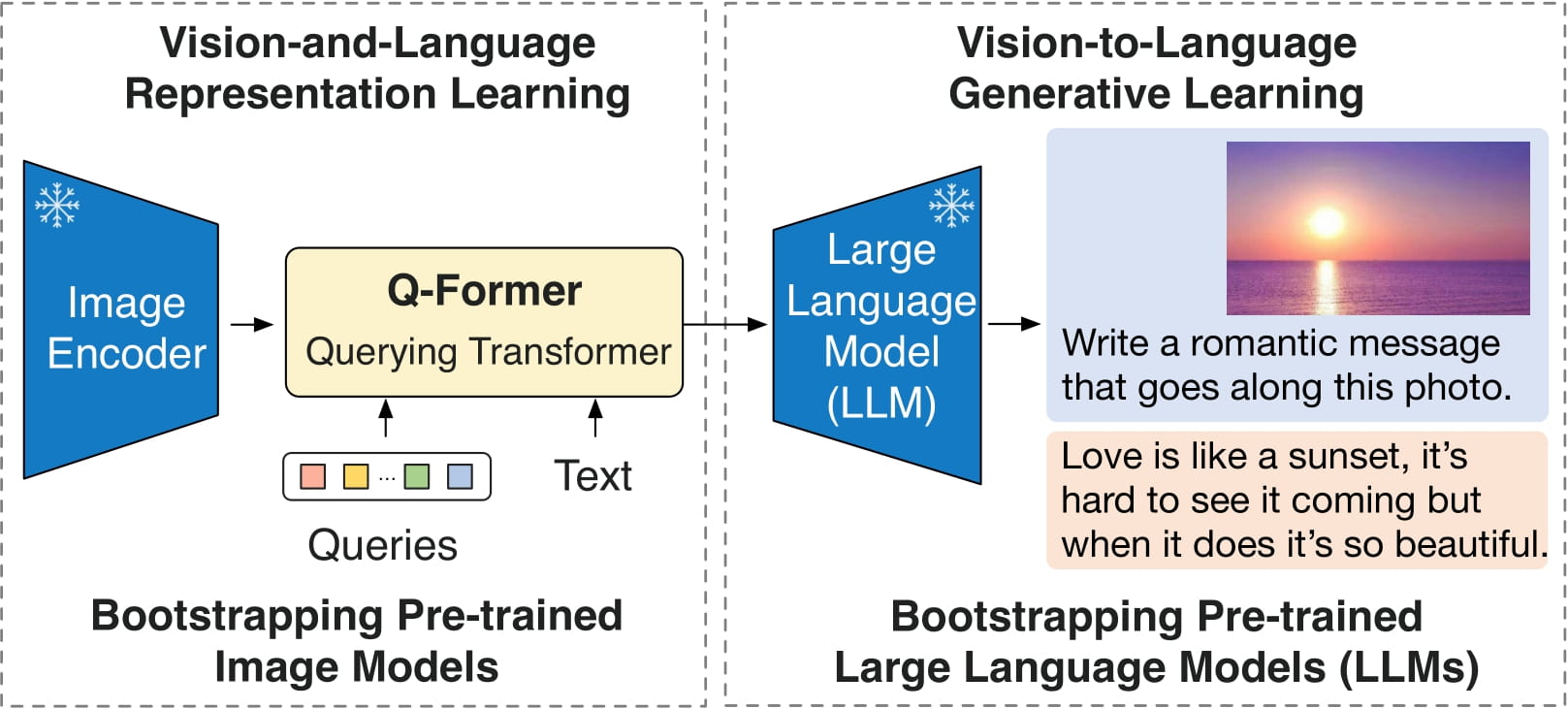

BLIP-2 architecture. Taken from the original paper.

diff --git a/docs/source/en/model_doc/blip.md b/docs/source/en/model_doc/blip.md

index 13a2a5731a5f..5e727050f6ee 100644

--- a/docs/source/en/model_doc/blip.md

+++ b/docs/source/en/model_doc/blip.md

@@ -25,7 +25,6 @@ rendered properly in your Markdown viewer.

[BLIP](https://huggingface.co/papers/2201.12086) (Bootstrapped Language-Image Pretraining) is a vision-language pretraining (VLP) framework designed for *both* understanding and generation tasks. Most existing pretrained models are only good at one or the other. It uses a captioner to generate captions and a filter to remove the noisy captions. This increases training data quality and more effectively uses the messy web data.

-

You can find all the original BLIP checkpoints under the [BLIP](https://huggingface.co/collections/Salesforce/blip-models-65242f40f1491fbf6a9e9472) collection.

> [!TIP]

@@ -129,7 +128,7 @@ Refer to this [notebook](https://github.com/huggingface/notebooks/blob/main/exam

## BlipTextLMHeadModel

[[autodoc]] BlipTextLMHeadModel

-- forward

+ - forward

## BlipVisionModel

diff --git a/docs/source/en/model_doc/bloom.md b/docs/source/en/model_doc/bloom.md

index 805379338e32..51e2970c25f6 100644

--- a/docs/source/en/model_doc/bloom.md

+++ b/docs/source/en/model_doc/bloom.md

@@ -43,17 +43,19 @@ A list of official Hugging Face and community (indicated by 🌎) resources to h

- [`BloomForCausalLM`] is supported by this [causal language modeling example script](https://github.com/huggingface/transformers/tree/main/examples/pytorch/language-modeling#gpt-2gpt-and-causal-language-modeling) and [notebook](https://colab.research.google.com/github/huggingface/notebooks/blob/main/examples/language_modeling.ipynb).

See also:

+

- [Causal language modeling task guide](../tasks/language_modeling)

- [Text classification task guide](../tasks/sequence_classification)

- [Token classification task guide](../tasks/token_classification)

- [Question answering task guide](../tasks/question_answering)

-

⚡️ Inference

+

- A blog on [Optimization story: Bloom inference](https://huggingface.co/blog/bloom-inference-optimization).

- A blog on [Incredibly Fast BLOOM Inference with DeepSpeed and Accelerate](https://huggingface.co/blog/bloom-inference-pytorch-scripts).

⚙️ Training

+

- A blog on [The Technology Behind BLOOM Training](https://huggingface.co/blog/bloom-megatron-deepspeed).

## BloomConfig

diff --git a/docs/source/en/model_doc/blt.md b/docs/source/en/model_doc/blt.md

new file mode 100644

index 000000000000..254cf6c0f44a

--- /dev/null

+++ b/docs/source/en/model_doc/blt.md

@@ -0,0 +1,97 @@

+

+*This model was released on 2024-12-13 and added to Hugging Face Transformers on 2025-09-19.*

+

+


+

+

@@ -52,7 +50,6 @@ alt="drawing" width="600"/>

This model was contributed by [joaogante](https://huggingface.co/joaogante) and [RaushanTurganbay](https://huggingface.co/RaushanTurganbay).

The original code can be found [here](https://github.com/facebookresearch/chameleon).

-

## Usage tips

- We advise users to use `padding_side="left"` when computing batched generation as it leads to more accurate results. Simply make sure to set `processor.tokenizer.padding_side = "left"` before generating.
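The effect of left padding can be illustrated with a small library-free sketch. The helper `pad_batch` and the pad id `0` are hypothetical and chosen only for illustration; in practice the processor's tokenizer performs the padding once `padding_side` is set.

```python
def pad_batch(sequences, pad_id=0, padding_side="left"):
    """Pad variable-length token id lists to a common length (illustrative helper)."""
    max_len = max(len(seq) for seq in sequences)
    padded = []
    for seq in sequences:
        pad = [pad_id] * (max_len - len(seq))
        # Left padding puts the pad tokens before the prompt, right padding after it.
        padded.append(pad + list(seq) if padding_side == "left" else list(seq) + pad)
    return padded


batch = [[5, 6, 7], [8, 9]]
left = pad_batch(batch, padding_side="left")
right = pad_batch(batch, padding_side="right")

print(left)   # [[5, 6, 7], [0, 8, 9]]
print(right)  # [[5, 6, 7], [8, 9, 0]]
```

With left padding, the last position of every row holds a real prompt token, so generated tokens continue directly from the prompt. With right padding, the shorter prompt would be followed by pad tokens before any new token is appended.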

diff --git a/docs/source/en/model_doc/chinese_clip.md b/docs/source/en/model_doc/chinese_clip.md

index 7ed4d503c00f..96b094ccd91b 100644

--- a/docs/source/en/model_doc/chinese_clip.md

+++ b/docs/source/en/model_doc/chinese_clip.md

@@ -119,4 +119,4 @@ Currently, following scales of pretrained Chinese-CLIP models are available on

## ChineseCLIPVisionModel

[[autodoc]] ChineseCLIPVisionModel

- - forward

\ No newline at end of file

+ - forward

diff --git a/docs/source/en/model_doc/clipseg.md b/docs/source/en/model_doc/clipseg.md

index e27d49ffe484..099fd4fb1bac 100644

--- a/docs/source/en/model_doc/clipseg.md

+++ b/docs/source/en/model_doc/clipseg.md

@@ -47,7 +47,7 @@ can be formulated. Finally, we find our system to adapt well

to generalized queries involving affordances or properties*

+alt="drawing" width="600"/>

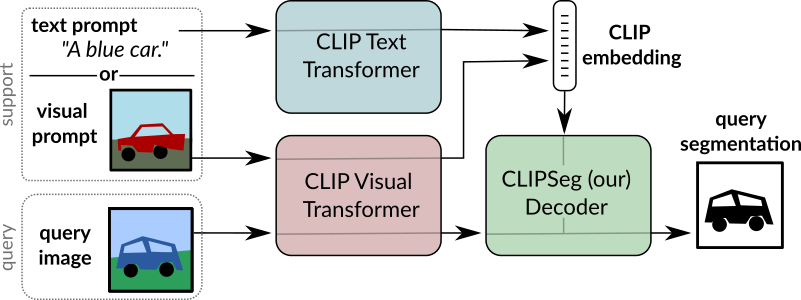

CLIPSeg overview. Taken from the original paper.

@@ -106,4 +106,4 @@ A list of official Hugging Face and community (indicated by 🌎) resources to h

## CLIPSegForImageSegmentation

[[autodoc]] CLIPSegForImageSegmentation

- - forward

\ No newline at end of file

+ - forward

diff --git a/docs/source/en/model_doc/clvp.md b/docs/source/en/model_doc/clvp.md

index 926438a3c1f5..eead4a546435 100644

--- a/docs/source/en/model_doc/clvp.md

+++ b/docs/source/en/model_doc/clvp.md

@@ -29,29 +29,25 @@ The abstract from the paper is the following:

*In recent years, the field of image generation has been revolutionized by the application of autoregressive transformers and DDPMs. These approaches model the process of image generation as a step-wise probabilistic processes and leverage large amounts of compute and data to learn the image distribution. This methodology of improving performance need not be confined to images. This paper describes a way to apply advances in the image generative domain to speech synthesis. The result is TorToise - an expressive, multi-voice text-to-speech system.*

-

This model was contributed by [Susnato Dhar](https://huggingface.co/susnato).

The original code can be found [here](https://github.com/neonbjb/tortoise-tts).

-

## Usage tips

1. CLVP is an integral part of the Tortoise TTS model.

2. CLVP can be used to compare different generated speech candidates with the provided text, and the best speech tokens are forwarded to the diffusion model.

3. The use of the [`ClvpModelForConditionalGeneration.generate()`] method is strongly recommended for tortoise usage.

-4. Note that the CLVP model expects audio sampled at 22.05 kHz, unlike other audio models, which expect 16 kHz.

-

+4. Note that the CLVP model expects audio sampled at 22.05 kHz, unlike other audio models, which expect 16 kHz.

## Brief Explanation:

- The [`ClvpTokenizer`] tokenizes the text input, and the [`ClvpFeatureExtractor`] extracts the log mel-spectrogram from the desired audio.

- [`ClvpConditioningEncoder`] takes those text tokens and audio representations and converts them into embeddings conditioned on the text and audio.

- The [`ClvpForCausalLM`] uses those embeddings to generate multiple speech candidates.

-- Each speech candidate is passed through the speech encoder ([`ClvpEncoder`]) which converts them into a vector representation, and the text encoder ([`ClvpEncoder`]) converts the text tokens into the same latent space.

-- At the end, we compare each speech vector with the text vector to see which speech vector is most similar to the text vector.

+- Each speech candidate is passed through the speech encoder ([`ClvpEncoder`]) which converts them into a vector representation, and the text encoder ([`ClvpEncoder`]) converts the text tokens into the same latent space.

+- At the end, we compare each speech vector with the text vector to see which speech vector is most similar to the text vector.

- [`ClvpModelForConditionalGeneration.generate()`] compresses all of the logic described above into a single method.
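The final comparison step described above can be sketched without any library. This is only an illustration of ranking candidates against a text vector: the text does not name the similarity measure, so cosine similarity is an assumption here, and `rank_candidates` and the toy vectors are hypothetical names and values, not part of the CLVP API.

```python
import math


def cosine_similarity(a, b):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def rank_candidates(text_vector, speech_vectors):
    """Return candidate indices sorted from most to least similar to the text."""
    scores = [cosine_similarity(text_vector, s) for s in speech_vectors]
    order = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)
    return order, scores


# Toy vectors standing in for encoder outputs in the shared latent space.
text_vector = [1.0, 0.0, 1.0]
speech_vectors = [
    [0.0, 1.0, 0.0],  # orthogonal to the text vector
    [2.0, 0.0, 2.0],  # same direction as the text vector
    [1.0, 1.0, 0.0],  # partially aligned
]

order, scores = rank_candidates(text_vector, speech_vectors)
best = order[0]  # index of the candidate closest to the text
print(order)
```

In the real model the vectors come from the speech and text encoders; only the ranking idea is shown here.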

-

Example :

```python

@@ -74,7 +70,6 @@ Example :

>>> generated_output = model.generate(**processor_output)

```

-

## ClvpConfig

[[autodoc]] ClvpConfig

@@ -128,4 +123,3 @@ Example :

## ClvpDecoder

[[autodoc]] ClvpDecoder

-

diff --git a/docs/source/en/model_doc/code_llama.md b/docs/source/en/model_doc/code_llama.md

index 60e9cb4c3cf2..a46e1f05b32a 100644

--- a/docs/source/en/model_doc/code_llama.md

+++ b/docs/source/en/model_doc/code_llama.md

@@ -143,6 +143,7 @@ visualizer("""def func(a, b):

- Infilling is only available in the 7B and 13B base models, and not in the Python, Instruct, 34B, or 70B models.

- Use the `

-## Overview

+## Overview

DePlot was proposed in the paper [DePlot: One-shot visual language reasoning by plot-to-table translation](https://huggingface.co/papers/2212.10505) from Fangyu Liu, Julian Martin Eisenschlos, Francesco Piccinno, Syrine Krichene, Chenxi Pang, Kenton Lee, Mandar Joshi, Wenhu Chen, Nigel Collier, Yasemin Altun.

@@ -36,8 +36,7 @@ DePlot is a Visual Question Answering subset of `Pix2Struct` architecture. It re

Currently one checkpoint is available for DePlot:

-- `google/deplot`: DePlot fine-tuned on ChartQA dataset

-

+- `google/deplot`: DePlot fine-tuned on ChartQA dataset

```python

from transformers import AutoProcessor, Pix2StructForConditionalGeneration

@@ -57,6 +56,7 @@ print(processor.decode(predictions[0], skip_special_tokens=True))

## Fine-tuning

To fine-tune DePlot, refer to the pix2struct [fine-tuning notebook](https://github.com/huggingface/notebooks/blob/main/examples/image_captioning_pix2struct.ipynb). For `Pix2Struct` models, we have found that fine-tuning with Adafactor and a cosine learning rate scheduler leads to faster convergence:

+

```python

from transformers.optimization import Adafactor, get_cosine_schedule_with_warmup

@@ -68,4 +68,4 @@ scheduler = get_cosine_schedule_with_warmup(optimizer, num_warmup_steps=1000, nu

DePlot is a model trained using `Pix2Struct` architecture. For API reference, see [`Pix2Struct` documentation](pix2struct).

-

\ No newline at end of file

+

diff --git a/docs/source/en/model_doc/depth_anything.md b/docs/source/en/model_doc/depth_anything.md

index 5ac7007595ff..44774c961eaa 100644

--- a/docs/source/en/model_doc/depth_anything.md

+++ b/docs/source/en/model_doc/depth_anything.md

@@ -86,4 +86,4 @@ Image.fromarray(depth.astype("uint8"))

## DepthAnythingForDepthEstimation

[[autodoc]] DepthAnythingForDepthEstimation

- - forward

\ No newline at end of file

+ - forward

diff --git a/docs/source/en/model_doc/depth_anything_v2.md b/docs/source/en/model_doc/depth_anything_v2.md

index e8637ba6192c..fbcf2248f658 100644

--- a/docs/source/en/model_doc/depth_anything_v2.md

+++ b/docs/source/en/model_doc/depth_anything_v2.md

@@ -110,4 +110,4 @@ If you're interested in submitting a resource to be included here, please feel f

## DepthAnythingForDepthEstimation

[[autodoc]] DepthAnythingForDepthEstimation

- - forward

\ No newline at end of file

+ - forward

diff --git a/docs/source/en/model_doc/depth_pro.md b/docs/source/en/model_doc/depth_pro.md

index 85423359ceb0..c19703cdccc3 100644

--- a/docs/source/en/model_doc/depth_pro.md

+++ b/docs/source/en/model_doc/depth_pro.md

@@ -84,12 +84,13 @@ alt="drawing" width="600"/>

The `DepthProForDepthEstimation` model uses a `DepthProEncoder` to encode the input image and a `FeatureFusionStage` to fuse the output features from the encoder.

The `DepthProEncoder` further uses two encoders:

+

- `patch_encoder`

- - Input image is scaled with multiple ratios, as specified in the `scaled_images_ratios` configuration.

- - Each scaled image is split into smaller **patches** of size `patch_size` with overlapping areas determined by `scaled_images_overlap_ratios`.

- - These patches are processed by the **`patch_encoder`**

+ - Input image is scaled with multiple ratios, as specified in the `scaled_images_ratios` configuration.

+ - Each scaled image is split into smaller **patches** of size `patch_size` with overlapping areas determined by `scaled_images_overlap_ratios`.

+ - These patches are processed by the **`patch_encoder`**

- `image_encoder`

- - Input image is also rescaled to `patch_size` and processed by the **`image_encoder`**

+ - Input image is also rescaled to `patch_size` and processed by the **`image_encoder`**

Both these encoders can be configured via `patch_model_config` and `image_model_config` respectively, both of which are separate `Dinov2Model` by default.
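The overlapping patch split performed for the `patch_encoder` can be pictured with a library-free sketch. It is not the `DepthProEncoder` implementation: the helpers `patch_starts` and `split_into_patches` are hypothetical, and the stride rule `patch_size * (1 - overlap_ratio)` is an assumption made for illustration.

```python
def patch_starts(image_size, patch_size, overlap_ratio):
    """Start offsets of overlapping patches along one axis."""
    if image_size <= patch_size:
        return [0]
    # Assumed stride rule: neighbouring patches share `overlap_ratio` of their extent.
    stride = max(1, int(patch_size * (1 - overlap_ratio)))
    starts = list(range(0, image_size - patch_size + 1, stride))
    # Make sure the final patch reaches the image border.
    if starts[-1] != image_size - patch_size:
        starts.append(image_size - patch_size)
    return starts


def split_into_patches(image, patch_size, overlap_ratio):
    """Split a 2-D image (list of rows) into overlapping square patches."""
    height, width = len(image), len(image[0])
    patches = []
    for top in patch_starts(height, patch_size, overlap_ratio):
        for left in patch_starts(width, patch_size, overlap_ratio):
            patches.append(
                [row[left:left + patch_size] for row in image[top:top + patch_size]]
            )
    return patches


# 8x8 toy "image" whose pixel value encodes its position.
image = [[r * 8 + c for c in range(8)] for r in range(8)]
patches = split_into_patches(image, patch_size=4, overlap_ratio=0.5)
print(len(patches))  # 9 patches: 3 start offsets per axis (0, 2, 4)
```

In the model this split is repeated for every ratio in `scaled_images_ratios`, and the resulting patches are batched through the `patch_encoder`.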

@@ -102,12 +103,14 @@ The network is supplemented with a focal length estimation head. A small convolu

The `use_fov_model` parameter in `DepthProConfig` controls whether **FOV prediction** is enabled. By default, it is set to `False` to conserve memory and computation. When enabled, the **FOV encoder** is instantiated based on the `fov_model_config` parameter, which defaults to a `Dinov2Model`. The `use_fov_model` parameter can also be passed when initializing the `DepthProForDepthEstimation` model.

The pretrained model at checkpoint `apple/DepthPro-hf` uses the FOV encoder. To use the pretrained model without the FOV encoder, set `use_fov_model=False` when loading the model, which saves computation.

+

```py

>>> from transformers import DepthProForDepthEstimation

>>> model = DepthProForDepthEstimation.from_pretrained("apple/DepthPro-hf", use_fov_model=False)

```

To instantiate a new model with the FOV encoder, set `use_fov_model=True` in the config.

+

```py

>>> from transformers import DepthProConfig, DepthProForDepthEstimation

>>> config = DepthProConfig(use_fov_model=True)

@@ -115,6 +118,7 @@ To instantiate a new model with FOV encoder, set `use_fov_model=True` in the con

```

Or set `use_fov_model=True` when initializing the model, which overrides the value in config.

+

```py

>>> from transformers import DepthProConfig, DepthProForDepthEstimation

>>> config = DepthProConfig()

@@ -123,13 +127,13 @@ Or set `use_fov_model=True` when initializing the model, which overrides the val

### Using Scaled Dot Product Attention (SDPA)

-PyTorch includes a native scaled dot-product attention (SDPA) operator as part of `torch.nn.functional`. This function

-encompasses several implementations that can be applied depending on the inputs and the hardware in use. See the

-[official documentation](https://pytorch.org/docs/stable/generated/torch.nn.functional.scaled_dot_product_attention.html)

+PyTorch includes a native scaled dot-product attention (SDPA) operator as part of `torch.nn.functional`. This function

+encompasses several implementations that can be applied depending on the inputs and the hardware in use. See the

+[official documentation](https://pytorch.org/docs/stable/generated/torch.nn.functional.scaled_dot_product_attention.html)

or the [GPU Inference](https://huggingface.co/docs/transformers/main/en/perf_infer_gpu_one#pytorch-scaled-dot-product-attention)

page for more information.
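For reference, the quantity that SDPA computes is `softmax(Q K^T / sqrt(d)) V`. The sketch below is a plain-Python reference computation without masking or dropout, meant only to make the formula concrete; it is not how PyTorch implements its fused kernels, and the function and variable names are illustrative.

```python
import math


def softmax(row):
    """Numerically stable softmax over a list of floats."""
    m = max(row)
    exps = [math.exp(x - m) for x in row]
    total = sum(exps)
    return [e / total for e in exps]


def scaled_dot_product_attention(query, key, value):
    """Compute softmax(Q K^T / sqrt(d)) V for 2-D lists of shape (seq_len, dim)."""
    d = len(query[0])
    output = []
    for q in query:
        # Similarity of this query to every key, scaled by sqrt(d).
        scores = [sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(d) for k in key]
        weights = softmax(scores)
        # Weighted average of the value rows.
        output.append(
            [sum(w * v[j] for w, v in zip(weights, value)) for j in range(len(value[0]))]
        )
    return output


q = [[1.0, 0.0], [0.0, 1.0]]
k = [[1.0, 0.0], [0.0, 1.0]]
v = [[1.0, 2.0], [3.0, 4.0]]
out = scaled_dot_product_attention(q, k, v)
print(out)
```

Each output row is a convex combination of the value rows, weighted toward the keys that best match the query.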

-SDPA is used by default for `torch>=2.1.1` when an implementation is available, but you may also set

+SDPA is used by default for `torch>=2.1.1` when an implementation is available, but you may also set

`attn_implementation="sdpa"` in `from_pretrained()` to explicitly request SDPA to be used.

```py

@@ -156,8 +160,8 @@ A list of official Hugging Face and community (indicated by 🌎) resources to h

- Official Implementation: [apple/ml-depth-pro](https://github.com/apple/ml-depth-pro)

- DepthPro Inference Notebook: [DepthPro Inference](https://github.com/qubvel/transformers-notebooks/blob/main/notebooks/DepthPro_inference.ipynb)

- DepthPro for Super Resolution and Image Segmentation

- - Read blog on Medium: [Depth Pro: Beyond Depth](https://medium.com/@raoarmaghanshakir040/depth-pro-beyond-depth-9d822fc557ba)

- - Code on Github: [geetu040/depthpro-beyond-depth](https://github.com/geetu040/depthpro-beyond-depth)

+ - Read blog on Medium: [Depth Pro: Beyond Depth](https://medium.com/@raoarmaghanshakir040/depth-pro-beyond-depth-9d822fc557ba)

+ - Code on Github: [geetu040/depthpro-beyond-depth](https://github.com/geetu040/depthpro-beyond-depth)

If you're interested in submitting a resource to be included here, please feel free to open a Pull Request and we'll review it! The resource should ideally demonstrate something new instead of duplicating an existing resource.

diff --git a/docs/source/en/model_doc/detr.md b/docs/source/en/model_doc/detr.md

index 425ab0f04c51..46c9d3dadce6 100644

--- a/docs/source/en/model_doc/detr.md

+++ b/docs/source/en/model_doc/detr.md

@@ -16,9 +16,9 @@ rendered properly in your Markdown viewer.

*This model was released on 2020-05-26 and added to Hugging Face Transformers on 2021-06-09.*

-

## Usage Tips

### Generate text

@@ -84,7 +83,6 @@ generate_text = tokenizer.decode(output_ids, skip_special_tokens=True)

This model was contributed by [Anton Vlasjuk](https://huggingface.co/AntonV).

The original code can be found [here](https://github.com/PaddlePaddle/ERNIE).

-

## Ernie4_5Config

[[autodoc]] Ernie4_5Config

diff --git a/docs/source/en/model_doc/ernie4_5_moe.md b/docs/source/en/model_doc/ernie4_5_moe.md

index 20c4dcfd5435..fb6b8d791bec 100644

--- a/docs/source/en/model_doc/ernie4_5_moe.md

+++ b/docs/source/en/model_doc/ernie4_5_moe.md

@@ -40,7 +40,6 @@ Other models from the family can be found at [Ernie 4.5](./ernie4_5).

-

## Usage Tips

### Generate text

@@ -167,7 +166,6 @@ generate_text = tokenizer.decode(output_ids, skip_special_tokens=True)

This model was contributed by [Anton Vlasjuk](https://huggingface.co/AntonV).

The original code can be found [here](https://github.com/PaddlePaddle/ERNIE).

-

## Ernie4_5_MoeConfig

[[autodoc]] Ernie4_5_MoeConfig

diff --git a/docs/source/en/model_doc/ernie_m.md b/docs/source/en/model_doc/ernie_m.md

index 508fe2f596b2..e044614e7644 100644

--- a/docs/source/en/model_doc/ernie_m.md

+++ b/docs/source/en/model_doc/ernie_m.md

@@ -40,7 +40,6 @@ The abstract from the paper is the following:

*Recent studies have demonstrated that pre-trained cross-lingual models achieve impressive performance in downstream cross-lingual tasks. This improvement benefits from learning a large amount of monolingual and parallel corpora. Although it is generally acknowledged that parallel corpora are critical for improving the model performance, existing methods are often constrained by the size of parallel corpora, especially for lowresource languages. In this paper, we propose ERNIE-M, a new training method that encourages the model to align the representation of multiple languages with monolingual corpora, to overcome the constraint that the parallel corpus size places on the model performance. Our key insight is to integrate back-translation into the pre-training process. We generate pseudo-parallel sentence pairs on a monolingual corpus to enable the learning of semantic alignments between different languages, thereby enhancing the semantic modeling of cross-lingual models. Experimental results show that ERNIE-M outperforms existing cross-lingual models and delivers new state-of-the-art results in various cross-lingual downstream tasks.*

This model was contributed by [Susnato Dhar](https://huggingface.co/susnato). The original code can be found [here](https://github.com/PaddlePaddle/PaddleNLP/tree/develop/paddlenlp/transformers/ernie_m).

-

## Usage tips

- Ernie-M is a BERT-like model so it is a stacked Transformer Encoder.

@@ -59,7 +58,6 @@ This model was contributed by [Susnato Dhar](https://huggingface.co/susnato). Th

[[autodoc]] ErnieMConfig

-

## ErnieMTokenizer

[[autodoc]] ErnieMTokenizer

@@ -68,7 +66,6 @@ This model was contributed by [Susnato Dhar](https://huggingface.co/susnato). Th

- create_token_type_ids_from_sequences

- save_vocabulary

-

## ErnieMModel

[[autodoc]] ErnieMModel

@@ -79,19 +76,16 @@ This model was contributed by [Susnato Dhar](https://huggingface.co/susnato). Th

[[autodoc]] ErnieMForSequenceClassification

- forward

-

## ErnieMForMultipleChoice

[[autodoc]] ErnieMForMultipleChoice

- forward

-

## ErnieMForTokenClassification

[[autodoc]] ErnieMForTokenClassification

- forward

-

## ErnieMForQuestionAnswering

[[autodoc]] ErnieMForQuestionAnswering

diff --git a/docs/source/en/model_doc/esm.md b/docs/source/en/model_doc/esm.md

index e83e2d5aa1da..a6190a71f020 100644

--- a/docs/source/en/model_doc/esm.md

+++ b/docs/source/en/model_doc/esm.md

@@ -44,12 +44,10 @@ sequence alignment (MSA) step at inference time, which means that ESMFold checkp

they do not require a database of known protein sequences and structures with associated external query tools

to make predictions, and are much faster as a result.

-

The abstract from

"Biological structure and function emerge from scaling unsupervised learning to 250

million protein sequences" is

-

*In the field of artificial intelligence, a combination of scale in data and model capacity enabled by unsupervised

learning has led to major advances in representation learning and statistical generation. In the life sciences, the

anticipated growth of sequencing promises unprecedented data on natural sequence diversity. Protein language modeling

@@ -63,7 +61,6 @@ can be identified by linear projections. Representation learning produces featur

applications, enabling state-of-the-art supervised prediction of mutational effect and secondary structure and

improving state-of-the-art features for long-range contact prediction.*

-

The abstract from

"Language models of protein sequences at the scale of evolution enable accurate structure prediction" is

diff --git a/docs/source/en/model_doc/evolla.md b/docs/source/en/model_doc/evolla.md

index a39103a06d12..ea8605050599 100644

--- a/docs/source/en/model_doc/evolla.md

+++ b/docs/source/en/model_doc/evolla.md

@@ -25,7 +25,7 @@ Evolla is an advanced 80-billion-parameter protein-language generative model des

The abstract from the paper is the following:

-*Proteins, nature’s intricate molecular machines, are the products of billions of years of evolution and play fundamental roles in sustaining life. Yet, deciphering their molecular language - that is, understanding how protein sequences and structures encode and determine biological functions - remains a corner-stone challenge in modern biology. Here, we introduce Evolla, an 80 billion frontier protein-language generative model designed to decode the molecular language of proteins. By integrating information from protein sequences, structures, and user queries, Evolla generates precise and contextually nuanced insights into protein function. A key innovation of Evolla lies in its training on an unprecedented AI-generated dataset: 546 million protein question-answer pairs and 150 billion word tokens, designed to reflect the immense complexity and functional diversity of proteins. Post-pretraining, Evolla integrates Direct Preference Optimization (DPO) to refine the model based on preference signals and Retrieval-Augmented Generation (RAG) for external knowledge incorporation, improving response quality and relevance. To evaluate its performance, we propose a novel framework, Instructional Response Space (IRS), demonstrating that Evolla delivers expert-level insights, advancing research in proteomics and functional genomics while shedding light on the molecular logic encoded in proteins. The online demo is available at http://www.chat-protein.com/.*

+*Proteins, nature's intricate molecular machines, are the products of billions of years of evolution and play fundamental roles in sustaining life. Yet, deciphering their molecular language - that is, understanding how protein sequences and structures encode and determine biological functions - remains a corner-stone challenge in modern biology. Here, we introduce Evolla, an 80 billion frontier protein-language generative model designed to decode the molecular language of proteins. By integrating information from protein sequences, structures, and user queries, Evolla generates precise and contextually nuanced insights into protein function. A key innovation of Evolla lies in its training on an unprecedented AI-generated dataset: 546 million protein question-answer pairs and 150 billion word tokens, designed to reflect the immense complexity and functional diversity of proteins. Post-pretraining, Evolla integrates Direct Preference Optimization (DPO) to refine the model based on preference signals and Retrieval-Augmented Generation (RAG) for external knowledge incorporation, improving response quality and relevance. To evaluate its performance, we propose a novel framework, Instructional Response Space (IRS), demonstrating that Evolla delivers expert-level insights, advancing research in proteomics and functional genomics while shedding light on the molecular logic encoded in proteins. The online demo is available at http://www.chat-protein.com/.*

Examples:

@@ -75,7 +75,6 @@ Tips:

- This model was contributed by [Xibin Bayes Zhou](https://huggingface.co/XibinBayesZhou).

- The original code can be found [here](https://github.com/westlake-repl/Evolla).

-

## EvollaConfig

[[autodoc]] EvollaConfig

diff --git a/docs/source/en/model_doc/exaone4.md b/docs/source/en/model_doc/exaone4.md

index 69d7ee0b2a81..9482f5be2c06 100644

--- a/docs/source/en/model_doc/exaone4.md

+++ b/docs/source/en/model_doc/exaone4.md

@@ -20,7 +20,7 @@ rendered properly in your Markdown viewer.

## Overview

**[EXAONE 4.0](https://github.com/LG-AI-EXAONE/EXAONE-4.0)** model is the language model, which integrates a **Non-reasoning mode** and **Reasoning mode** to achieve both the excellent usability of [EXAONE 3.5](https://github.com/LG-AI-EXAONE/EXAONE-3.5) and the advanced reasoning abilities of [EXAONE Deep](https://github.com/LG-AI-EXAONE/EXAONE-Deep). To pave the way for the agentic AI era, EXAONE 4.0 incorporates essential features such as agentic tool use, and its multilingual capabilities are extended

-to support Spanish in addition to English and Korean.

+to support Spanish in addition to English and Korean.

The EXAONE 4.0 model series consists of two sizes: a mid-size **32B** model optimized for high performance, and a small-size **1.2B** model designed for on-device applications.

@@ -33,7 +33,6 @@ For more details, please refer to our [technical report](https://huggingface.co/

All model weights including quantized versions are available at [Huggingface Collections](https://huggingface.co/collections/LGAI-EXAONE/exaone-40-686b2e0069800c835ed48375).

-

## Model Details

### Model Specifications

@@ -57,7 +56,6 @@ All model weights including quantized versions are available at [Huggingface Col

| Tied word embedding | False | True |

| Knowledge cut-off | Nov. 2024 | Nov. 2024 |

-

## Usage tips

### Non-reasoning mode

@@ -206,4 +204,4 @@ print(tokenizer.decode(output[0]))

## Exaone4ForQuestionAnswering

[[autodoc]] Exaone4ForQuestionAnswering

- - forward

\ No newline at end of file

+ - forward

diff --git a/docs/source/en/model_doc/falcon3.md b/docs/source/en/model_doc/falcon3.md

index 368a5457ab6d..3d79a4e225dd 100644

--- a/docs/source/en/model_doc/falcon3.md

+++ b/docs/source/en/model_doc/falcon3.md

@@ -30,5 +30,6 @@ Depth up-scaling for improved reasoning: Building on recent studies on the effec

Knowledge distillation for better tiny models: To provide compact and efficient alternatives, we developed Falcon3-1B-Base and Falcon3-3B-Base by leveraging pruning and knowledge distillation techniques, using less than 100GT of curated high-quality data, thereby redefining pre-training efficiency.

## Resources

+

- [Blog post](https://huggingface.co/blog/falcon3)

- [Models on Huggingface](https://huggingface.co/collections/tiiuae/falcon3-67605ae03578be86e4e87026)

diff --git a/docs/source/en/model_doc/falcon_h1.md b/docs/source/en/model_doc/falcon_h1.md

index 981c00bd626b..48a647cd3797 100644

--- a/docs/source/en/model_doc/falcon_h1.md

+++ b/docs/source/en/model_doc/falcon_h1.md

@@ -21,7 +21,6 @@ The [FalconH1](https://huggingface.co/blog/tiiuae/falcon-h1) model was developed

This model was contributed by [DhiyaEddine](https://huggingface.co/DhiyaEddine), [ybelkada](https://huggingface.co/ybelkada), [JingweiZuo](https://huggingface.co/JingweiZuo), [IlyasChahed](https://huggingface.co/IChahed), and [MaksimVelikanov](https://huggingface.co/yellowvm).

The original code can be found [here](https://github.com/tiiuae/Falcon-H1).

-

## FalconH1Config

| Model | Depth | Dim | Attn Heads | KV | Mamba Heads | d_head | d_state | Ctx Len |

@@ -33,8 +32,6 @@ The original code can be found [here](https://github.com/tiiuae/Falcon-H1).

| H1 7B | 44 | 3072 | 12 | 2 | 24 | 128 / 128 | 256 | 256K |

| H1 34B | 72 | 5120 | 20 | 4 | 32 | 128 / 128 | 256 | 256K |

-

-

[[autodoc]] FalconH1Config

-*This model was released on 2025-07-09 and added to Hugging Face Transformers on 2025-09-15.*

+*This model was released on 2025-07-09 and added to Hugging Face Transformers on 2025-09-18.*

-

## Usage Tips

### Generate text

@@ -167,7 +166,6 @@ generate_text = tokenizer.decode(output_ids, skip_special_tokens=True)

This model was contributed by [Anton Vlasjuk](https://huggingface.co/AntonV).

The original code can be found [here](https://github.com/PaddlePaddle/ERNIE).

-

## Ernie4_5_MoeConfig

[[autodoc]] Ernie4_5_MoeConfig

diff --git a/docs/source/en/model_doc/ernie_m.md b/docs/source/en/model_doc/ernie_m.md

index 508fe2f596b2..e044614e7644 100644

--- a/docs/source/en/model_doc/ernie_m.md

+++ b/docs/source/en/model_doc/ernie_m.md

@@ -40,7 +40,6 @@ The abstract from the paper is the following:

*Recent studies have demonstrated that pre-trained cross-lingual models achieve impressive performance in downstream cross-lingual tasks. This improvement benefits from learning a large amount of monolingual and parallel corpora. Although it is generally acknowledged that parallel corpora are critical for improving the model performance, existing methods are often constrained by the size of parallel corpora, especially for lowresource languages. In this paper, we propose ERNIE-M, a new training method that encourages the model to align the representation of multiple languages with monolingual corpora, to overcome the constraint that the parallel corpus size places on the model performance. Our key insight is to integrate back-translation into the pre-training process. We generate pseudo-parallel sentence pairs on a monolingual corpus to enable the learning of semantic alignments between different languages, thereby enhancing the semantic modeling of cross-lingual models. Experimental results show that ERNIE-M outperforms existing cross-lingual models and delivers new state-of-the-art results in various cross-lingual downstream tasks.*

This model was contributed by [Susnato Dhar](https://huggingface.co/susnato). The original code can be found [here](https://github.com/PaddlePaddle/PaddleNLP/tree/develop/paddlenlp/transformers/ernie_m).

-

## Usage tips
