
Questions about training Gibson scenes #13


Hi @ZhengdiYu, thanks for the detailed post!

Cars: Your analysis is good. For our experiments we initially had a larger learning rate as well; I altered it to stabilize training, but it seems this change was too radical. Thanks for pointing it out!

Scenes:

> Q2. When do you plan to release the code for scenes? Or could you give us a brief introduction or guideline on how to train and test on scenes?

Q3. Yes, using the same setup should be fine; details can be found in the supplementary.

Q4. See my answer on Q1. We used a network checkpoint obtained from ShapeNet training as initialization to speed up training.

> Q5. Do you use different dense pc generation strategies for cars and scenes? Do you use the same script for both of them?

Summary: probably there is something off in your training code, maybe some alignment issue.

Best,

Hey @jchibane,

Thank you for your detailed reply. Regarding your points:

First of all, I don't think it is an alignment issue. I just used your pre-processing script and adapted the filename and data_path of the .npz data so that your dataloader can read the scene data; that's all I did. There is no transformation during training: I just feed the '/pymesh_boundary_{}_samples.npz' files that the script produces to the network. The only difference between cars and scenes is the pre-processing; all other scripts are exactly the same.

Q1. Does this mean that I can load a model pre-trained on cars and then continue training on scenes for 3~4 days with lr 1e-4? Does this mean we need a week to train on scenes?

Q4. This is what I meant: 0.02 and 0.003 are the losses on scenes that I got with your pre-trained model and with my model trained on scenes, respectively. The pre-trained model has the higher loss but the better performance. Do you also load the learning rate? I'll keep trying this and keep you updated.

Q5. Isn't 90K points per cube too many? Some of the scenes have hundreds of cubes.

About your summary:

> probably there is something off in your training code, maybe some alignment issue.

Updates (7.7.2021): For the qualitative examples, see the title of each picture. I did several kinds of evaluation. Let me put it more clearly:

Conclusion:

By the way, there are 2 possible bugs in the data.

Here are 2 screenshots taken during dense_point_cloud generation to help you see the problem; I printed the pred_df at each refinement step:

We can see that after each refinement step, the df in setting 2 sometimes even remains unchanged. The points do not seem to move towards the surface at all, but to circle around it. So I think either the df is wrong or the gradients are wrong. And when I load the model from cars and continue training on scenes, it gradually becomes similar to the situation in setting 2.

Best,
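Printing pred_df at every step can be turned into a simple per-step check. A minimal sketch, assuming a hypothetical log format where `df_history[k][i]` is the predicted df of point i before refinement step k: it reports the fraction of points whose df actually dropped at each step and lists the points that never got closer to the surface (the "circling" behaviour described above). Function names, the tolerance and the toy values are illustrative only, not part of the NDF code.

```python
def fraction_decreased(df_history, tol=1e-6):
    """Per refinement step: fraction of points whose predicted df dropped by more than tol."""
    fractions = []
    for prev, cur in zip(df_history, df_history[1:]):
        dropped = sum(1 for a, b in zip(prev, cur) if b < a - tol)
        fractions.append(dropped / len(prev))
    return fractions


def stalled_points(df_history, tol=1e-6):
    """Indices of points whose df after the last step is not lower than before the first."""
    first, last = df_history[0], df_history[-1]
    return [i for i, (a, b) in enumerate(zip(first, last)) if b >= a - tol]


# Toy log (made-up values): 3 points over 2 refinement steps.
history = [
    [0.050, 0.020, 0.040],
    [0.010, 0.020, 0.041],
    [0.000, 0.020, 0.040],
]
print(fraction_decreased(history))  # [0.3333333333333333, 0.6666666666666666]
print(stalled_points(history))      # [1, 2]
```

With a healthy model the fractions should stay high for the first steps; a low fraction combined with many stalled points points to a gradient (or df) problem rather than a fusion problem.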


Hey Zhengdi, you could try working with our trained model for scenes:

Best,

Hi Julian,

Thank you for your model. I tried it today. Unfortunately, the problem still exists: the iteration takes much more time than with the cars model, and the performance is not even better than before. The inside is good, but there are too many false points outside and on the walls. Are you sure you are using the same settings in the generation script? I use exactly the same script as for cars to generate the dense point cloud for each cube, and then transform the cubes into the world coordinate system. In Open3D, it looks like this:

The only difference between cars and scenes is that we need to fuse the individual cubes into the complete scene after generation. But I don't think the way I fuse the cubes is the problem, because the result with the cars model looks reasonable (see the qualitative results in my previous comment). With this model, however, I can't get a better result.

This is how I transform each cube into the world coordinate system and stack them together; it is just a few lines of code based on your scene_process.py.

Your scene_process.py:

How I fuse individual cubes:

I also tried to decrease the sampling range here from [-1.5, 1.5] to [-1, 1] (i.e. * 2 - 1), which helps to eliminate some of the false points. But many of them still remain:

Lines 27 to 30 in 570d770
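For reference, a minimal sketch of the fuse step under explicit assumptions: each cube's generated points live in a hypothetical normalized local frame [-1, 1]^3, and each cube is described by its world-space minimum corner and edge length. Points outside [-1, 1] are dropped before the transform, which has the same effect as shrinking the sampling range from [-1.5, 1.5] to [-1, 1]. The actual normalization in scene_process.py may differ (e.g. padding around each cube), so the mapping should be checked against that script; the function names are hypothetical.

```python
def cube_to_world(points, corner, size, crop=True):
    """Map points from a cube's local [-1, 1]^3 frame to world coordinates (assumed convention)."""
    world = []
    for p in points:
        if crop and any(c < -1.0 or c > 1.0 for c in p):
            continue  # drop points that fall outside this cube's own extent
        # (c + 1) / 2 maps [-1, 1] -> [0, 1], i.e. the inverse of "* 2 - 1"
        world.append(tuple(k + (c + 1.0) / 2.0 * size for k, c in zip(corner, p)))
    return world


def fuse_cubes(cubes):
    """cubes: iterable of (local_points, corner, size); returns one stacked world-space list."""
    fused = []
    for points, corner, size in cubes:
        fused.extend(cube_to_world(points, corner, size))
    return fused


cube_a = ([(-1.0, -1.0, -1.0), (1.0, 1.0, 1.0), (1.2, 0.0, 0.0)], (2.0, 0.0, 0.0), 0.5)
cube_b = ([(0.0, 0.0, 0.0)], (0.0, 0.0, 0.0), 1.0)
print(fuse_cubes([cube_a, cube_b]))
# [(2.0, 0.0, 0.0), (2.5, 0.5, 0.5), (0.5, 0.5, 0.5)]
```

If neighbouring cubes overlap, the overlap region receives points from both cubes; cropping each cube to its own non-overlapping extent avoids duplicated (and possibly inconsistent) points along cube borders.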

Best,


Hey Julian,

I found that the model you gave me records 150 h of training and 108 epochs. I was wondering how many of those epochs were on cars and how many on scenes? For me it takes ~100 hours to train 40 epochs on cars and ~200 hours to train 20 epochs on scenes, so either way it seems impossible to train ~100 epochs in 150 hours. Could you tell me? Thank you!

Best,
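The timing argument can be checked with a few lines of arithmetic. Using the per-epoch times quoted above (measured on one particular setup; the authors' hardware may well be faster), even 108 epochs of the faster cars training alone would exceed 150 hours, and solving for a cars/scenes split yields more cars epochs than the 108 reported:

```python
cars_h_per_epoch = 100 / 40    # ~100 h for 40 epochs on cars   -> 2.5 h/epoch
scenes_h_per_epoch = 200 / 20  # ~200 h for 20 epochs on scenes -> 10.0 h/epoch

budget_h, reported_epochs = 150, 108

# Lower bound: all 108 epochs spent on the faster cars data.
min_hours = reported_epochs * cars_h_per_epoch
print(min_hours)  # 270.0, already more than the 150 h budget

# Split x cars epochs + (108 - x) scenes epochs so that the total equals 150 h:
# 2.5 * x + 10 * (108 - x) = 150  ->  x = (10 * 108 - 150) / (10 - 2.5)
x = (scenes_h_per_epoch * reported_epochs - budget_h) / (scenes_h_per_epoch - cars_h_per_epoch)
print(x)  # 124.0, more than 108, so no valid split exists at these speeds
```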


Hi Zhengdi,

Thanks for pointing out the problems in the scene processing. Both of them should be resolved now.

Thanks


Hey Aymen,

Are there any other updates? I only found that you have updated the split file, but there are still no scripts for training and testing on scenes.

Best,


There are also some changes in the scene processing file.


I can only see that you now skip the cubes where len(verts_inds) == 0, plus some path fixes. However, I don't think these changes will make a big difference to training or performance. I still couldn't reproduce the performance on scenes with your provided model and scripts.


Have you tried warm-starting the model with a pre-trained model?

Yes, I did. It's even worse than directly using a pre-trained model: the longer it is trained on scenes, the worse the performance gets. I don't know whether that's because I was using igl to compute the UDF.

Could it be due to a wrong df computed by np.abs(igl.signed_distance())? I saw another issue mention that this cannot be used to compute the df for open surfaces. But I think you have also used this function on the cars' open surfaces.
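One library-independent way to test whether the gt_df values are right is to compare them against the distance to the nearest point of a dense sampling of the surface. That distance is well-defined for open surfaces, where inside/outside (and therefore the sign of a signed distance) is not. The sketch below demonstrates the check on an open square patch whose exact UDF is known; for real data, `patch_udf` would be replaced by the igl-based values for a few query points of a cube, and `samples` by dense points sampled from that cube's mesh. All names are hypothetical.

```python
import math


def patch_udf(q):
    """Exact unsigned distance from q to the open square patch {0 <= x, y <= 1, z = 0}."""
    x, y, z = q
    dx = max(-x, 0.0, x - 1.0)  # how far q lies outside the patch along x
    dy = max(-y, 0.0, y - 1.0)
    return math.sqrt(dx * dx + dy * dy + z * z)


def brute_force_udf(q, surface_samples):
    """Approximate UDF: distance to the nearest dense surface sample."""
    return min(math.dist(q, s) for s in surface_samples)


n = 50
h = 1.0 / (n - 1)  # grid spacing of the surface samples
samples = [(i * h, j * h, 0.0) for i in range(n) for j in range(n)]

queries = [(0.5, 0.5, 0.2), (1.3, 0.5, -0.1), (-0.2, 1.4, 0.05), (0.25, 0.75, 0.0)]
errors = [brute_force_udf(q, samples) - patch_udf(q) for q in queries]

# Samples lie on the surface, so the estimate can only overestimate,
# by at most half a grid-cell diagonal.
bound = h * math.sqrt(2) / 2
print(max(errors) <= bound)  # True
```

A gt_df that falls clearly outside this band (e.g. much larger than the brute-force value near an open boundary) would indicate a problem in the ground truth rather than in training.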


@ZhengdiYu, have you had any luck resolving the issues you brought up in this thread? I am also trying to train and test NDF on the Gibson scenes dataset. Would you be able to share the dataloader files and training configs that you used for it? I have pre-processed the data using the scene_process.py script in the NDF-1 repository, and I'm trying to figure out how to get started with training the network on these cubes. Is it correct that we can only use a batch size of 1 for the Gibson scenes dataset because each cube has a different number of points? Thanks!
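On the batch-size question: one common workaround (not something the NDF authors confirmed in this thread) is to resample every cube to the same number of query points, so that cubes can be stacked into a batch. A minimal sketch with hypothetical names; it only handles the query points and their df targets, so it assumes the encoder input for each cube already has a fixed shape.

```python
import random


def resample_fixed(points, dfs, k, rng):
    """Pick exactly k (point, df) pairs: without replacement if the cube has enough
    points, otherwise with replacement. Points and df targets stay aligned."""
    idx = range(len(points))
    if len(points) >= k:
        chosen = rng.sample(idx, k)
    else:
        chosen = [rng.choice(idx) for _ in range(k)]
    return [points[i] for i in chosen], [dfs[i] for i in chosen]


def make_batch(cubes, k, seed=0):
    """cubes: list of (points, dfs) with varying lengths -> batch of equal-length lists."""
    rng = random.Random(seed)
    batch_points, batch_dfs = [], []
    for points, dfs in cubes:
        p, d = resample_fixed(points, dfs, k, rng)
        batch_points.append(p)
        batch_dfs.append(d)
    return batch_points, batch_dfs


# Two toy cubes with different numbers of boundary samples (3 and 10).
cubes = []
for n in (3, 10):
    pts = [(float(i), 0.0, 0.0) for i in range(n)]
    cubes.append((pts, [p[0] * 0.1 for p in pts]))

bp, bd = make_batch(cubes, k=5)
print([len(p) for p in bp])  # [5, 5]
```

Sampling with replacement for small cubes repeats some points within an epoch; an alternative is to skip or merge cubes that have too few boundary samples.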

Hey Julian,

Thank you for your great work!

Cars:

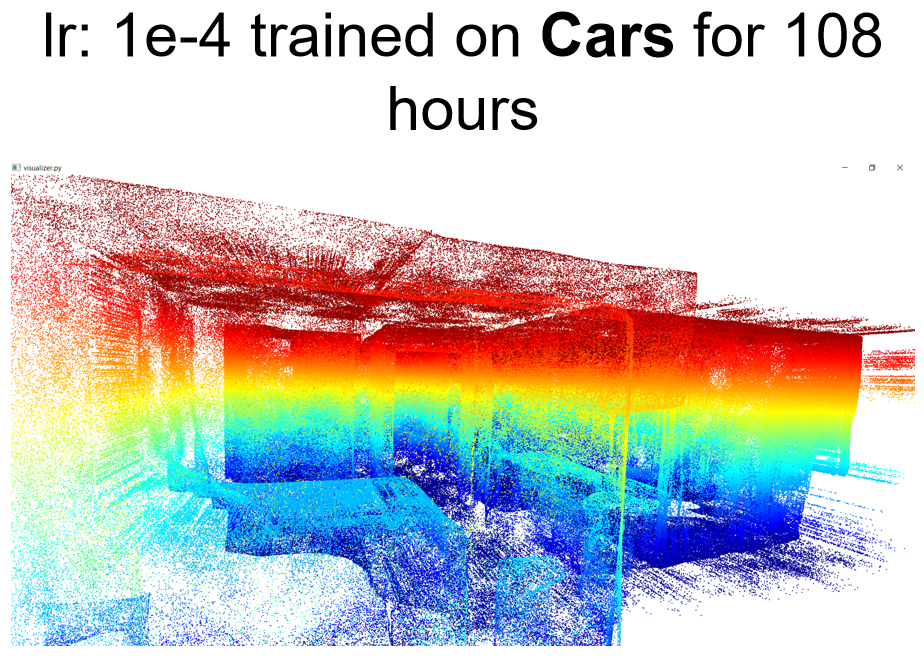

Previously, I trained NDF on ShapeNet cars and found that the learning rate in your code is 1e-6. With this learning rate, convergence is too slow and the performance is not good. So I changed the learning rate to 1e-4, and the result was as good as the pre-trained model.

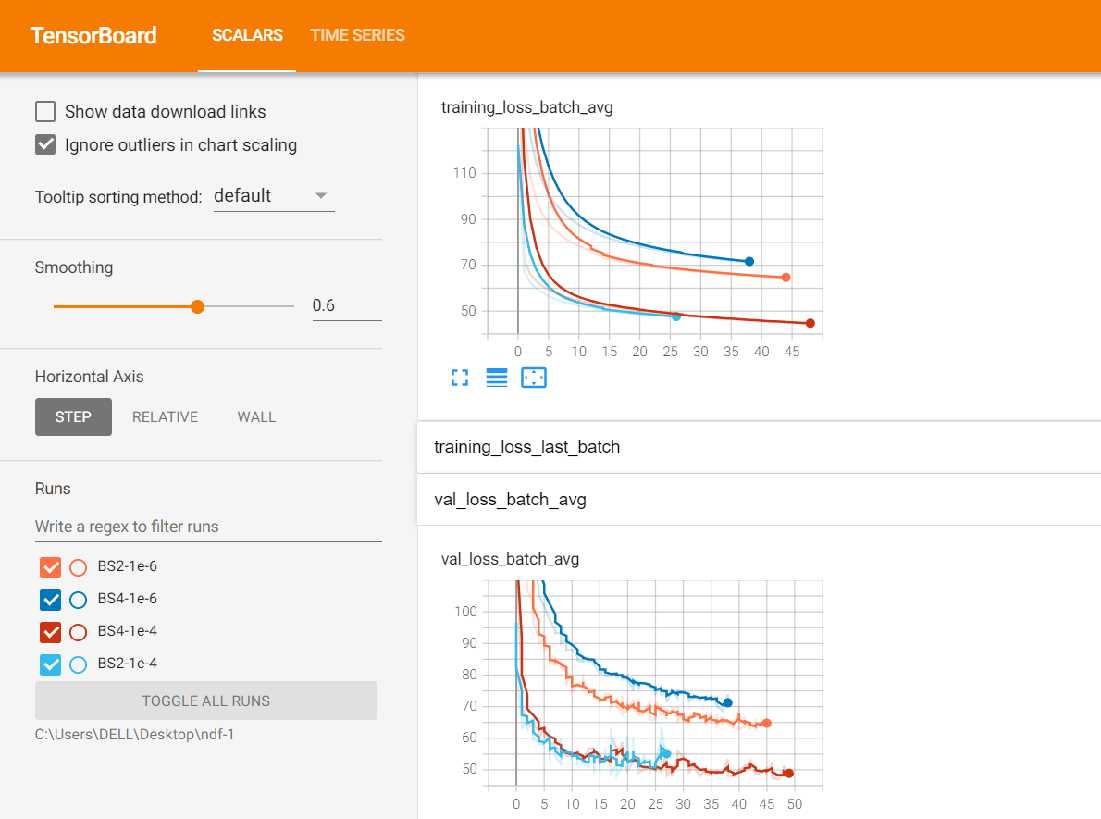

Also, the batch size and the initialization do not affect the performance. Here are the loss values for different batch sizes (BS) and learning rates (lr):

Scenes:

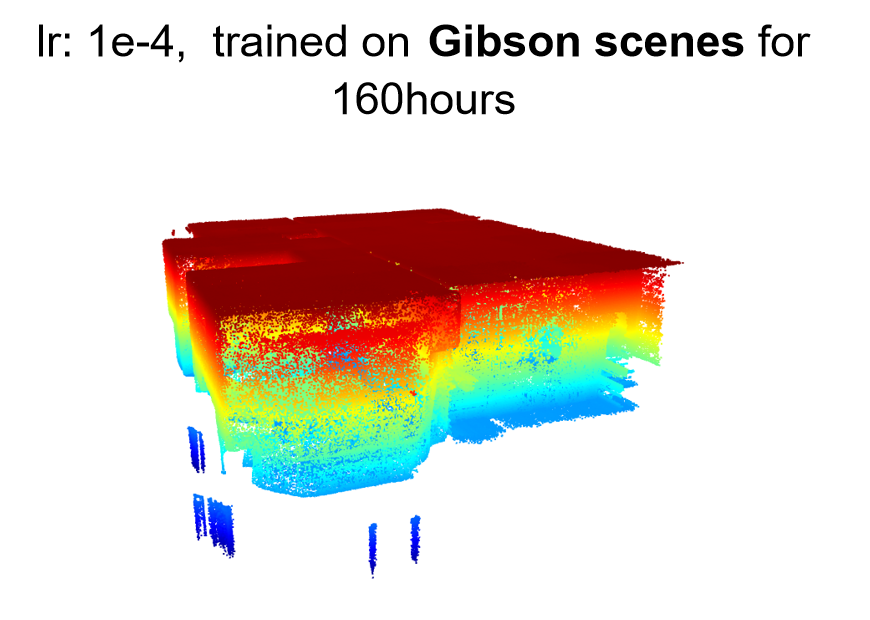

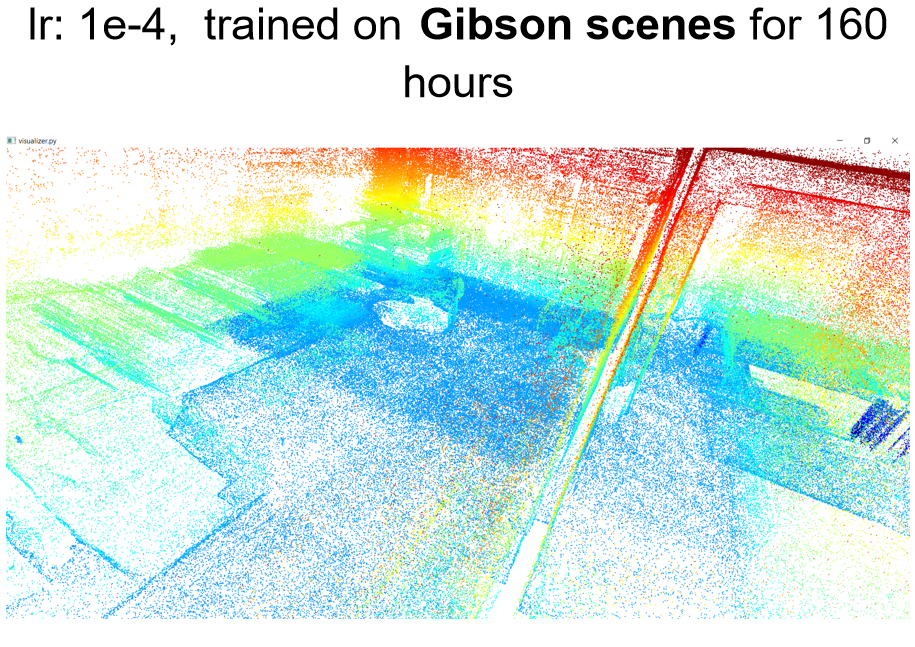

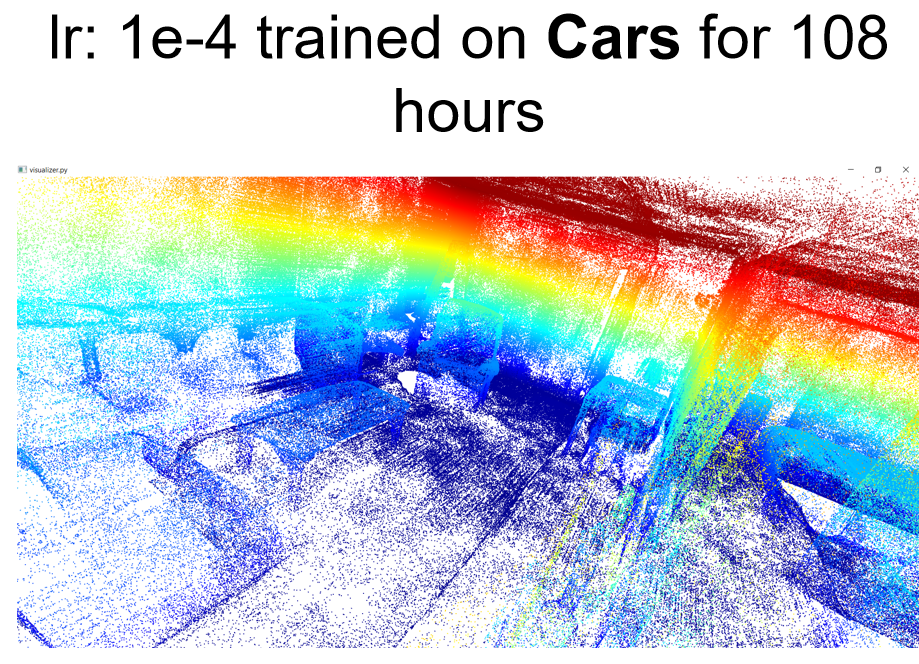

I also tried the learning rate of 1e-4 on scenes. Judging by the loss value, it is also much better than before. However, the loss is still 3~4 times larger than the loss on cars. I also tried applying your model pre-trained on cars directly to scenes, to see how it generalizes, and it is even better than the model trained on scenes. Please look at the example below:

Loss:

My training settings: trained for 160 hours with batch size 1 (I think we can only use 1 because the number of points differs from cube to cube) and lr 1e-4. The other arguments remain the same as for cars (e.g. the threshold). For pre-processing I just used the script you released in the NDF-1 repo, which produces many cubes for each scene. For training I adapted the cars dataloader and fed the split cubes into the network one by one (batch size 1), so the number of steps in each epoch equals the total number of cubes. For cars, 50000 boundary-sampled points are fed into the network, half of the 100000 boundary points sampled from the mesh. So I tried 2 strategies on scenes: one uses all points in the cube as boundary points (decoder input), the other uses half of them. Neither worked well:

Qualitative results:

Questions:

Q1. Do you remember how long it would take to train on scenes? Could you provide a pre-trained model if possible?

Q2. When do you plan to release the code for scenes? Or could you give us a brief introduction or guideline on how to train and test on scenes?

Q3. Have you changed any arguments for training and testing on scenes (e.g. thresholds such as filter_val)? How do you train on scenes? Do you use a batch size of 1 with the pre-processed data? Is there any other difference between training on cars and on scenes (e.g. the number of samples)?

Q4. In the supplementary, Section 1 (Hyperparameters, Network training) says: 'To speed up training we initialized all networks with parameters gained by training on the full ShapeNet'. What does this sentence mean?

(Update: I found that during generation of each cube's dense point cloud, the gradients produced by the model trained on scenes are not as accurate as those of the model trained on cars. For the scenes model, the df is generally smaller at the beginning (more points with df < 0.03), but after 7 refinement steps only a small fraction of the points have a smaller df than before, resulting in fewer points with df < 0.009. So generation takes many more steps and much more time. I think this is due to inaccurate gradients, because after we move the points along the gradient, the df should become smaller. The cars model, on the other hand, has fewer points with df < 0.03 to begin with, but after 7 refinement steps almost all of them are correctly moved closer to the surface, resulting in more points with df < 0.009.) I think something has gone wrong during training; it doesn't make sense that the loss is smaller but the gradients are less accurate. It seems like the ground truth might be incorrect, but the only adaptation I made was switching from pymesh to igl to compute gt_df. That's all.
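To make the gradient argument concrete: for an exact distance field (away from the medial axis), one refinement step p ← p − f(p)·∇f(p)/‖∇f(p)‖ moves a point straight onto the surface, so the df must drop. If the gradient direction is wrong, the step covers the right distance in the wrong direction and the df stays well above zero, which matches the behaviour described above. A minimal sketch, assuming an analytic sphere in place of the network and an artificial, deliberately large gradient error; it is not the NDF generation code.

```python
import math

R = 1.0  # analytic unit sphere standing in for the trained decoder (assumption)


def udf(p):
    """Unsigned distance from p to the sphere of radius R."""
    return abs(math.hypot(*p) - R)


def udf_grad(p):
    """Exact UDF gradient: points away from the surface; undefined at the centre."""
    n = math.hypot(*p)
    if n == 0.0:
        return (0.0, 0.0, 0.0)
    s = 1.0 if n > R else -1.0
    return tuple(s * c / n for c in p)


def normalize(v):
    n = math.hypot(*v)
    return tuple(c / n for c in v) if n > 0.0 else v


def biased_grad(p):
    """Exact gradient plus a fixed error along +y: stand-in for an inaccurate network gradient."""
    gx, gy, gz = udf_grad(p)
    return (gx, gy + 1.0, gz)


def refine_step(p, grad_fn):
    """One refinement step: p <- p - f(p) * grad / |grad|."""
    d = udf(p)
    g = normalize(grad_fn(p))
    return tuple(pc - d * gc for pc, gc in zip(p, g))


p0 = (2.0, 0.0, 0.0)  # df = 1.0
print(udf(refine_step(p0, udf_grad)))     # 0.0: the exact gradient lands on the surface
print(udf(refine_step(p0, biased_grad)))  # about 0.47: same step length, wrong direction
```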

Q5. Do you use different dense pc generation strategies for cars and scenes? Do you use the same script for both of them?

My method: I generate each scene by first generating every cube with the same generation script as for cars (only decreasing sample_num per cube to ~50000) and then stacking the cubes together based on each cube's corner.

However, the iteration that generates the dense point cloud sometimes never terminates, because after an iteration no new points fall within the 'filter_val' threshold (you keep the points with df < filter_val after each iteration). So I tried increasing sample_num for the iteration to 200000 to raise the chance of selecting correct points, but it did not improve the performance; it only partly avoided the infinite loop described.
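The loop can be made to terminate in all cases with an explicit iteration cap and an early exit when no points are near the surface. Below is a minimal sketch of the refine, filter, resample loop as it is described in this thread (7 refinement steps, seeds with df < 0.03, output points with df < filter_val = 0.009, initial samples in [-1.5, 1.5]). Assumptions: an analytic sphere replaces the trained decoder, and the clamp threshold 0.1, the noise scale threshold / 3, max_iterations and all names are placeholder values; it is not the repository's generation code.

```python
import math
import random

R = 1.0  # analytic unit sphere standing in for the trained decoder (assumption)


def udf(p):
    return abs(math.hypot(*p) - R)


def udf_grad(p):
    n = math.hypot(*p)
    if n == 0.0:
        return (0.0, 0.0, 0.0)
    s = 1.0 if n > R else -1.0
    return tuple(s * c / n for c in p)


def refine(p, threshold, steps):
    """Move p along the normalised gradient by the clamped df, `steps` times."""
    for _ in range(steps):
        d = min(udf(p), threshold)  # clamp, mimicking a network trained on truncated df
        g = udf_grad(p)
        n = math.hypot(*g)
        if n == 0.0:
            break  # no usable direction (here: the sphere centre)
        p = tuple(pc - d * gc / n for pc, gc in zip(p, g))
    return p


def generate_dense_pc(num_points, sample_num=2000, refinement_steps=7,
                      threshold=0.1, keep_val=0.03, filter_val=0.009,
                      max_iterations=50, seed=0):
    rng = random.Random(seed)
    # Uniform initial samples in [-1.5, 1.5]^3.
    samples = [tuple(rng.uniform(-1.5, 1.5) for _ in range(3)) for _ in range(sample_num)]
    collected = []
    for _ in range(max_iterations):  # hard cap instead of an open-ended while loop
        samples = [refine(p, threshold, refinement_steps) for p in samples]
        dfs = [udf(p) for p in samples]
        collected.extend(p for p, d in zip(samples, dfs) if d < filter_val)
        if len(collected) >= num_points:
            break
        seeds = [p for p, d in zip(samples, dfs) if d < keep_val]
        if not seeds:
            break  # nothing close to the surface: give up instead of spinning forever
        # Resample around the surviving points with Gaussian noise.
        sigma = threshold / 3.0
        samples = [tuple(c + rng.gauss(0.0, sigma) for c in rng.choice(seeds))
                   for _ in range(sample_num)]
    return collected[:num_points]


points = generate_dense_pc(num_points=3000, sample_num=500)
print(len(points))  # 3000
```

When the cap or the early exit triggers, the function returns fewer points than requested, which is itself a useful signal that the model's df or gradients are off for that cube.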

I think there might be 2 reasons:

Hope you can help me, thanks for your time!

Best,

Zhengdi
