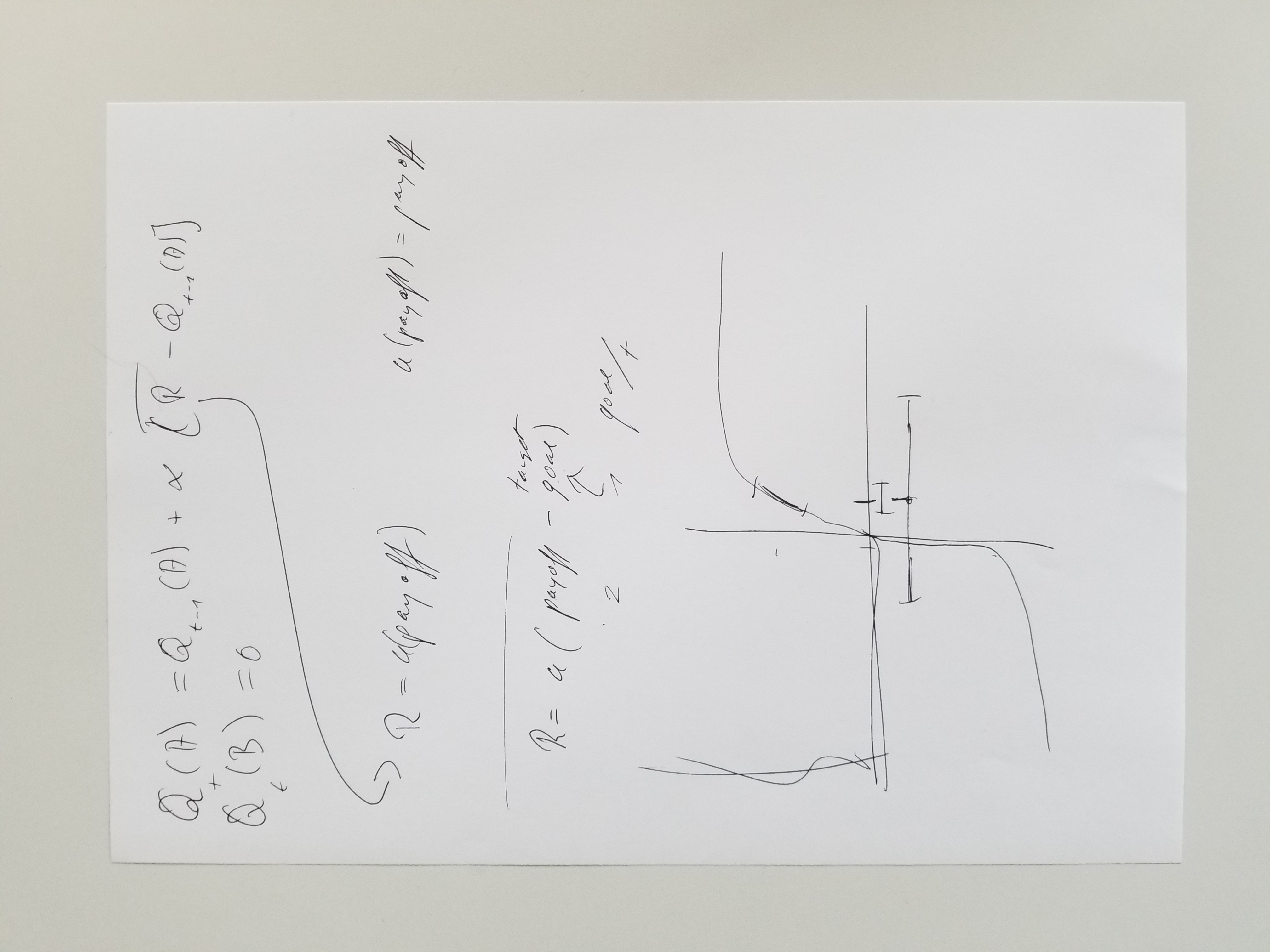

This model is a hack on reinforcement learning that tries to take goals into account.

Basically, the idea is to replace the observed outcome with the difference between the observed outcome and the average amount you'd need per trial to achieve the goal. For example, if you have 10 trials and need 20 points, then you need 2 points per trial on average to reach the goal. If the next outcome is 3, it looks positive (1 above what is needed), while if it is 1, it looks negative (1 below).
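
The arithmetic in this example can be sketched in a few lines of Python. This is only an illustration of the transformation, not the model's actual implementation: the function name `goal_relative_outcome` and its parameters are hypothetical, and the sketch assumes the requirement is simply the points needed divided by the number of trials.

```python
def goal_relative_outcome(outcome, points_needed, trials):
    """Re-express an outcome relative to the average needed per trial.

    Hypothetical helper: names and signature are assumptions for illustration.
    """
    if trials <= 0:
        raise ValueError("trials must be positive")
    # Average number of points needed on each trial to reach the goal.
    required_per_trial = points_needed / trials
    # Positive if the outcome beats the requirement, negative if it falls short.
    return outcome - required_per_trial


# Worked example from the text: 10 trials, 20 points needed (2 per trial).
print(goal_relative_outcome(3, 20, 10))  # 1.0, looks positive
print(goal_relative_outcome(1, 20, 10))  # -1.0, looks negative
```

The transformed value would then stand in for the raw outcome in whatever learning rule the model uses.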