- Pre-1940s: Many of the major mathematical concepts of modern machine learning came from statistics. A few of those major breakthroughs include Bayes' Theorem (1812), the Least Squares Method (1805), and Markov Chains (1913). These techniques are all fundamental to modern machine learning.

- AI Debuts on the Silver Screen (1927): The sci-fi film Metropolis, set in a futuristic city in 2026, introduced audiences to the idea of a thinking machine. Its robot double of Maria, the "False Maria", is often cited as the first robot ever depicted in a film.

- The Turing Test (1950): Alan Turing, an English mathematician often considered the father of artificial intelligence, proposed the Turing Test to determine whether a computer can exhibit intelligence indistinguishable from a human's. To pass the test, a computer must be able to fool a human into believing that it is also human.

- First Computer Learning Program (1952): Machine learning pioneer Arthur Samuel created a program that helped an IBM computer get better at checkers the more it played. Machine learning scientists often use board games because they are both understandable and complex.

- The Perceptron (1957): Frank Rosenblatt, at the Cornell Aeronautical Laboratory, combined Donald Hebb’s model of brain cell interaction with Arthur Samuel’s machine learning efforts to create the perceptron. The perceptron was initially planned as a machine, not a program: although the software was originally designed for the IBM 704, it was installed in a custom-built machine called the Mark 1 perceptron, which had been constructed for image recognition.

Although the perceptron seemed promising, it could not recognize many kinds of visual patterns (such as faces), causing frustration and stalling neural network research. It would be several years before the frustrations of investors and funding agencies faded. Neural network/Machine Learning research struggled until a resurgence during the 1990s.
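To make the perceptron concrete, here is a minimal plain-Python sketch of the standard perceptron learning rule, w ← w + η(y − ŷ)x, with a step activation. The toy datasets (logical AND and XOR) and all function names are illustrative assumptions, not a reproduction of the Mark 1 software. AND is linearly separable, so the rule converges on it; XOR is not, which is the standard textbook illustration of the limits of a single-layer perceptron popularized by Minsky and Papert's book Perceptrons (1969).

```python
def predict(weights, bias, x):
    """Step activation: output 1 if the weighted sum is positive, else 0."""
    total = sum(w * xi for w, xi in zip(weights, x)) + bias
    return 1 if total > 0 else 0


def train_perceptron(data, lr=0.1, epochs=50):
    """Perceptron learning rule on a list of (inputs, label) pairs."""
    n_features = len(data[0][0])
    weights = [0.0] * n_features
    bias = 0.0
    for _ in range(epochs):
        errors = 0
        for x, y in data:
            error = y - predict(weights, bias, x)
            if error != 0:
                errors += 1
                # Nudge the weights and bias toward the correct answer.
                weights = [w + lr * error * xi for w, xi in zip(weights, x)]
                bias += lr * error
        if errors == 0:  # every example classified correctly: converged
            break
    return weights, bias


def accuracy(data, weights, bias):
    return sum(predict(weights, bias, x) == y for x, y in data) / len(data)


AND = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]
XOR = [((0, 0), 0), ((0, 1), 1), ((1, 0), 1), ((1, 1), 0)]

w_and, b_and = train_perceptron(AND)
w_xor, b_xor = train_perceptron(XOR)
print("AND accuracy:", accuracy(AND, w_and, b_and))
print("XOR accuracy:", accuracy(XOR, w_xor, b_xor))
```

No choice of weights lets a single straight line separate the XOR classes, so the XOR accuracy can never reach 1.0 however long this trains.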

- The 1970s and early 1980s: In the late 1970s and early 1980s, neural network research was largely abandoned by computer science and AI researchers. The machine learning community, which included a large number of researchers and technicians, was reorganized into a separate field and struggled for nearly a decade. Its goal shifted from achieving artificial intelligence to solving practical problems and providing services, and its focus shifted from approaches inherited from AI research to methods and tactics from probability theory and statistics. During this time, the ML community kept working on neural networks, and the field flourished in the 1990s. Most of this success came from the growth of the Internet, which provided an ever-growing supply of digital data and a way to deliver ML-based services.

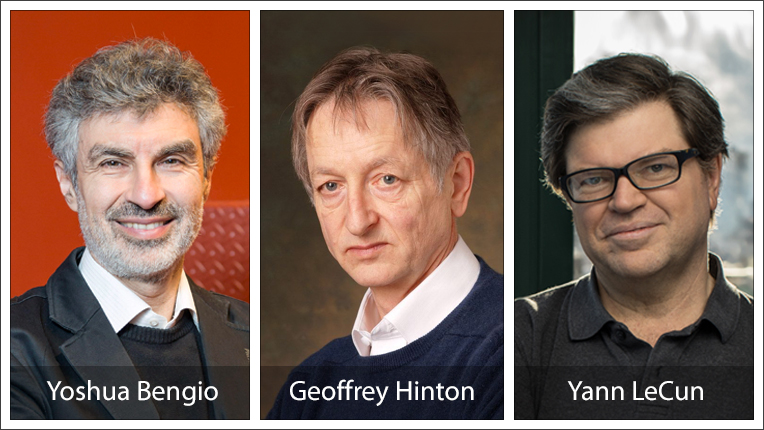

- Backpropagation (1986): Although backpropagation was derived by multiple researchers in the early 1960s, it was not until 1986, with the publication of the paper "Learning Representations by Back-Propagating Errors" by Rumelhart, Hinton, and Williams, that the machine learning community at large appreciated the importance of the algorithm. Yann LeCun, a pioneer of the Convolutional Neural Network architecture, proposed the modern form of the back-propagation learning algorithm for neural networks in his Ph.D. thesis in 1987. Only much later, in 1993, did Eric Wan win an international pattern recognition contest using backpropagation.

By the 1980s, hand-engineered features had become the de facto standard in many fields, especially computer vision, since experts knew from experiments which features (e.g., lines, circles, edges, and blobs in computer vision) made learning simpler. However, hand-engineering successful features requires a lot of knowledge and practice. More importantly, since it is not automatic, it is usually very slow. Backpropagation was one of the first methods able to demonstrate that artificial neural networks could learn good internal representations, i.e., their hidden layers learned nontrivial features. Even more importantly, because the algorithm is efficient and domain experts were no longer required to discover appropriate features, backpropagation allowed artificial neural networks to be applied to a much wider range of problems that had previously been off-limits due to time and cost constraints.
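Backpropagation is easiest to see on a tiny network. The plain-Python sketch below trains a 2-4-1 network with sigmoid activations on XOR, a pattern a single perceptron cannot represent, using per-example gradient descent on squared error. The network size, learning rate, epoch count, and random seed are arbitrary illustrative choices, not values from the papers above. The key step is propagating the output error back through the output weights to get an error signal for each hidden unit, which is how the hidden layer learns its own features instead of relying on hand-engineered ones.

```python
import math
import random


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


# XOR truth table: (inputs, target)
XOR = [((0.0, 0.0), 0.0), ((0.0, 1.0), 1.0), ((1.0, 0.0), 1.0), ((1.0, 1.0), 0.0)]


def init_network(n_in=2, n_hidden=4, seed=0):
    """Small random weights; the seed only makes the run reproducible."""
    rng = random.Random(seed)
    return {
        "W1": [[rng.uniform(-1, 1) for _ in range(n_in)] for _ in range(n_hidden)],
        "b1": [0.0] * n_hidden,
        "W2": [rng.uniform(-1, 1) for _ in range(n_hidden)],
        "b2": 0.0,
    }


def forward(net, x):
    """Return the hidden activations h and the network output y."""
    h = [sigmoid(sum(w * xi for w, xi in zip(row, x)) + b)
         for row, b in zip(net["W1"], net["b1"])]
    y = sigmoid(sum(w * hj for w, hj in zip(net["W2"], h)) + net["b2"])
    return h, y


def mean_squared_error(net, data):
    return sum((forward(net, x)[1] - t) ** 2 for x, t in data) / len(data)


def backprop_step(net, x, t, lr):
    """One gradient-descent update on the loss 0.5 * (y - t)**2 for a single example."""
    h, y = forward(net, x)
    # Error signal at the output unit (chain rule through the sigmoid).
    delta_out = (y - t) * y * (1.0 - y)
    # Propagate it back through the output weights to each hidden unit.
    delta_hidden = [delta_out * w * hj * (1.0 - hj) for w, hj in zip(net["W2"], h)]
    # Update the output layer, then the hidden layer (deltas use the old weights).
    net["W2"] = [w - lr * delta_out * hj for w, hj in zip(net["W2"], h)]
    net["b2"] -= lr * delta_out
    for j, d in enumerate(delta_hidden):
        net["W1"][j] = [w - lr * d * xi for w, xi in zip(net["W1"][j], x)]
        net["b1"][j] -= lr * d


net = init_network()
initial_loss = mean_squared_error(net, XOR)
for epoch in range(10000):
    for x, t in XOR:
        backprop_step(net, x, t, lr=0.5)
final_loss = mean_squared_error(net, XOR)

print(f"loss before: {initial_loss:.4f}, after: {final_loss:.4f}")
for x, t in XOR:
    print(x, t, round(forward(net, x)[1], 3))
```

Updating after every example (rather than after a full pass over the data) keeps the code short; both styles compute the same per-example gradients.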

- Machine Learning Applications (the 1990s): Work on machine learning shifted from a knowledge-driven approach to a data-driven approach. Scientists began creating programs for computers to analyze large amounts of data and draw conclusions, or "learn", from the results. Machine learning began to be applied in data mining, adaptive software and web applications, text learning, and language learning.

- Deep Blue Beats Garry Kasparov (1996-1997): Public awareness of AI increased greatly when an IBM computer named Deep Blue beat world chess champion Garry Kasparov in the first game of a match in 1996. Kasparov won that match, but in 1997 an upgraded Deep Blue won a second match 3½ games to 2½. Although Deep Blue played impressive chess, it relied largely on brute computing power to do so, including 480 special-purpose ‘chess chips’. It worked by searching 6 to 20 moves ahead at each position, scoring positions with an evaluation function whose parameters had been tuned by analyzing thousands of master games.
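"Searching moves ahead" is game-tree search. Below is a minimal minimax sketch over a hand-made toy tree, assuming leaves already hold evaluation scores from the maximizing player's point of view. The tree values are hypothetical, and Deep Blue itself used a far more elaborate alpha-beta search on custom hardware, which this does not attempt to reproduce.

```python
def minimax(node, maximizing=True):
    """Return the best achievable score from `node`.

    A node is either a number (a leaf's evaluation score) or a list of child nodes.
    Players alternate: the maximizer picks the highest-scoring child,
    the minimizer picks the lowest.
    """
    if isinstance(node, (int, float)):
        return node
    scores = [minimax(child, not maximizing) for child in node]
    return max(scores) if maximizing else min(scores)


# Hypothetical two-ply tree: three candidate moves, each with three opponent replies.
tree = [
    [3, 12, 8],
    [2, 4, 6],
    [14, 5, 2],
]

best_score = minimax(tree)
# After our move it is the opponent's turn, so each subtree is scored as a minimizer.
best_move = max(range(len(tree)), key=lambda i: minimax(tree[i], maximizing=False))
print(best_score, best_move)
```

Move 2 contains the largest leaf (14), but a minimax player avoids it because the opponent can answer with 2; move 0 guarantees at least 3.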

- Neural Net research gets a reboot as "Deep Learning" (2006): Back in the early '80s, when Hinton and his colleagues first started working on neural networks, computers weren’t fast or powerful enough to process the enormous collections of data that neural nets require. Their success was limited, and the AI community turned its back on them, working to find shortcuts to brain-like behavior rather than trying to mimic the operation of the brain.

But a few resolute researchers carried on. According to Hinton and LeCun, it was rough going. Even as late as 2004, more than 20 years after Hinton and LeCun first began working on the "back-propagation" algorithms that seeded their work on neural networks, the rest of the academic world was largely uninterested.

But that year, with a small amount of funding from the Canadian Institute for Advanced Research (CIFAR) and the backing of LeCun and Bengio, Hinton founded the Neural Computation and Adaptive Perception program, an invite-only group of computer scientists, biologists, electrical engineers, neuroscientists, physicists, and psychologists.

By then, they had the computing power they needed to realize many of their earlier ideas. As they came together for regular workshops, their research accelerated. They built more powerful deep learning algorithms that operated on much larger datasets. By the middle of the decade, they were winning global AI competitions. And by the early 2010s, the giants of the web began to notice.

- AlexNet and the Use of GPUs in Machine Learning (2012): In 2012, Alex Krizhevsky released AlexNet, a deeper and much wider version of LeNet trained on GPUs, which won the difficult ImageNet competition by a large margin. The AlexNet paper is considered one of the most influential papers published in computer vision and has spurred many more papers employing CNNs and GPUs to accelerate deep learning.

- Google Brain (2012): This was a deep neural network project created by Jeff Dean and Andrew Ng at Google, which focused on pattern detection in images and videos. Because it could draw on Google’s computing resources, it was far larger than earlier neural networks. It was later used to detect objects in YouTube videos.

- DeepFace (2014): This is a Deep Neural Network created by Facebook, which they claimed can recognize people with the same precision as a human can.

- DeepMind (2014): This company was acquired by Google, and its systems learned to play basic video games at the same level as humans. In 2016, its AlphaGo program beat a top professional at the game of Go, which is considered one of the world’s most difficult board games.

- OpenAI (2015): This organization was founded as a non-profit by Elon Musk and others to create safe artificial intelligence that benefits humanity. OpenAI Five became the first AI to beat the world champions at an esports game when it defeated the reigning Dota 2 world champions, OG, at the OpenAI Five Finals on April 13, 2019. OpenAI Five played 180 years' worth of games against itself every day, learning via self-play.

- Amazon Machine Learning Platform (2015): This is part of Amazon Web Services and shows how most big companies want to get involved in machine learning. Amazon says machine learning drives many of its internal systems, from regularly used services such as search recommendations and Alexa to more experimental ones like Prime Air and Amazon Go.

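- YOLO - You Only Look Once: Unified, Real-Time Object Detection (2016): This paper by Joseph Redmon et al. framed object detection as a single regression problem, using one neural network to predict bounding boxes and class probabilities directly from a full image in one evaluation, fast enough for real-time detection.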

- Generative Adversarial Network (2014): Way back in 2014, Ian Goodfellow proposed a revolutionary idea: make two neural networks compete (or collaborate, it’s a matter of perspective) with each other.

One neural network tries to generate realistic data (note that GANs can be used to model any data distribution, but are mainly used for images these days), and the other network tries to discriminate between real data and data generated by the generator network.

Yann LeCun, Facebook’s chief AI scientist, has called GANs “the coolest idea in deep learning in the last 20 years.”
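At the heart of a GAN is a two-player objective. In the original 2014 formulation, the discriminator D is trained to maximize `E[log D(x)] + E[log(1 - D(G(z)))]` on real samples x and generated samples G(z), while the generator G tries to fool it; the same paper suggests that in practice G maximize `log D(G(z))` instead (often called the "non-saturating" loss). The plain-Python sketch below only computes these two losses from discriminator outputs; the networks and the alternating training loop are left out, and the function names are our own.

```python
import math


def discriminator_loss(d_real, d_fake):
    """Loss for the discriminator D (to be minimized).

    d_real: D's outputs (probabilities strictly between 0 and 1) on real samples.
    d_fake: D's outputs on samples produced by the generator.
    D wants d_real close to 1 and d_fake close to 0.
    """
    real_term = sum(math.log(p) for p in d_real) / len(d_real)
    fake_term = sum(math.log(1.0 - p) for p in d_fake) / len(d_fake)
    # D maximizes real_term + fake_term, so its loss is the negative.
    return -(real_term + fake_term)


def generator_loss(d_fake):
    """Non-saturating loss for the generator G: G wants D to output 1 on its samples."""
    return -sum(math.log(p) for p in d_fake) / len(d_fake)


# A discriminator that cannot tell real from fake outputs 0.5 everywhere.
print(discriminator_loss([0.5, 0.5], [0.5, 0.5]))  # 2 * ln(2), about 1.386
print(generator_loss([0.5, 0.5]))                  # ln(2), about 0.693

# A confident, correct discriminator: low D loss, high G loss.
print(discriminator_loss([0.9, 0.95], [0.1, 0.05]))
print(generator_loss([0.1, 0.05]))
```

Training alternates between lowering the discriminator loss (with G fixed) and lowering the generator loss (with D fixed), which is the "competition" described above.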

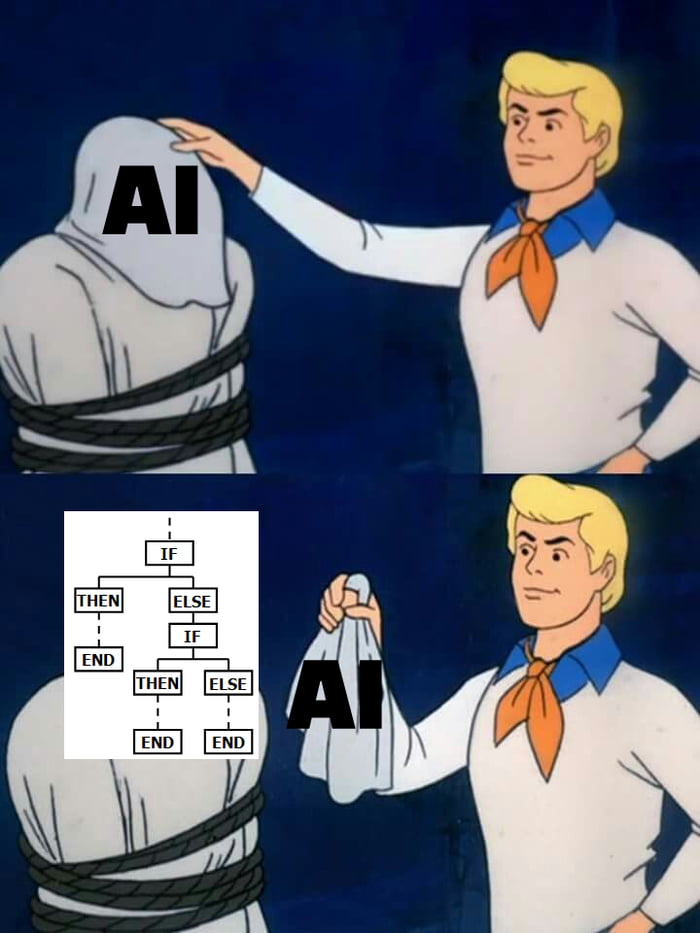

Writing millions of if-else statements in your code means you already know all of those conditions in advance and are writing a program to handle each situation.

AI, on the other hand, in simple words means a program that also takes care of scenarios it was NOT explicitly programmed for. The program learns from new conditions in its environment and evolves accordingly.
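A tiny illustration of that difference: one function below hard-codes a rule with if-else, while the other learns the rule (a single threshold, sometimes called a decision stump) from labeled examples. The data (hours studied vs. pass/fail) and all function names are hypothetical, chosen only for illustration.

```python
def hard_coded_rule(hours):
    # The programmer decides the cut-off in advance.
    if hours >= 5:
        return "pass"
    else:
        return "fail"


def learn_threshold(examples):
    """Pick the threshold that misclassifies the fewest labeled examples.

    examples: list of (hours, label) pairs, with label "pass" or "fail".
    Candidate thresholds are midpoints between consecutive distinct sorted values.
    """
    values = sorted({h for h, _ in examples})
    candidates = [(a + b) / 2 for a, b in zip(values, values[1:])]

    def errors(t):
        return sum((("pass" if h >= t else "fail") != label) for h, label in examples)

    return min(candidates, key=errors)


# Hypothetical training data: hours studied and whether the student passed.
data = [(1, "fail"), (2, "fail"), (3, "fail"), (6, "pass"), (7, "pass"), (8, "pass")]
threshold = learn_threshold(data)


def learned_rule(hours):
    # The cut-off comes from the data, not from the programmer.
    return "pass" if hours >= threshold else "fail"


print(threshold, learned_rule(4), learned_rule(5))
```

If new examples arrive that shift the boundary, calling `learn_threshold` again updates the rule without anyone rewriting the if-else logic.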

Okay, time for something serious. Let's take a look at a few amazing scenarios where people are using ML/AI to solve real-world problems:

- AI can make realistic phone calls: At the 2018 Google I/O event, Google demonstrated Duplex, which successfully called a hair salon and booked an appointment. The woman at the hair salon had no idea that she was talking to a computer.

- AI can find missing children: Nearly 3,000 missing children were traced in four days, thanks to the facial recognition system (FRS) software that the Delhi Police was using on a trial basis to track down such children.

- AI can look through walls: MIT researchers announced that they had built an AI system that could ‘see’ people moving behind walls. They hooked up a neural network to a Wi-Fi antenna and trained it to recognize the radio signals bouncing off people’s bodies. Over time, the network learned to recognize people and track their movements, even in the dark or when they moved behind walls.

- AI can diagnose disease just by looking at your eyes: To diagnose eye diseases, doctors often use an OCT scan, in which a beam of infrared light is bounced off the interior surfaces of the eye to create a 3D scan of the eye tissues. Google’s DeepMind division trained a neural network on a database of OCT scans and then let it compete with a panel of doctors. The AI’s diagnosis matched the doctors’ in 94% of all cases, an astounding result: it disagreed with the expert panel in only 6% of cases.

- AI can turn Doodles into Stunning, Photorealistic Landscapes: A deep learning model developed by NVIDIA Research can turn rough doodles into photorealistic masterpieces with breathtaking ease. The tool leverages generative adversarial networks, or GANs, to convert segmentation maps into lifelike images.

- AI is helping in traffic control: Together, artificial intelligence (AI) and drones are becoming the smart eyes of smart cities, with AI now helping to interpret and understand drone footage. In Melbourne, this kind of work is providing the Department of Transport's Road and Traffic Design team with essential data, including vehicle type, speed, and traffic flow at some of the city’s busiest roundabouts. The team is using the information to improve traffic modeling and to understand how drivers behave at roundabouts.

We are going to discuss both the theoretical concepts and the coding. You don't need a solid background in mathematics or Python coding. Those interested can brush up on topics like linear algebra, probability, statistical concepts, and an introduction to Python beforehand.

These sessions are designed to get you up and running with ML/deep learning concepts. The following are the topics we will cover in detail.

- Introduction to Machine Learning

  - Linear Regression

  - Logistic Regression

  - K-Means Clustering

  - Perceptron and Neural Networks

- Deep Learning

  - Backpropagation algorithm

  - Convolutional neural networks

  - Simple classification on the MNIST dataset using a CNN, followed by some real-life classification problems

  - FaceNet for face recognition

Depending on the response we get from you guys, we will add more advanced topics like:

- How to actually write a CNN from scratch

- How to train segmentation and object detection models (models like YOLO, RetinaNet, DeepLab)

- Generative adversarial networks (GANs)

- Create a public Git repository named "PIKTORML" and add a folder named "Assignment_0" to it.

- Add an Assignment_0.md file to this folder.

- Answer the following questions in the .md file you created:

  - What is the definition of Machine Learning according to Arthur Samuel? (Hint: use the Internet) (10 points)

  - What is the definition of Machine Learning according to Tom Mitchell? (Hint: use the Internet) (20 points)

  - Pose an example of a machine learning problem (anything you like) and explain it in your own words using the terms from Question 2 (Tom Mitchell's definition). (30 points)

- Push your changes to Git.

- Submit the link to your online "Assignment_0" folder through the 'Submit Assignment' link on Canvas (Instructure).
