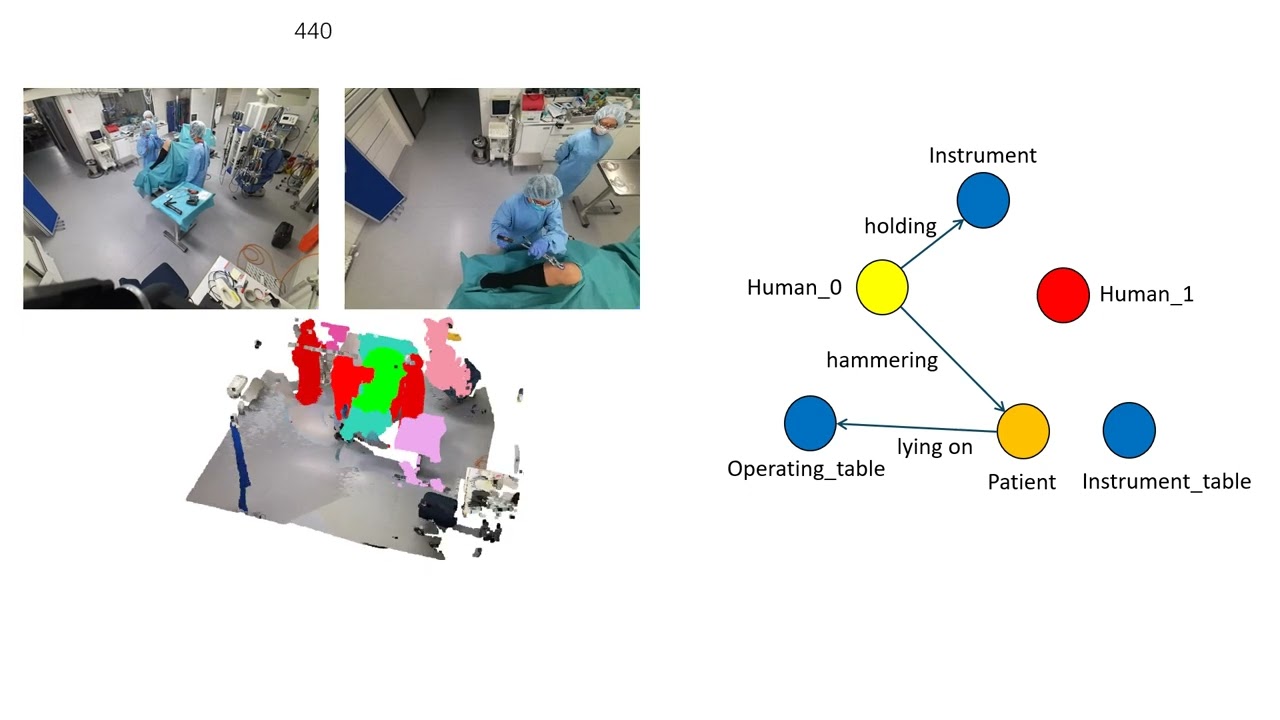

Tri-modal Confluence with Temporal Dynamics for Scene Graph Generation in Operating Rooms (MICCAI 2024)

Authors: Diandian Guo, Manxi Lin, Jialun Pei, He Tang, Yueming Jin, Pheng-Ann Heng.

The code is tested with CUDA 11.1 and PyTorch 1.9.0; change the versions below to match your setup.

conda create -n tritempor python=3.7

conda install pytorch==1.9.0 torchvision cudatoolkit=11.1 -c pytorch -c nvidia -y

conda install cython scipy

pip install pycocotools

pip install opencv-python

Open3D is required for PointNet++. Please follow the installation steps below to install Open3D.

export CUDA=cu111

pip install torch-scatter -f https://pytorch-geometric.com/whl/torch-1.9.0+${CUDA}.html

pip install torch-sparse -f https://pytorch-geometric.com/whl/torch-1.9.0+${CUDA}.html

pip install torch-cluster -f https://pytorch-geometric.com/whl/torch-1.9.0+${CUDA}.html

pip install torch-spline-conv -f https://pytorch-geometric.com/whl/torch-1.9.0+${CUDA}.html

pip install torch-geometric

pip install open3d

Then refer to https://github.com/erikwijmans/Pointnet2_PyTorch/tree/master for the PointNet++ installation. If you run into any problems, feel free to contact us!
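After the installation steps above, it can help to confirm that the packages are importable before moving on. The following is a minimal sketch and not part of the repository: the helper name `find_missing_modules` is hypothetical, and the list assumes the usual import names of the packages installed above (for example `cv2` for opencv-python). It only checks that each module can be located; it does not verify versions or CUDA support.

```python
from importlib.util import find_spec

# Import names of the packages installed above (assumed mapping from pip/conda names).
REQUIRED_MODULES = [
    "torch", "torchvision", "Cython", "scipy", "pycocotools", "cv2",
    "torch_scatter", "torch_sparse", "torch_cluster", "torch_spline_conv",
    "torch_geometric", "open3d",
]


def find_missing_modules(names=REQUIRED_MODULES):
    """Return the module names from `names` that cannot be located."""
    return [name for name in names if find_spec(name) is None]


if __name__ == "__main__":
    missing = find_missing_modules()
    if missing:
        print("Missing modules:", ", ".join(missing))
    else:
        print("All required modules were found.")
```

An empty result means every listed module was found in the active environment; PointNet++ is not covered here and should be checked according to its own repository.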

- Raw 4D-OR: https://forms.gle/9cR3H5KcFUr5VKxr9
- Processed 4D-OR: OneDrive

Download the processed 4D-OR data provided above. The data folder should be organized as follows:

TriTemp-OR/data/:

/images/: unzip 4d_or_images_multiview_reltrformat.zip

/points/: unzip points.zip

/infer/: unzip infer.zip

/train.json: from reltr_annotations_8.3.zip

/val.json: from reltr_annotations_8.3.zip

/test.json: from reltr_annotations_8.3.zip

/rel.json: from reltr_annotations_8.3.zip

Pretrained DETR weights: OneDrive.
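The data layout listed above can be checked with a few lines of Python before starting a run. This is a minimal sketch under stated assumptions: the helper name `missing_data_entries` is hypothetical, and the expected entries are taken directly from the folder listing above. It checks only that the folders and files exist, not their contents.

```python
from pathlib import Path

# Expected entries under the data folder, taken from the layout listed above.
EXPECTED_DIRS = ["images", "points", "infer"]
EXPECTED_FILES = ["train.json", "val.json", "test.json", "rel.json"]


def missing_data_entries(data_root):
    """Return the expected directories and files that are absent under data_root."""
    root = Path(data_root)
    missing = [d + "/" for d in EXPECTED_DIRS if not (root / d).is_dir()]
    missing += [f for f in EXPECTED_FILES if not (root / f).is_file()]
    return missing


if __name__ == "__main__":
    # "TriTemp-OR/data" follows the layout above; adjust to your checkout location.
    print(missing_data_entries("TriTemp-OR/data") or "Data folder looks complete.")
```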

python -m torch.distributed.launch --nproc_per_node={num_gpus} --use_env main.py --validate \
--num_hoi_queries 100 --batch_size 2 --lr 5e-5 --hoi_aux_loss --dataset_file or \
--detr_weights {pretrained DETR path} --output_dir {output_path} --group_name {output_group_name} \
--HOIDet --run_name {output_run_name} --epochs 100 --ann_path /data/4dor/ --img_folder /data/4dor/images \
--num_queries 20 --use_tricks_val --use_relation_tgt_mask --add_none --train_detr --use_pointsfusion \
--use_multiview_fusion --use_multiviewfusion_last_view2

python -m torch.distributed.launch --nproc_per_node={num_gpus} --use_env main.py --validate \
--num_hoi_queries 100 --batch_size 2 --lr 5e-5 --hoi_aux_loss --dataset_file or \
--detr_weights {pretrained DETR path} --output_dir {output_path} --group_name {output_group_name} \
--HOIDet --run_name {output_run_name} --epochs 100 --ann_path /data/4dor/ --img_folder /data/4dor/images \
--num_queries 20 --use_tricks_val --use_relation_tgt_mask --add_none --use_pointsfusion \
--use_multiview_fusion --use_multiviewfusion_last_view2 --resume {MODEL_WEIGHTS} --infer

Replace {MODEL_WEIGHTS} with the path to the pre-trained weights.
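The two commands above differ only in their last flags: the first passes `--train_detr`, while the second passes `--resume {MODEL_WEIGHTS} --infer`. The sketch below assembles either command from Python so the placeholders are filled in one place. It is not part of the repository: `build_command` is a hypothetical helper, every flag is copied from the commands above, the example paths are made up, and it assumes `main.py` does not depend on the order of its flags.

```python
import shlex

# Flags shared by both commands above, copied from the source.
COMMON_FLAGS = [
    "--validate", "--num_hoi_queries", "100", "--batch_size", "2", "--lr", "5e-5",
    "--hoi_aux_loss", "--dataset_file", "or", "--HOIDet", "--epochs", "100",
    "--ann_path", "/data/4dor/", "--img_folder", "/data/4dor/images",
    "--num_queries", "20", "--use_tricks_val", "--use_relation_tgt_mask", "--add_none",
    "--use_pointsfusion", "--use_multiview_fusion", "--use_multiviewfusion_last_view2",
]


def build_command(num_gpus, detr_weights, output_dir, group_name, run_name,
                  model_weights=None):
    """Build the argument list; pass model_weights to get the --infer variant."""
    cmd = ["python", "-m", "torch.distributed.launch",
           f"--nproc_per_node={num_gpus}", "--use_env", "main.py"]
    cmd += COMMON_FLAGS
    cmd += ["--detr_weights", detr_weights, "--output_dir", output_dir,
            "--group_name", group_name, "--run_name", run_name]
    if model_weights is None:
        cmd.append("--train_detr")                     # first command above
    else:
        cmd += ["--resume", model_weights, "--infer"]  # second command above
    return cmd


if __name__ == "__main__":
    # Hypothetical paths and names, for illustration only.
    cmd = build_command(2, "weights/detr.pth", "outputs", "tritemp", "run1")
    print(" ".join(shlex.quote(arg) for arg in cmd))
```

The returned list can be passed to `subprocess.run` as is, which avoids shell quoting issues with paths that contain spaces.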

This work is based on:

Thanks for their great work!

If this helps you, please cite this work:

@inproceedings{guo2024tri,
  title={Tri-modal Confluence with Temporal Dynamics for Scene Graph Generation in Operating Rooms},
  author={Guo, Diandian and Lin, Manxi and Pei, Jialun and Tang, He and Jin, Yueming and Heng, Pheng-Ann},
  booktitle={MICCAI},
  year={2024},
  organization={Springer}
}