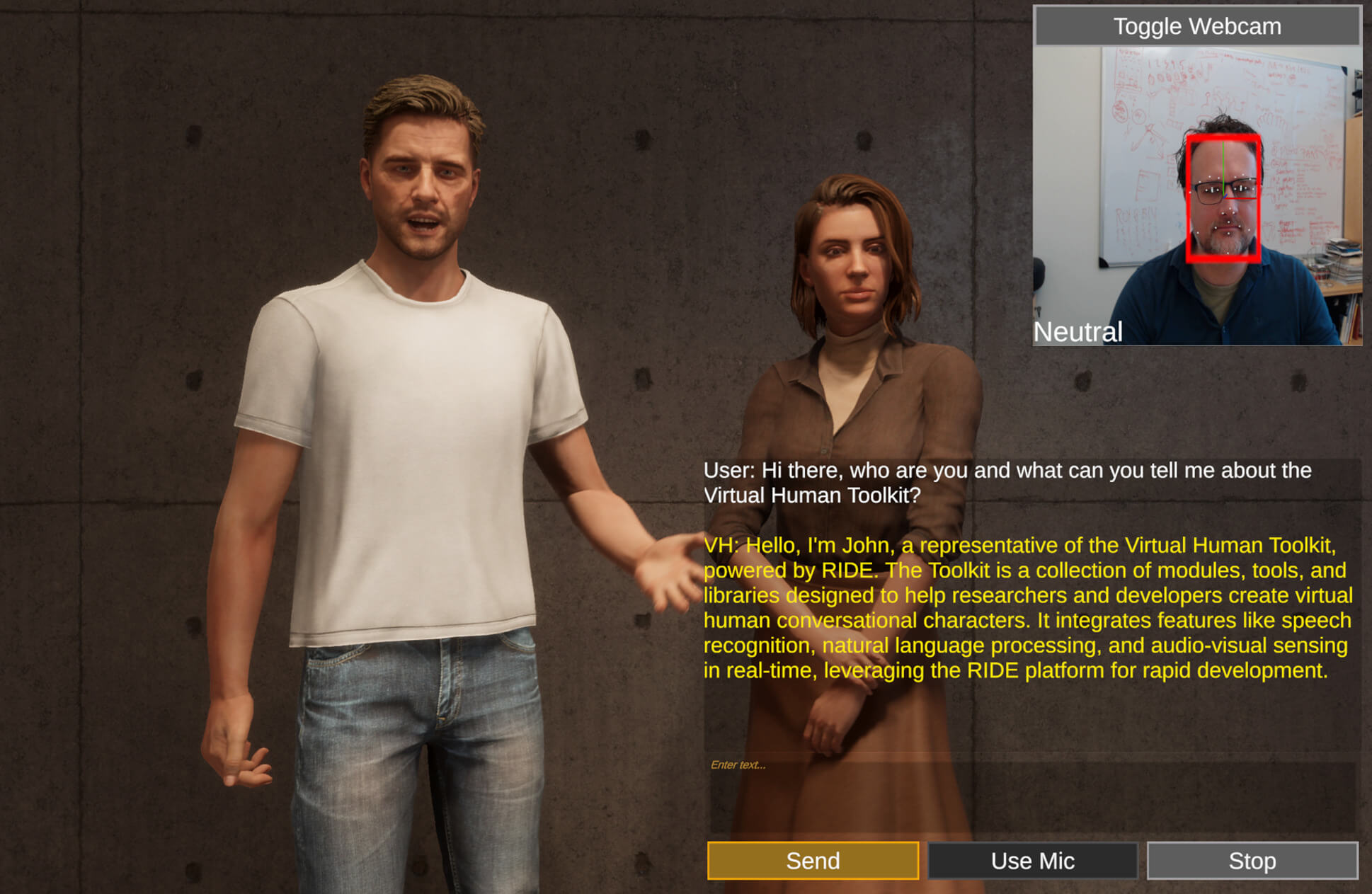

The Virtual Human Toolkit (VHToolkit) is a research and development platform for the creation of virtual humans, also called embodied conversational agents or socially intelligent agents. The VHToolkit enables the creation and deployment of real-time interactive characters that perceive end-users and respond both verbally and nonverbally.

The VHToolkit has the following features:

- Integrated framework; the VHToolkit combines audio-visual sensing and speech recognition with natural language processing, text-to-speech synthesis, and nonverbal behavior generation within a single framework.

- Flexible architecture; choose among multiple technology vendors and open-source solutions, running either as cloud services or locally (e.g., OpenAI ChatGPT, Anthropic Claude, AWS Lex V2, or RASA).

- Extendable API; add your own technology by implementing the principled API.

- Multi-platform support; the VHToolkit supports Windows, macOS, Linux, Android, iOS, and AR/VR.

- Custom character creation; create your own character with Character Creator and import it into the VHToolkit to make it interactive. Requires a separate license.

- Personalized avatar creation; use Reallusion’s Character Creator and Headshot to create avatars based on real people. Optionally clone their voice with ElevenLabs. Requires separate licenses.

The VHToolkit is powered by the Rapid Integration & Development Environment (RIDE) platform and targets the Unity game engine.

Detailed documentation can be found in the Wiki section of this GitHub repository.

The VHToolkit is licensed under the USC-RL v3.0 license, a permissive license for academic and personal use. For commercial or government-purpose use, please contact us.

When publishing work that uses the VHToolkit, please cite one of the following papers:

    @inproceedings{hartholt2013all,
      title={All together now: Introducing the virtual human toolkit},
      author={Hartholt, Arno and Traum, David and Marsella, Stacy C and Shapiro, Ari and Stratou, Giota and Leuski, Anton and Morency, Louis-Philippe and Gratch, Jonathan},
      booktitle={International Workshop on Intelligent Virtual Agents},
      pages={368--381},
      year={2013},
      organization={Springer}
    }

    @inproceedings{hartholt2022re,
      title={Re-architecting the virtual human toolkit: towards an interoperable platform for embodied conversational agent research and development},
      author={Hartholt, Arno and Fast, Ed and Li, Zongjian and Kim, Kevin and Leeds, Andrew and Mozgai, Sharon},
      booktitle={Proceedings of the 22nd ACM International Conference on Intelligent Virtual Agents},
      pages={1--8},
      year={2022}
    }