-

Notifications

You must be signed in to change notification settings - Fork 44

Fix directional light shadows by implementing get_frustum_corners properly #180

New issue

Have a question about this project? Sign up for a free GitHub account to open an issue and contact its maintainers and the community.

By clicking “Sign up for GitHub”, you agree to our terms of service and privacy statement. We’ll occasionally send you account related emails.

Already on GitHub? Sign in to your account

Conversation

…perly XrProjection now stores the FOV parameters instead of the full projection matrix. Calculation of the projection matrix has been moved to camera.rs Fixes awtterpip#179

|

thanks for the pr but this approach sadly wouldn't work for a future WebXR impl since WebXR returns a whole projection matrix (for whatever reason). i would be open to merge this for the time being if there is no relatively easy way to do this while keeping the matrix construction in the backend |

Huh. Damnit. |

|

So according to this issue, there are real hardware reasons for this: immersive-web/webxr#461. I figured I might as well figure out the math for this properly and make it all work, but uhh... The thing that surprises me is that OpenXR doesn't seem to support this at all. I was completely unable to find anything in the specification for this, not even in any extensions. OpenVR however does have an API (seemingly). This means OpenXR literally can't correctly represent some headsets appropriately?? That can't be right, surely?? |

|

@PJB3005 I'm not sure what devices it's problematic for. Apart from the mentioned shear on older HoloLens matrices, I do see someone claiming it doesn't work with some devices, but they later retract that claim in another issue. However, WebXR did eventually resolve to allow matrices with shear, so yeah, we can't represent all allowed matrices with just an FoV. Though... if we can't handle shear correctly, we may just have to take this advice:

|

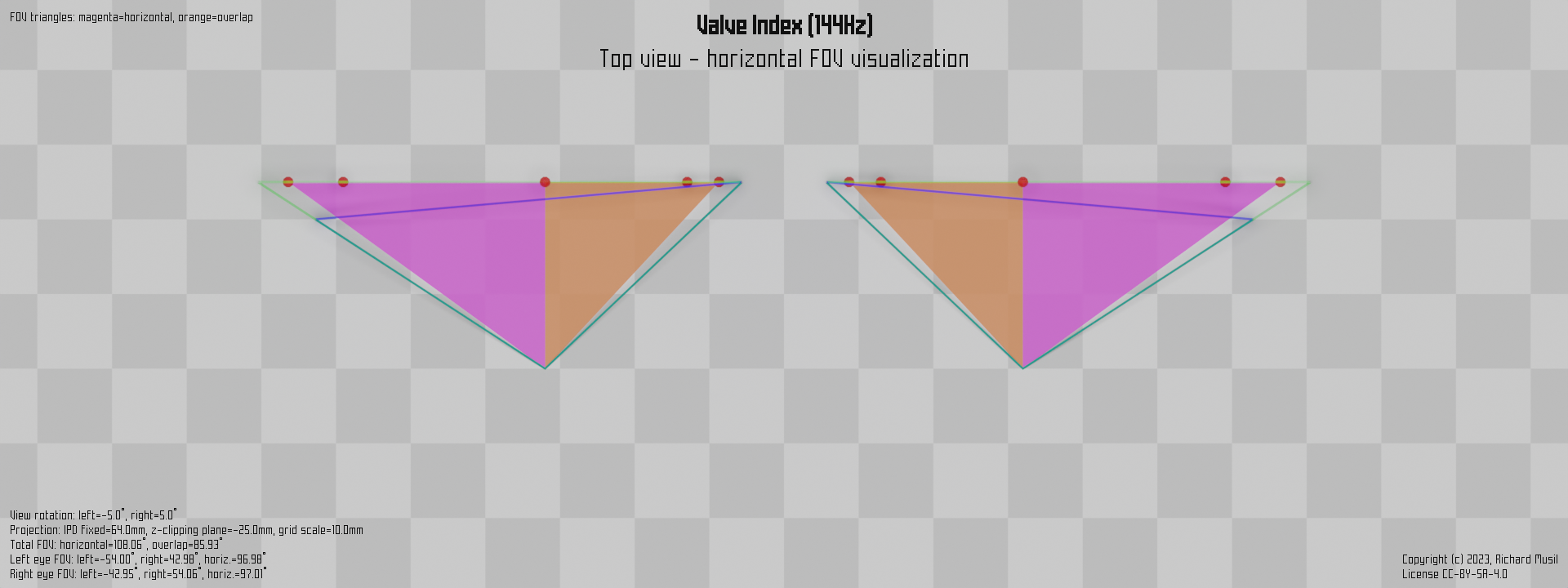

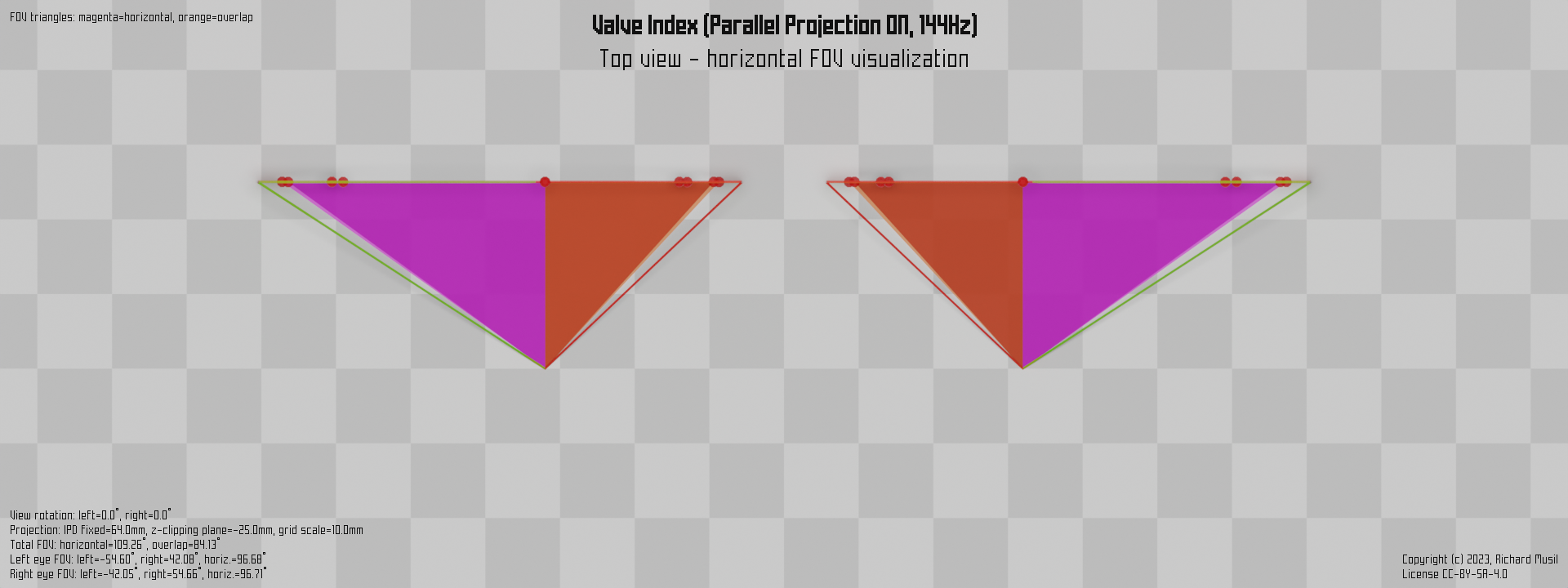

I'm doing a bunch of 4 AM research (most of which devolves into looking at forum threads from YEARS ago). According to this database, the Valve Index1 and Pimax (mentioned in the WebXR issue2) also have this sheared projection. If I'm not mixing things up here, another term for this is "canted lenses" or "non-parallel projection". If an app only supports "parallel" projection, the XR runtime can reproject these images from a larger image, but this results in wasted GPU power. Apparently on Pimax headsets with their harsh angle this is like 30%, which is absolutely significant. So ideally apps would support this. But this is where it gets stupid... I mentioned above that OpenVR has an API for getting a projection matrix. So it can at least theoretically work with these kinds of HMDs (I mean, I'd fucking hope so, it's Valve's own API and headset), furthermore the API allows specifying the projection matrix used when submitting an image (it's via C++ inheritance, yuck, I almost wrote that it can't here). However, there's some missing pieces:

This leaves me with two theories. I currently do not know which is true, I have more research to do tomorrow:

OpenXR supports these headsets properly in neither case which is just appalling. In the former case there's a plausible reason for this crate to also get OpenVR support, however if it's the latter case then it's unlikely to provide much benefit to users unless they have a Pimax. Footnotes |

|

And then, of course, immediately after I check the OpenVR commit history one last time and see this: Genuinely, no idea what this implies, and it shouldn't even be something we'd have to rely on since it's from 2023, which is way too late. |

|

I'm sorry, curse of sometimes doing this on mobile. Didn't mean to close the issue, and I wish I'd put more explanation in the above comment, because:

I am pretty sure this is incorrect. Canted (Valve Index) and rotated (Quest 3) displays can easily be represented by a pose for each eye, plus up/down/left/right bounds on the FoV that are allowed to be non-symmetrical. The reason canting was a problem was partly due to driver support making assumptions about the view matrices, which was fixed with later APIs. I believe more advanced configurations like the rotation on the Quest 3 also pose challenges for things like combined frustum culling, since naïvely combining the frustum in either direction is probably neither efficient nor symmetrical.) My understanding is that shear refers to including a shear in the view matrix directly, i.e. making the view plane a parallelogram, which I don't think is possible to emulate accurately with an off-axis matrix due to perspective effects, but it's bending my brain a little to even try. I'm only half-awake right now but I'd be happy to go over this much later today, ideally in a more synchronous manner initially (maybe on the discord?) but I do think it's valuable to synthesise that back here in the interests of the open web. (edit: typo) |

|

Alright, so. I think I got it all noted down in my head. The Index and Pimax' canted lenses still have normal perspective projection matrices, but they are rotated. This means the planes aren't parallel to each other which can cause various shader issues even if the basic game rendering math does work. All of this can be described just fine with OpenXR, as the rotation is part of the pose returned along with the FOV parameters. The reason I got confused is likely because:

So all things considered, it's pretty simple, OpenXR supports it nicely, OpenVR is simple. Now about the SteamVR with Index: I think it's likely that Valve just didn't see value in the non-parallel-projection feature with all the risk of hard-to-QA-artifacts it causes for game devs. You used to be able to force the Index onto exposing the real projection matrices via a SteamVR config key, but from my reading this broke around the SteamVR 2.0 release in 2023 (this is related to the OpenVR property I linked earlier). It now seems to be solely controlled by the JSON configuration stored inside the Index. Pimax likely can still have it by configuring that separately, but Index is completely locked out. Out of curiosity, I did manage to re-enable it for one session by hacking SteamVR with a debugger, but obviously I can't recommend that for anyone. |

|

TL;DR "where does that leave this PR" No idea. Maybe the math isn't too hard to figure out in the general case, but otherwise it seems plausibly reasonable for us to just assume that sheared projection matrices don't actually exist in practice anymore. If they did, I'd expect there to at least be an OpenXR extension. |

|

could we move the matrix calculation back into bevy_mod_openxr and then add a new corners field to the XrProjection? that way we can figure out how to get the corners from the webxr matrix later |

No, because the corners need to be calculated based on the depth values specified in the cascading shadow config. I guess we could acquire that, but it'd likely be a bad idea. Regardless, my intuition says that it shouldn't be difficult to extend this math to the general case from an arbitrary projection matrix. The thing I'm just trying to figure out now is if "sheared projection matrices" even exist? After re-reading the WebXR thread armed with more knowledge, I came to the conclusion that half of it may be confusion/misinformation. One person says that "you can just put this in the view transformation" but the Magic Leap guy says "no seriously we need this" and never (publicly) elaborates why. Except I can find no definition for "sheared projection matrix" that seems to be anything other than an off-axis asymmetric projection matrix (which we already support for OpenXR). The only possible lead I have is that they mean a projection scenario where the screen isn't a rectangle for some reason (like a projector pointed at an angled wall), but I'm not actually sure. Magic Leap loudly supports OpenXR in their docs, and I still cannot see any extensions listed that would be necessary to support custom projection matrices that were claimed necessary in the WebXR thread. So uh, is this just all nonsense? I seriously don't know right now. |

|

Why is calculating the corners from a view projection wrong? And how does the shadow cascade configs apply to this? |

Because we don't know what kind of near/far values the cascading shadow code is gonna request while we're caching them into the |

|

I would agree that giving a projection matrix is likely unnecessary and worse than just poses and fov, but sadly webxr exists and returns a matrix. I feel like if we merge this in the current state, once we impl webxr we either have to calculate the fov values from a matrix or we revert most of this pr. My entire reasoning is that WebXR made the (imo bad) choice of only providing a matrix, other than that i have no issue |

Given that I still can't figure out what "Sheared projection matrix" would actually imply in WebXR terms, I don't think we could even test and make sure it works properly for the rest of the engine/library. So I agree with the former, assuming decomposing the matrix isn't too hard? If we do want to "handle it properly", I don't think the math is that hard though1, so I could probably do it if we want. Footnotes

|

|

If i understand correctly we use an infinite far plane, so i would expect the mapping to be nonlinear, but i could also be completely wrong i have pretty much zero experience with these kind of things |

|

It is non linear, but I'm not sure how our projection matrix breaks shadows. Does a normal asymmetrical projection break them? |

oh I don't have much experience either, don't worry. On the infinite far plane issue: uhhh yeah that might be an issue, but wait, does WebXR even allow us to specify that? |

It has nothing to do with the projection matrix itself. The problem is that we are not using the |

Oh. Hmm, that sounds... very fixable? |

This PR aims to do the same as awtterpip#180, but hopefully without making future WebXR compat more annoying. get_frustum_corners() is now implemented properly, directly from the projection matrix. I believe it should work with the existing OpenXR code, but I cannot test this with any WebXR implementations with "sheared projection matrices", because I am continuing to doubt whether that even exists. I also added unit tests, which involved a decent amount of code shuffling regardless. The original implementation from awtterpip#180 is left in as a control in the test code.

|

a fix for this issue has been merged with #181 |

XrProjectionnow stores the FOV parameters instead of the full projection matrix. Calculation of the projection matrix has been moved to camera.rsFixes #179