> [!IMPORTANT]
> This package only works with self-hosted n8n installations. It is not compatible with n8n Cloud.

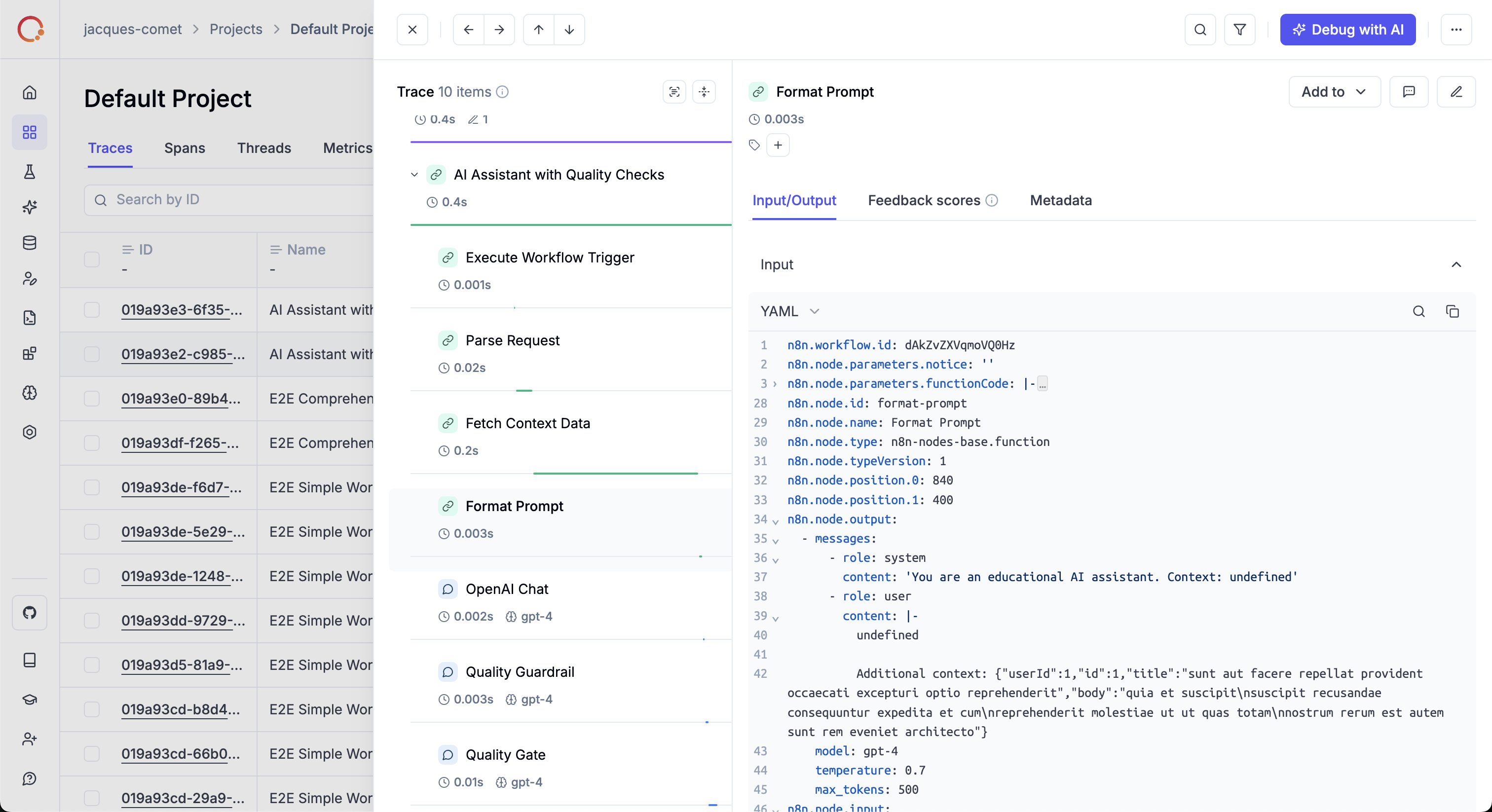

OpenTelemetry instrumentation for n8n workflows. Automatically traces workflow executions and node operations using the standard OpenTelemetry SDK.

- 🔍 Automatic tracing of workflow executions and individual node operations

- 📊 Standard OpenTelemetry instrumentation using the official Node.js SDK

- 🎯 Zero-code setup via n8n's hook system

- 🔌 OTLP-compatible: works with any OpenTelemetry-compatible backend

- ⚙️ Configurable I/O capture, node filtering, and more

- 🚀 Works with Docker or bare metal

- 💻 Node.js ≥ 18

The fastest way to get started is with Docker Compose:

```bash
# Clone and navigate to the example
git clone https://github.com/comet-ml/n8n-observability.git
cd n8n-observability/examples/docker-compose

# Set your Opik API key (get one free at https://www.comet.com/signup)
export OPIK_API_KEY=your_api_key_here

# Build and run
docker-compose up --build
```

Open http://localhost:5678, create a workflow, and see traces in your Comet ML dashboard!

📖 See examples/docker-compose/ for full documentation.

Create a custom Dockerfile that installs the package globally:

```dockerfile
FROM n8nio/n8n:latest

USER root
RUN npm install -g n8n-observability
ENV EXTERNAL_HOOK_FILES=/usr/local/lib/node_modules/n8n-observability/dist/hooks.cjs
USER node
```

Then run with your OTLP configuration:

```yaml
# docker-compose.yml
services:
  n8n:
    build: .
    environment:
      # Comet ML / Opik
      OTEL_EXPORTER_OTLP_ENDPOINT: "https://www.comet.com/opik/api/v1/private/otel"
      OTEL_EXPORTER_OTLP_HEADERS: "Authorization=${OPIK_API_KEY},Comet-Workspace=default"
      N8N_OTEL_SERVICE_NAME: "my-n8n"
    volumes:
      - n8n_data:/home/node/.n8n
    ports:
      - "5678:5678"

volumes:
  n8n_data:
```

To run n8n directly on the host (bare metal), install the package globally and point n8n at its hook file:

```bash
# Install globally
npm install -g n8n-observability

# Configure OTLP endpoint (Comet ML example)
export OTEL_EXPORTER_OTLP_ENDPOINT=https://www.comet.com/opik/api/v1/private/otel
export OTEL_EXPORTER_OTLP_HEADERS='Authorization=<your-api-key>,Comet-Workspace=default'
export N8N_OTEL_SERVICE_NAME=my-n8n
export EXTERNAL_HOOK_FILES=$(npm root -g)/n8n-observability/dist/hooks.cjs

# Start n8n
n8n start
```

You can also initialize observability programmatically before starting n8n:

```typescript
import { setupN8nObservability } from 'n8n-observability';

await setupN8nObservability({
  serviceName: 'my-n8n',
  debug: true,
});

// Then start n8n as usual
```

The following environment variables are supported:

| Variable | Purpose | Default |
|---|---|---|
| `OTEL_EXPORTER_OTLP_ENDPOINT` | OTLP exporter endpoint | — |
| `OTEL_EXPORTER_OTLP_HEADERS` | OTLP headers (e.g., auth tokens) | — |
| `N8N_OTEL_SERVICE_NAME` | Service name for telemetry | `n8n` |
| `N8N_OTEL_NODE_INCLUDE` | Only trace listed nodes (comma-separated) | — |
| `N8N_OTEL_NODE_EXCLUDE` | Exclude listed nodes (comma-separated) | — |
| `N8N_OTEL_CAPTURE_INPUT` | Capture node input data | `true` |
| `N8N_OTEL_CAPTURE_OUTPUT` | Capture node output data | `true` |
| `N8N_OTEL_AUTO_INSTRUMENT` | Enable HTTP/Express instrumentation | `false` |
| `N8N_OTEL_METRICS` | Enable metrics collection | `false` |
| `N8N_OTEL_DEBUG` | Enable debug logging | `false` |
| `EXTERNAL_HOOK_FILES` | Path to `hooks.cjs` (set automatically) | — |
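As a rough illustration of how these variables could be interpreted, the sketch below parses them into a typed config object. It is a hypothetical helper, not part of the `n8n-observability` API: `parseOtelConfig`, `OtelConfig`, and the lenient boolean and list handling are assumptions, and the defaults simply mirror the table. Two details follow the OpenTelemetry specification: `OTEL_EXPORTER_OTLP_HEADERS` is a comma-separated list of `key=value` pairs with percent-encoded values, and for OTLP/HTTP the traces URL is the base `OTEL_EXPORTER_OTLP_ENDPOINT` with `v1/traces` appended, which is presumably why endpoints such as `http://jaeger:4318` carry no signal path.

```typescript
// Hypothetical config shape; these names are illustrative, not exports of n8n-observability.
interface OtelConfig {
  endpoint?: string;
  tracesUrl?: string;
  headers: Record<string, string>;
  serviceName: string;
  nodeInclude: string[];
  nodeExclude: string[];
  captureInput: boolean;
  captureOutput: boolean;
  autoInstrument: boolean;
  metrics: boolean;
  debug: boolean;
}

type Env = Record<string, string | undefined>;

// Accepts "true"/"false" (case-insensitive); anything else keeps the default.
function parseBool(value: string | undefined, fallback: boolean): boolean {
  const v = value?.trim().toLowerCase();
  if (v === "true") return true;
  if (v === "false") return false;
  return fallback;
}

// Splits a comma-separated list, trimming whitespace and dropping empty items.
function parseList(value: string | undefined): string[] {
  if (!value) return [];
  return value.split(",").map((s) => s.trim()).filter((s) => s.length > 0);
}

// Parses "key1=value1,key2=value2" with percent-encoded values, per the OTel spec.
function parseHeaders(value: string | undefined): Record<string, string> {
  const headers: Record<string, string> = {};
  for (const pair of parseList(value)) {
    const eq = pair.indexOf("=");
    if (eq <= 0) continue; // skip entries without a key
    const key = pair.slice(0, eq).trim();
    const raw = pair.slice(eq + 1).trim();
    try {
      headers[key] = decodeURIComponent(raw);
    } catch {
      headers[key] = raw; // malformed percent-encoding: keep the raw value
    }
  }
  return headers;
}

// OTLP/HTTP: the traces URL is the base endpoint plus "v1/traces".
function deriveTracesUrl(endpoint: string): string {
  return endpoint.endsWith("/") ? `${endpoint}v1/traces` : `${endpoint}/v1/traces`;
}

// Defaults mirror the configuration table.
function parseOtelConfig(env: Env): OtelConfig {
  const endpoint = env.OTEL_EXPORTER_OTLP_ENDPOINT?.trim() || undefined;
  return {
    endpoint,
    tracesUrl: endpoint ? deriveTracesUrl(endpoint) : undefined,
    headers: parseHeaders(env.OTEL_EXPORTER_OTLP_HEADERS),
    serviceName: env.N8N_OTEL_SERVICE_NAME?.trim() || "n8n",
    nodeInclude: parseList(env.N8N_OTEL_NODE_INCLUDE),
    nodeExclude: parseList(env.N8N_OTEL_NODE_EXCLUDE),
    captureInput: parseBool(env.N8N_OTEL_CAPTURE_INPUT, true),
    captureOutput: parseBool(env.N8N_OTEL_CAPTURE_OUTPUT, true),
    autoInstrument: parseBool(env.N8N_OTEL_AUTO_INSTRUMENT, false),
    metrics: parseBool(env.N8N_OTEL_METRICS, false),
    debug: parseBool(env.N8N_OTEL_DEBUG, false),
  };
}

// Usage with a literal environment (in Node.js you would pass process.env).
const example = parseOtelConfig({
  OTEL_EXPORTER_OTLP_ENDPOINT: "https://api.honeycomb.io",
  OTEL_EXPORTER_OTLP_HEADERS: "x-honeycomb-team=abc123",
  N8N_OTEL_NODE_EXCLUDE: "Wait, Set",
});
console.log(example.tracesUrl); // https://api.honeycomb.io/v1/traces
```

Taking the environment as a plain object rather than reading `process.env` directly keeps the parser easy to test with literal inputs.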

```bash
# Only trace specific nodes
export N8N_OTEL_NODE_INCLUDE="OpenAI,HTTP Request"

# Exclude noisy nodes
export N8N_OTEL_NODE_EXCLUDE="Wait,Set"

# Disable I/O capture for privacy
export N8N_OTEL_CAPTURE_INPUT=false
export N8N_OTEL_CAPTURE_OUTPUT=false
```

Works with any OpenTelemetry-compatible backend:

```bash
# Comet ML / Opik (cloud)
export OTEL_EXPORTER_OTLP_ENDPOINT=https://www.comet.com/opik/api/v1/private/otel
export OTEL_EXPORTER_OTLP_HEADERS='Authorization=<your-api-key>,Comet-Workspace=default'

# Self-hosted Opik
export OTEL_EXPORTER_OTLP_ENDPOINT=http://localhost:5173/api/v1/private/otel

# Jaeger
export OTEL_EXPORTER_OTLP_ENDPOINT=http://jaeger:4318

# Grafana Tempo
export OTEL_EXPORTER_OTLP_ENDPOINT=http://tempo:4318

# Honeycomb
export OTEL_EXPORTER_OTLP_ENDPOINT=https://api.honeycomb.io
export OTEL_EXPORTER_OTLP_HEADERS='x-honeycomb-team=<api-key>'

# Generic OTLP collector
export OTEL_EXPORTER_OTLP_ENDPOINT=http://otel-collector:4318
```

Spans carry the following attributes.

**Workflow spans:**

- `n8n.workflow.id` - Workflow ID
- `n8n.workflow.name` - Workflow name
- `n8n.span.type` - `"workflow"`

**Node spans:**

- `n8n.node.type` - Node type (e.g., `n8n-nodes-base.httpRequest`)
- `n8n.node.name` - Node name
- `n8n.span.type` - `"llm"`, `"prompt"`, `"evaluation"`, or undefined
- `n8n.node.input` - JSON input (if capture enabled)
- `n8n.node.output` - JSON output (if capture enabled)
- `gen_ai.system` - AI provider (e.g., `openai`, `anthropic`)
- `gen_ai.request.model` - Model name (e.g., `gpt-4`)
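To make the node-span attributes concrete, the sketch below assembles them for a single executed node. It is hypothetical and not the package's implementation: `NodeRun`, `CaptureOptions`, `shouldTrace`, and `buildNodeSpanAttributes` are illustrative names, and it assumes that `N8N_OTEL_NODE_INCLUDE`/`N8N_OTEL_NODE_EXCLUDE` match node names, that an empty include list traces everything, and that exclusion wins over inclusion. Only the attribute keys are taken from the lists above.

```typescript
// Span attribute values are primitives (simplified: arrays are not used here).
type AttrValue = string | number | boolean;

// Hypothetical record of one executed node; not an n8n or package type.
interface NodeRun {
  type: string; // e.g. "n8n-nodes-base.httpRequest"
  name: string; // node name as shown in the workflow editor
  spanType?: "llm" | "prompt" | "evaluation";
  input?: unknown;
  output?: unknown;
  aiSystem?: string; // e.g. "openai"
  aiModel?: string; // e.g. "gpt-4"
}

// Hypothetical options mirroring N8N_OTEL_NODE_INCLUDE/EXCLUDE and N8N_OTEL_CAPTURE_*.
interface CaptureOptions {
  include: string[];
  exclude: string[];
  captureInput: boolean;
  captureOutput: boolean;
}

// Assumptions: nodes are matched by name, an empty include list traces everything,
// and exclude takes precedence over include.
function shouldTrace(nodeName: string, opts: CaptureOptions): boolean {
  if (opts.exclude.includes(nodeName)) return false;
  return opts.include.length === 0 || opts.include.includes(nodeName);
}

// Returns the attributes for one node span, or undefined if the node is filtered out.
function buildNodeSpanAttributes(
  run: NodeRun,
  opts: CaptureOptions,
): Record<string, AttrValue> | undefined {
  if (!shouldTrace(run.name, opts)) return undefined;
  const attrs: Record<string, AttrValue> = {
    "n8n.node.type": run.type,
    "n8n.node.name": run.name,
  };
  // Ordinary nodes leave n8n.span.type unset.
  if (run.spanType) attrs["n8n.span.type"] = run.spanType;
  // Attributes cannot hold nested objects, so I/O is stored as a JSON string.
  if (opts.captureInput && run.input !== undefined) {
    attrs["n8n.node.input"] = JSON.stringify(run.input);
  }
  if (opts.captureOutput && run.output !== undefined) {
    attrs["n8n.node.output"] = JSON.stringify(run.output);
  }
  if (run.aiSystem) attrs["gen_ai.system"] = run.aiSystem;
  if (run.aiModel) attrs["gen_ai.request.model"] = run.aiModel;
  return attrs;
}

// Usage: an HTTP Request node traced with input capture disabled.
const httpAttrs = buildNodeSpanAttributes(
  {
    type: "n8n-nodes-base.httpRequest",
    name: "HTTP Request",
    input: { url: "https://example.com" },
    output: { status: 200 },
  },
  { include: [], exclude: ["Wait", "Set"], captureInput: false, captureOutput: true },
);
console.log(httpAttrs);
```

Serializing input and output to JSON strings is one reasonable choice here, since OpenTelemetry attribute values must be primitives or arrays of primitives.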

Check that the package is installed:

```bash
node -e "console.log(require.resolve('n8n-observability/hooks'))"
```

Expected startup logs:

```text
[otel-setup] OpenTelemetry initialized: my-n8n (OTLP export enabled, langchain (manual), n8n spans only)
[n8n-observability] observability ready and patches applied
```

| Example | Description |
|---|---|
| `examples/docker-compose/` | Production-ready Docker setup with Comet ML |

```bash
# Install dependencies
pnpm install

# Build
pnpm build

# Run e2e tests
pnpm e2e
```

MIT. See LICENSE for details.

> [!NOTE]
> This project is a fork of LangWatch's n8n-observability. We're grateful for their excellent work in creating the original implementation. We've extended their code to work with any OpenTelemetry-compatible backend, making it a universal solution for n8n observability.