A console TUI/CLI to query OpenAI models

Shell-like interface to send queries to OpenAI models.

You need an OpenAI API key, which can be generated here: https://beta.openai.com/account/api-keys

Just run the script:

$ python askgpt.py

On first use, you will be asked for your API key. You can then set the maximum number of tokens, the model and the temperature of your requests with the commands:

> tokens

> temperature

> model

It is also possible to pass an argument to a command:

> tokens 1024

> temperature 0.6

> model text-curie-001

To set another API key, use the api command.
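The commands above behave like a small shell. Below is a minimal sketch of how such a shell could be built on Python's standard `cmd` module (listed among the required modules). The class name `AskShell`, the default values and the `queries` list are hypothetical, and queries are only collected here instead of being sent to OpenAI.

```python
import cmd


class AskShell(cmd.Cmd):
    """Hypothetical sketch: known commands set parameters, any other line is a query."""

    def __init__(self, model="text-curie-001", tokens=256, temperature=0.6):
        super().__init__()
        self.model = model
        self.tokens = tokens
        self.temperature = temperature
        self.prompt = f"{self.model}> "  # the prompt shows the selected model
        self.queries = []  # sketch only: queries are stored, not sent

    def do_tokens(self, arg):
        """tokens [N]: show or set the maximum number of tokens."""
        if arg:
            self.tokens = int(arg)
        print(f"tokens = {self.tokens}")

    def do_temperature(self, arg):
        """temperature [T]: show or set the sampling temperature."""
        if arg:
            self.temperature = float(arg)
        print(f"temperature = {self.temperature}")

    def do_model(self, arg):
        """model [NAME]: show or set the model and update the prompt."""
        if arg:
            self.model = arg.strip()
            self.prompt = f"{self.model}> "
        print(f"model = {self.model}")

    def default(self, line):
        # cmd calls default() for any line that is not a known command,
        # so a free-form question ends up here as a query.
        self.queries.append(line)

    def emptyline(self):
        # Override cmd's default behaviour of repeating the last command.
        pass

    def do_EOF(self, arg):
        """Exit on Ctrl-D."""
        return True
```

Calling `AskShell().cmdloop()` starts the interactive loop. `cmd` loads `readline` when it is available, which is what makes commands and queries recallable with the arrow keys.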

Then just ask your question to the selected model:

text-curie-001> Who is Marie Curie ?

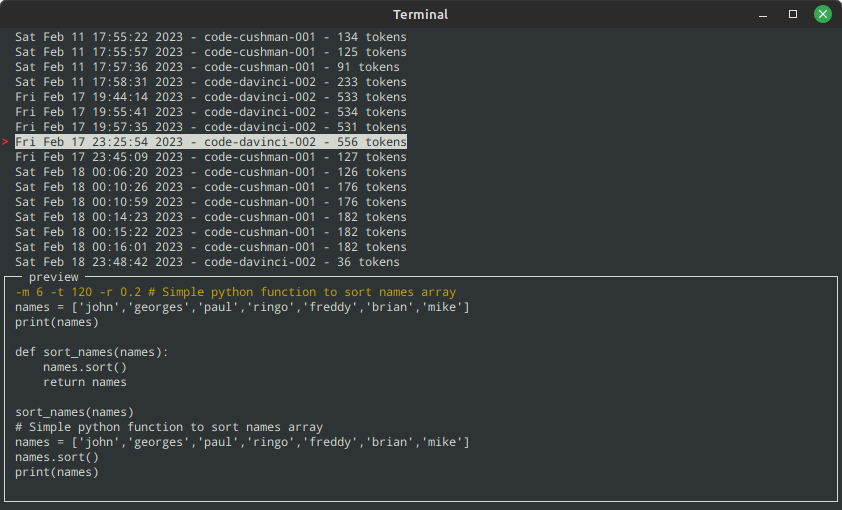
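Each query ends up as an HTTP request. The sketch below assumes the legacy OpenAI Completions endpoint (`/v1/completions`), which served models such as `text-curie-001` and `text-davinci-003`; it is not necessarily how askgpt.py builds its request, and the helper names `build_payload` and `ask` are hypothetical.

```python
import requests

# Assumption: the legacy Completions endpoint used by text-* models.
COMPLETIONS_URL = "https://api.openai.com/v1/completions"


def build_payload(prompt, model="text-curie-001", tokens=256, temperature=0.6):
    """Request body built from the current shell settings."""
    return {
        "model": model,
        "prompt": prompt,
        "max_tokens": tokens,
        "temperature": temperature,
    }


def ask(api_key, prompt, **settings):
    """Send one query and return the text of the first choice."""
    response = requests.post(
        COMPLETIONS_URL,
        headers={"Authorization": f"Bearer {api_key}"},
        json=build_payload(prompt, **settings),
        timeout=60,
    )
    response.raise_for_status()  # surface HTTP errors such as an invalid key
    return response.json()["choices"][0]["text"].strip()
```

For example, `ask(api_key, "Who is Marie Curie ?", model="text-curie-001", tokens=1024, temperature=0.6)` would return the answer as a string.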

Previous queries and responses are available with the history command:

> history

In CLI mode, commands and queries can be passed directly as arguments:

$ askgpt.py model text-davinci-003

$ askgpt.py tokens 256

$ askgpt.py info

$ askgpt.py history

$ askgpt.py -t 120 -r 0.2 -m 2 "What is the capital of India ?"

Options are described by invoking:

$ askgpt.py --help
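The CLI options can be mapped onto `argparse`, which also generates the `--help` output. In this sketch `-m` takes a model number, with 0 meaning "keep the current model" as the changelog suggests. The `MODELS` list and its order are hypothetical, since the real numbering is not documented here.

```python
import argparse

# Hypothetical numbering: the actual model list of askgpt.py may differ.
MODELS = ["text-davinci-003", "text-curie-001", "text-babbage-001",
          "text-ada-001", "text-davinci-002"]


def build_parser():
    parser = argparse.ArgumentParser(prog="askgpt.py", description="Query OpenAI models")
    parser.add_argument("-t", "--tokens", type=int, help="maximum number of tokens")
    parser.add_argument("-r", "--temperature", type=float, help="sampling temperature")
    parser.add_argument("-m", "--model", type=int, default=0,
                        help="model number (0 keeps the current model)")
    parser.add_argument("--stdin", action="store_true",
                        help="append standard input to the query")
    parser.add_argument("words", nargs="*",
                        help="a command (model, tokens, info, history) or a query")
    return parser


def select_model(current, number):
    """Return the model for a 1-based number; 0 keeps the current model."""
    if number == 0:
        return current
    if not 1 <= number <= len(MODELS):
        raise ValueError(f"model number must be between 0 and {len(MODELS)}")
    return MODELS[number - 1]
```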

A log of conversations is saved in the file ~/askgptlog.json
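The layout of the log file is not documented. One plausible layout, assumed here, is a JSON list with one record per exchange; the `append_log` helper and the record fields are hypothetical.

```python
import json
import time
from pathlib import Path

LOG_PATH = Path.home() / "askgptlog.json"


def append_log(prompt, answer, model, path=LOG_PATH):
    """Add one exchange to a JSON list stored in `path` (record layout assumed)."""
    path = Path(path)
    entries = json.loads(path.read_text()) if path.exists() else []
    entries.append({
        "time": time.strftime("%Y-%m-%d %H:%M:%S"),
        "model": model,
        "prompt": prompt,
        "answer": answer,
    })
    path.write_text(json.dumps(entries, indent=2))  # rewrite the whole list
    return entries
```

Rewriting the whole list keeps the file valid JSON but costs more as the log grows; a JSON Lines file (one object per line) would allow true appends.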

Configuration is stored in ~/.config/askgpt/config.cfg
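The configuration file can be handled with `configparser`. The section name `askgpt`, the key names and the default values below are assumptions, not the actual contents of config.cfg.

```python
import configparser
from pathlib import Path

CONFIG_PATH = Path.home() / ".config" / "askgpt" / "config.cfg"
# Assumed keys and defaults (configparser stores values as strings).
DEFAULTS = {"api_key": "", "model": "text-curie-001", "tokens": "256", "temperature": "0.6"}


def load_config(path=CONFIG_PATH):
    """Return the settings section, with defaults for missing keys."""
    config = configparser.ConfigParser()
    config["askgpt"] = DEFAULTS  # defaults first...
    config.read(path)            # ...then the file overrides them; a missing file is ignored
    return config["askgpt"]


def save_config(settings, path=CONFIG_PATH):
    """Write the settings, creating ~/.config/askgpt if needed."""
    path = Path(path)
    path.parent.mkdir(parents=True, exist_ok=True)
    config = configparser.ConfigParser()
    config["askgpt"] = dict(settings)
    with path.open("w") as f:
        config.write(f)
```

Typed values are read back with the section's helpers, e.g. `load_config().getint("tokens")` or `getfloat("temperature")`.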

- In Termux, add askgpt.py to the ~/.shortcuts directory to launch it from the home screen widget (termux-widget)

- To send a file's content along with a query, use:

$ askgpt.py -m 5 -t 500 -r 0.5 "Find why I got error messages in following script: " $(cat myscript.lua)

or

$ cat myscript.lua | askgpt.py --model 5 --tokens 500 --temperature 0.5 --stdin "Find why I got error messages in following script: "

- Use bat to output the response with color and line numbers:

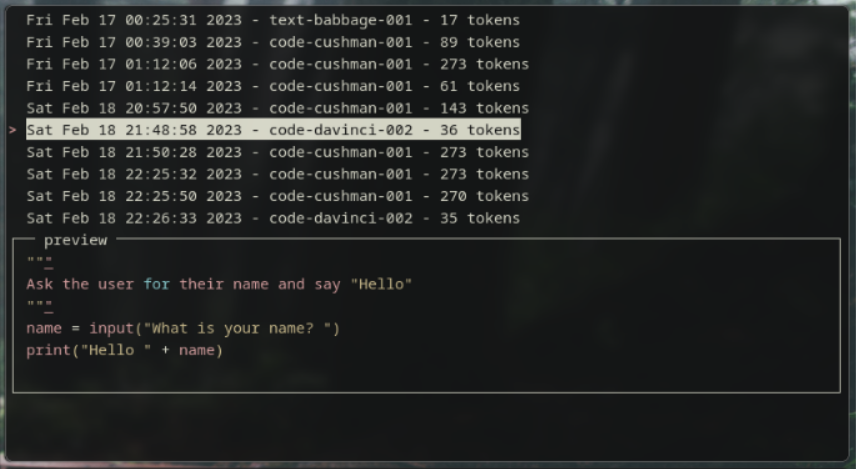

$ askgpt.py -m 5 -t 122 -r 0.2 '"""\nAsk the user for his name and say "Hello"\n"""' | bat -l python
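With `--stdin`, the piped input has to be joined to the prompt given on the command line. Below is a sketch with a hypothetical `build_query` helper, assuming the two parts are separated by a newline.

```python
import sys


def build_query(words, use_stdin=False, stream=None):
    """Join the command-line words and, if requested, append piped input."""
    query = " ".join(words)
    if use_stdin:
        stream = stream if stream is not None else sys.stdin
        query = f"{query}\n{stream.read()}"  # assumption: newline separator
    return query
```

Note that in the `$(cat myscript.lua)` form the unquoted substitution is word-split by the shell, so the script arrives as many separate arguments and its line breaks are lost; the `--stdin` form keeps them.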

Requirements:

- Python 3

Required modules:

- json

- configparser

- requests

- cmd

- argparse

- sys

- time

Optional modules:

- gnureadline

- termcolor

- simple_term_menu for history display

- pygments for code colorization
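Optional modules are typically imported with a fallback so the script still runs without them. A sketch of that pattern for termcolor and gnureadline follows; the plain-text `colored` fallback is an assumption about how askgpt.py degrades.

```python
try:
    from termcolor import colored
except ImportError:
    # termcolor not installed: return the text without color codes.
    def colored(text, *args, **kwargs):
        return text

try:
    # gnureadline provides GNU readline where Python's readline uses libedit (e.g. macOS).
    import gnureadline as readline
except ImportError:
    try:
        import readline
    except ImportError:
        readline = None  # no line editing or history (e.g. plain Windows)
```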

Version history (newest first):

Output colorized code version

- Output responses are colorized with the pygments module, both in queries and in history

- Code cleaning
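Colorizing code with pygments comes down to one `highlight` call. This sketch assumes the response is Python source; `colorize` is a hypothetical helper that returns the text unchanged when pygments is missing.

```python
try:
    from pygments import highlight
    from pygments.formatters import TerminalFormatter
    from pygments.lexers import PythonLexer
except ImportError:
    highlight = None  # pygments is optional


def colorize(code):
    """Add ANSI colors to Python source; return it unchanged without pygments."""
    if highlight is None:
        return code
    return highlight(code, PythonLexer(), TerminalFormatter())
```

`pygments.lexers.guess_lexer` can instead pick a lexer from the text itself, at the cost of occasional wrong guesses.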

History display version

- Previous queries and responses can be recalled

CLI with options

- Option to repeat the prompt in the output

- Feedback added for commands

- Colors in help

CLI with options

- Option to add stdin content to the query before sending the request

- Bug fix

CLI with options

- Options to change temperature, max_tokens and model directly on the command line before sending a request

- Bug fix (model 0 is not a change)

- Insertion/edit modes are not implemented yet

Colorful GPT shell or CLI

- Shell-like prompt (with recallable commands and queries)

- A touch of color (optional, with termcolor)

- Initial CLI for commands and queries

- Can't recall commands/queries across sessions

- Can't autocomplete models (because of dashes)

- GPT responses are not colorized yet

Initial commit

- Configuration is OK

- Requests can be sent

- Can't recall last command/query

- Can't use the arrow keys to move back through the query text and edit the prompt