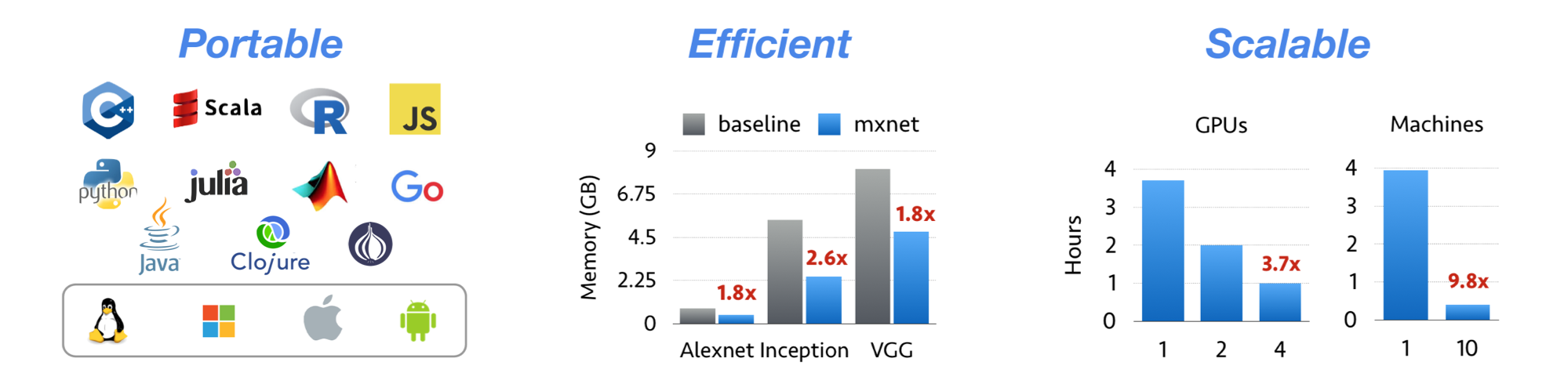

MXNet is a deep learning framework designed for both efficiency and flexibility. It allows you to mix symbolic and imperative programming to maximize efficiency and productivity. At its core, MXNet contains a dynamic dependency scheduler that automatically parallelizes both symbolic and imperative operations on the fly. A graph optimization layer on top of that makes symbolic execution fast and memory efficient. MXNet is portable and lightweight, scaling effectively to multiple GPUs and multiple machines.
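To make the distinction between imperative and symbolic programming concrete, here is a minimal toy sketch in plain Python. It does not use MXNet's API: the `Sym` class and the `var` helper are hypothetical names invented for this illustration. The imperative version computes each value as soon as its statement runs, while the symbolic version first declares a graph and only evaluates it once inputs are bound.

```python
# Toy illustration only: Sym and var are hypothetical and are NOT MXNet's API.

class Sym:
    """A node in a deferred (symbolic) computation graph."""

    def __init__(self, op, inputs=(), name=None):
        self.op = op          # "var", "add", or "mul"
        self.inputs = inputs  # child nodes for "add" and "mul"
        self.name = name      # placeholder name for "var" nodes

    def __add__(self, other):
        # Building a node does no arithmetic; it only records the operation.
        return Sym("add", (self, other))

    def __mul__(self, other):
        return Sym("mul", (self, other))

    def eval(self, **bindings):
        """Evaluate the graph once concrete values are bound to the variables."""
        if self.op == "var":
            return bindings[self.name]
        left, right = (node.eval(**bindings) for node in self.inputs)
        return left + right if self.op == "add" else left * right


def var(name):
    """Create a named placeholder node (hypothetical helper)."""
    return Sym("var", name=name)


# Imperative style: every statement executes immediately.
a = 2.0
b = 3.0
imperative_result = (a + b) * b

# Symbolic style: declare the whole graph first, then run it with bound inputs.
x, y = var("x"), var("y")
graph = (x + y) * y
symbolic_result = graph.eval(x=2.0, y=3.0)
```

Because the symbolic version holds the whole expression before any computation happens, a framework has the chance to optimize the graph (for example, by reusing memory) before running it, whereas the imperative version is easier to step through and debug. This toy code performs no such optimization.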

MXNet is more than a deep learning project. It is also a collection of blueprints and guidelines for building deep learning systems, with interesting insights into DL systems for hackers.

- Version 0.10.0 Release

- Version 0.9.3 Release - First 0.9 official release.

- Version 0.9.1 Release (NNVM refactor) - The NNVM branch is now merged into master. An official release will be made soon.

- Version 0.8.0 Release

- Updated Image Classification with new Pre-trained Models

- Python Notebooks for How to Use MXNet

- MKLDNN for Faster CPU Performance

- MXNet Memory Monger, Training Deeper Nets with Sublinear Memory Cost

- Tutorial for NVIDIA GTC 2016

- Embedding Torch layers and functions in MXNet

- MXNet.js: JavaScript Package for Deep Learning in Browser (without server)

- Design Note: Design Efficient Deep Learning Data Loading Module

- MXNet on Mobile Device

- Distributed Training

- Guide to Creating New Operators (Layers)

- Go binding for inference

- Amalgamation and Go Binding for Predictors - Outdated

- Training Deep Net on 14 Million Images on A Single Machine

- Documentation and Tutorials

- Design Notes

- Code Examples

- Installation

- Pretrained Models

- Contribute to MXNet

- Frequently Asked Questions

- Design notes providing useful insights that can be reused by other DL projects

- Flexible configuration for arbitrary computation graphs

- Mix and match imperative and symbolic programming to maximize flexibility and efficiency

- Lightweight, memory-efficient, and portable to smart devices

- Scales up to multiple GPUs and distributed settings with automatic parallelism

- Support for Python, R, Scala, C++ and Julia

- Cloud-friendly and directly compatible with S3, HDFS, and Azure

- Please use mxnet/issues to ask how to use MXNet and to report bugs

© Contributors, 2015-2017. Licensed under an Apache-2.0 license.

Tianqi Chen, Mu Li, Yutian Li, Min Lin, Naiyan Wang, Minjie Wang, Tianjun Xiao, Bing Xu, Chiyuan Zhang, and Zheng Zhang. MXNet: A Flexible and Efficient Machine Learning Library for Heterogeneous Distributed Systems. In Neural Information Processing Systems, Workshop on Machine Learning Systems, 2015.

MXNet emerged from a collaboration among the authors of cxxnet, minerva, and purine2. The project reflects what we have learned from those past projects, and it combines aspects of each to achieve flexibility, speed, and memory efficiency.