forked from langchain-ai/langchain

merge #7


Merged


Let user inspect the token ids in addition to getting the number of tokens --------- Co-authored-by: Zach Schillaci <[email protected]>

Update to pull request #3215 Summary: 1) Improved the sanitization of the query (using regex) by removing the python command, since gpt-3.5-turbo sometimes treats the Python console as a terminal and runs the python command first, which causes an error. Also, one-line Python code sometimes contains single backticks. 2) Added 7 new test cases. For more details, view the previous pull request. --------- Co-authored-by: Deepak S V <[email protected]>

Co-authored-by: Tomaz Bratanic <[email protected]> Co-authored-by: Dev 2049 <[email protected]>

…instead (#5015) tldr: The docarray [integration PR](#4483) introduced a pinned dependency on protobuf. This is a docarray dependency, not a langchain dependency. Since it is handled by the docarray dependencies, it is unnecessary here. Further, as a pinned dependency, it quickly leads to incompatibilities with application code that consumes the library, all the more so with a heavily used library like protobuf. Detail: as we see in the [docarray integration](https://github.com/hwchase17/langchain/pull/4483/files#diff-50c86b7ed8ac2cf95bd48334961bf0530cdc77b5a56f852c5c61b89d735fd711R81-R83), the transitive dependencies of docarray were also listed as langchain dependencies. This is unnecessary as the docarray project has an appropriate [extras](https://github.com/docarray/docarray/blob/a01a05542d17264b8a164bec783633658deeedb8/pyproject.toml#L70). The docarray project also does not require this _pinned_ version of protobuf, but rather [a minimum version](https://github.com/docarray/docarray/blob/a01a05542d17264b8a164bec783633658deeedb8/pyproject.toml#L41). So this pinned version was likely an error. To fix this, this PR reverts the explicit hnswlib and protobuf dependencies and adds the hnswlib extras install for docarray (which installs hnswlib and protobuf, as originally intended). Because version `0.32.0` of the docarray hnswlib extras added protobuf, we bump the docarray dependency from `^0.31.0` to `^0.32.0`. # revert docarray explicit transitive dependencies and use extras instead ## Who can review? @dev2049 -- reviewed the original PR @eyurtsev -- bumped the pinned protobuf dependency a few days ago --------- Co-authored-by: Dev 2049 <[email protected]>

This is a highly optimized update to the pull request #3269 Summary: 1) Added ability to MRKL agent to self solve the ValueError(f"Could not parse LLM output: `{llm_output}`") error, whenever llm (especially gpt-3.5-turbo) does not follow the format of MRKL Agent, while returning "Action:" & "Action Input:". 2) The way I am solving this error is by responding back to the llm with the messages "Invalid Format: Missing 'Action:' after 'Thought:'" & "Invalid Format: Missing 'Action Input:' after 'Action:'" whenever Action: and Action Input: are not present in the llm output respectively. For a detailed explanation, look at the previous pull request. New Updates: 1) Since @hwchase17 , requested in the previous PR to communicate the self correction (error) message, using the OutputParserException, I have added new ability to the OutputParserException class to store the observation & previous llm_output in order to communicate it to the next Agent's prompt. This is done, without breaking/modifying any of the functionality OutputParserException previously performs (i.e. OutputParserException can be used in the same way as before, without passing any observation & previous llm_output too). --------- Co-authored-by: Deepak S V <[email protected]>
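The self-correction idea described above can be sketched as follows. This is a minimal illustration, not the code from the pull request: `ParseError` is a hypothetical stand-in for `OutputParserException` carrying the extra `observation` and `llm_output` fields, and `parse_action` and `observation_for` are assumed helper names.

```python
import re
from typing import Optional, Tuple


class ParseError(ValueError):
    """Hypothetical stand-in for OutputParserException with the two extra fields."""

    def __init__(
        self,
        message: str,
        observation: Optional[str] = None,
        llm_output: Optional[str] = None,
    ) -> None:
        super().__init__(message)
        self.observation = observation  # corrective message for the next prompt
        self.llm_output = llm_output    # the raw text that failed to parse


def parse_action(text: str) -> Tuple[str, str]:
    """Return (action, action_input), or raise ParseError with a corrective observation."""
    action = re.search(r"^Action\s*:\s*(.*)$", text, re.MULTILINE)
    if action is None:
        raise ParseError(
            f"Could not parse LLM output: `{text}`",
            observation="Invalid Format: Missing 'Action:' after 'Thought:'",
            llm_output=text,
        )
    action_input = re.search(r"^Action Input\s*:\s*(.*)$", text, re.MULTILINE)
    if action_input is None:
        raise ParseError(
            f"Could not parse LLM output: `{text}`",
            observation="Invalid Format: Missing 'Action Input:' after 'Action:'",
            llm_output=text,
        )
    return action.group(1).strip(), action_input.group(1).strip()


def observation_for(text: str) -> Optional[str]:
    """Return the message to send back to the LLM, or None if parsing succeeded."""
    try:
        parse_action(text)
    except ParseError as err:
        return err.observation
    return None
```

Because both extra fields default to `None`, callers that construct the exception with only a message keep working, which mirrors the backward-compatibility point made in the description.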

) # Improve pinecone hybrid search retriever adding metadata support I simply remove the hardwiring of metadata in the existing implementation, allowing one to pass a `metadatas` attribute to the constructors and to `get_relevant_documents`. I also add one missing pip install to the accompanying notebook (I am not adding dependencies, they were pre-existing). First contribution, just hoping to help, feel free to critique :) my twitter username is `@andreliebschner` While looking at hybrid search I noticed #3043 and #1743. I think the former can be closed, as following the example right now (even prior to my improvements) works just fine; the latter can also be closed safely, I think, maybe after pointing out the relevant classes and example. Should I reply to those issues and mention someone? @dev2049, @hwchase17 --------- Co-authored-by: Andreas Liebschner <[email protected]>

… authentication (#5058) Enhance the code to support SSL authentication for Elasticsearch when using the VectorStore module, as previous versions did not provide this capability. @dev2049 --------- Co-authored-by: caidong <[email protected]> Co-authored-by: Dev 2049 <[email protected]>

…ex (#5059) # Compute row-wise cosine similarity between two equal-width matrices and return the top_k highest scores and their indices, keeping only scores greater than threshold_score. Co-authored-by: Dev 2049 <[email protected]>
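The computation described above might look like the following pure-Python sketch. The function names `cosine_similarity` and `top_k_similar` and the parameter names are assumptions made for illustration; the actual helper in the pull request may differ in name, signature, and tie-breaking.

```python
import math
from typing import List, Optional, Sequence, Tuple

Matrix = Sequence[Sequence[float]]


def cosine_similarity(x: Matrix, y: Matrix) -> List[List[float]]:
    """Row-wise cosine similarity; result[i][j] compares x[i] with y[j]."""
    if x and y and len(x[0]) != len(y[0]):
        raise ValueError("x and y must have the same number of columns")

    def norm(row: Sequence[float]) -> float:
        return math.sqrt(sum(v * v for v in row))

    result = []
    for xi in x:
        row = []
        for yj in y:
            denom = norm(xi) * norm(yj)
            dot = sum(a * b for a, b in zip(xi, yj))
            # Treat similarity with a zero vector as 0.0 instead of dividing by zero.
            row.append(dot / denom if denom else 0.0)
        result.append(row)
    return result


def top_k_similar(
    x: Matrix,
    y: Matrix,
    top_k: int = 5,
    score_threshold: Optional[float] = None,
) -> Tuple[List[Tuple[int, int]], List[float]]:
    """Return the (row, column) indices and scores of the top_k best pairs."""
    scores = cosine_similarity(x, y)
    candidates = [
        ((i, j), s)
        for i, row in enumerate(scores)
        for j, s in enumerate(row)
        if score_threshold is None or s > score_threshold
    ]
    # Highest score first; the sort is stable, so ties keep row-major order.
    candidates.sort(key=lambda item: item[1], reverse=True)
    best = candidates[:top_k]
    return [idx for idx, _ in best], [s for _, s in best]
```

A vectorized version with NumPy would be the natural choice for large matrices; the loop form is used here only to keep the sketch dependency-free.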

…5090) # PowerBI major refinement in working of tool and tweaks in the rest I've gained some experience with more complex datasets, and the earlier implementation needed too many tries by the agent to create DAX, so I refactored the code to run the LLM to create DAX based on a question and then immediately run it against the dataset, with retries and a prompt that includes the error for the retry. This works much better! Also did some other refactoring of the inner workings, making things clearer, more concise and faster.

#4933) # fix a bug in the add_texts method of Weaviate vector store that creates wrong embeddings

The following is the original code in the `add_texts` method of the Weaviate vector store, from line 131 to 153, which contains a bug. The code here includes some extra explanations in the form of comments and some omissions.

```python
for i, doc in enumerate(texts):
    # some code omitted

    if self._embedding is not None:
        # variable texts is a list of string and doc here is just a string.
        # list(doc) actually breaks up the string into characters.
        # so, embeddings[0] is just the embedding of the first character
        embeddings = self._embedding.embed_documents(list(doc))
        batch.add_data_object(
            data_object=data_properties,
            class_name=self._index_name,
            uuid=_id,
            vector=embeddings[0],
        )
```

To fix this bug, I pulled the embedding operation out of the for loop and embedded all texts at once.

Co-authored-by: Shawn91 <[email protected]> Co-authored-by: Dev 2049 <[email protected]>
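To make the bug concrete, here is a small self-contained sketch. `LengthEmbedding` is a hypothetical toy embedder (it is not part of LangChain or Weaviate) whose "embedding" is just the string length, so the effect of `list(doc)` is easy to see.

```python
from typing import List


class LengthEmbedding:
    """Hypothetical toy embedder: one single-value vector per input string."""

    def embed_documents(self, texts: List[str]) -> List[List[float]]:
        return [[float(len(t))] for t in texts]


texts = ["hello", "hi"]
embedder = LengthEmbedding()

# Buggy pattern: list("hello") is ['h', 'e', 'l', 'l', 'o'], so the vector
# stored for each document is the embedding of its first character only.
buggy = [embedder.embed_documents(list(doc))[0] for doc in texts]

# Fixed pattern: embed the whole list once, outside the loop, then index by position.
fixed = embedder.embed_documents(texts)
```

With this toy embedder, `buggy` holds the same length-1 vector for every document, while `fixed` reflects each full string.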

…5005) # Currently, only the dev images are updated

#5101) `from langchain.experimental.autonomous_agents.autogpt.agent import AutoGPT` results in an import error, as AutoGPT is not defined in the __init__.py file https://python.langchain.com/en/latest/use_cases/autonomous_agents/marathon_times.html An alternative would be to update the import statement directly to `from langchain.experimental import AutoGPT` Co-authored-by: Dev 2049 <[email protected]>

Added link option in _process_response. In _process_response, the snippet reply provided non-working links in cases where the link field held the correct answer, so an elif statement for the link was added before the snippet branch. @vowelparrot --------- Co-authored-by: Dev 2049 <[email protected]>

# changed ValueError to ImportError Code cleaning. Fixed inconsistencies in ImportError handling: sometimes the code raised ImportError and sometimes ValueError. I've changed all cases to `raise ImportError`. Also: - added installation instructions to the error messages where they were missing; - fixed several installation instructions in the error messages; - fixed several cases of error handling related to ImportError
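The convention described above can be sketched as a small helper. `import_optional` is a hypothetical name introduced here for illustration; the pull request itself edits individual `try`/`except` blocks rather than adding such a function.

```python
import importlib
from types import ModuleType


def import_optional(module_name: str, pip_name: str) -> ModuleType:
    """Import an optional dependency, re-raising ImportError with install instructions."""
    try:
        return importlib.import_module(module_name)
    except ImportError as exc:
        # Keep the exception type as ImportError (not ValueError) so callers can
        # catch missing dependencies uniformly, and tell the user how to fix it.
        raise ImportError(
            f"Could not import {module_name} python package. "
            f"Please install it with `pip install {pip_name}`."
        ) from exc
```

Chaining with `from exc` preserves the original traceback, which helps when the import fails for a reason other than the package being absent.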

…5045) # Assign `current_time` to `datetime.now()` if `current_time is None` in `time_weighted_retriever`

Fixes #4825

As implemented, `add_documents` in `TimeWeightedVectorStoreRetriever` assigns `doc.metadata["last_accessed_at"]` and `doc.metadata["created_at"]` to `datetime.datetime.now()` if `current_time` is not in `kwargs`.

```python
def add_documents(self, documents: List[Document], **kwargs: Any) -> List[str]:
    """Add documents to vectorstore."""
    current_time = kwargs.get("current_time", datetime.datetime.now())
    # Avoid mutating input documents
    dup_docs = [deepcopy(d) for d in documents]
    for i, doc in enumerate(dup_docs):
        if "last_accessed_at" not in doc.metadata:
            doc.metadata["last_accessed_at"] = current_time
        if "created_at" not in doc.metadata:
            doc.metadata["created_at"] = current_time
        doc.metadata["buffer_idx"] = len(self.memory_stream) + i
    self.memory_stream.extend(dup_docs)
    return self.vectorstore.add_documents(dup_docs, **kwargs)
```

However, from the way `add_documents` is being called from `GenerativeAgentMemory`, `current_time` is set as a `kwarg`, but it is given a value of `None`:

```python
def add_memory(
    self, memory_content: str, now: Optional[datetime] = None
) -> List[str]:
    """Add an observation or memory to the agent's memory."""
    importance_score = self._score_memory_importance(memory_content)
    self.aggregate_importance += importance_score
    document = Document(
        page_content=memory_content, metadata={"importance": importance_score}
    )
    result = self.memory_retriever.add_documents([document], current_time=now)
```

The default of `now` was set in #4658 to be None. The proposed fix is the following:

```python
def add_documents(self, documents: List[Document], **kwargs: Any) -> List[str]:
    """Add documents to vectorstore."""
    current_time = kwargs.get("current_time", datetime.datetime.now())
    # `current_time` may exist in kwargs, but may still have the value of None.
    if current_time is None:
        current_time = datetime.datetime.now()
```

Alternatively, we could just set the default of `now` to be `datetime.datetime.now()` everywhere instead. Thoughts @hwchase17? If we still want to keep the default to be `None`, then this PR should fix the above issue. If we want to set the default to be `datetime.datetime.now()` instead, I can update this PR with that alternative fix.

EDIT: from #5018 it looks like we would prefer to keep the default to be `None`, in which case this PR should fix the error.
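The root cause is that `dict.get` only falls back to its default when the key is absent, not when the key is present with a value of `None`. A minimal standalone sketch of the fix, using a hypothetical helper name `resolve_current_time` that does not exist in LangChain:

```python
import datetime
from typing import Any


def resolve_current_time(**kwargs: Any) -> datetime.datetime:
    """Return kwargs['current_time'], falling back to now() if missing or None."""
    current_time = kwargs.get("current_time", datetime.datetime.now())
    # The key may be present with an explicit None, which .get() returns as-is.
    if current_time is None:
        current_time = datetime.datetime.now()
    return current_time


# The default passed to .get() is ignored because the key exists.
explicit_none = {"current_time": None}.get("current_time", "fallback")
```

Here `explicit_none` is `None`, not `"fallback"`, which is exactly the situation `add_memory` creates when it passes `current_time=now` with `now` left at its default.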

# Add Mastodon toots loader. Loader works either with public toots, or Mastodon app credentials. Toot text and user info is loaded. I've also added integration test for this new loader as it works with public data, and a notebook with example output run now. --------- Co-authored-by: Dev 2049 <[email protected]>

OpenLM is a zero-dependency OpenAI-compatible LLM provider that can call different inference endpoints directly via HTTP. It implements the OpenAI Completion class so that it can be used as a drop-in replacement for the OpenAI API. This changeset utilizes BaseOpenAI for minimal added code. --------- Co-authored-by: Dev 2049 <[email protected]>

Pass dataset name by name

Implementation is similar to search_distance and where_filter # adds 'additional' support to Weaviate queries Co-authored-by: Dev 2049 <[email protected]>

# Improve TextSplitter.split_documents, collect page_content and metadata in one iteration ## Who can review? Community members can review the PR once tests pass. Tag maintainers/contributors who might be interested: @eyurtsev In the case where documents is a generator that can only be iterated once making this change is a huge help. Otherwise a silent issue happens where metadata is empty for all documents when documents is a generator. So we expand the argument from `List[Document]` to `Union[Iterable[Document], Sequence[Document]]` --------- Co-authored-by: Steven Tartakovsky <[email protected]>
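The silent failure described above is easy to reproduce outside LangChain. In this sketch, plain dicts stand in for `Document` objects, and `collect_two_passes`, `collect_one_pass`, and `doc_stream` are hypothetical names chosen for illustration.

```python
from typing import Any, Dict, Iterable, Iterator, List, Tuple

Doc = Dict[str, Any]  # stand-in for Document: {"page_content": ..., "metadata": ...}


def collect_two_passes(docs: Iterable[Doc]) -> Tuple[List[str], List[dict]]:
    """Old pattern: iterate twice. A generator is exhausted after the first pass."""
    texts = [d["page_content"] for d in docs]
    metadatas = [d["metadata"] for d in docs]  # silently empty for a generator
    return texts, metadatas


def collect_one_pass(docs: Iterable[Doc]) -> Tuple[List[str], List[dict]]:
    """New pattern: gather both fields in a single iteration."""
    texts, metadatas = [], []
    for d in docs:
        texts.append(d["page_content"])
        metadatas.append(d["metadata"])
    return texts, metadatas


def doc_stream() -> Iterator[Doc]:
    """A one-shot source of documents."""
    yield {"page_content": "first", "metadata": {"page": 1}}
    yield {"page_content": "second", "metadata": {"page": 2}}
```

Passing `doc_stream()` to the two-pass version yields both texts but an empty metadata list, with no exception raised; the one-pass version returns both lists fully populated.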

# Add a WhyLabs callback handler * Adds a simple WhyLabsCallbackHandler * Add required dependencies as optional * protect against missing modules with imports * Add docs/ecosystem basic example based on initial prototype from @andrewelizondo > this integration gathers privacy preserving telemetry on text with whylogs and sends statistical profiles to the WhyLabs platform to monitor these metrics over time. For more information on what WhyLabs is see: https://whylabs.ai After you run the notebook (if you have env variables set for the API Keys, org_id and dataset_id) you get something like this in WhyLabs:  Co-authored-by: Andre Elizondo <[email protected]> Co-authored-by: Dev 2049 <[email protected]>

#5012) # Add AzureCognitiveServicesToolkit to call Azure Cognitive Services API: achieve some multimodal capabilities This PR adds a toolkit named AzureCognitiveServicesToolkit which bundles the following tools: - AzureCogsImageAnalysisTool: calls Azure Cognitive Services image analysis API to extract caption, objects, tags, and text from images. - AzureCogsFormRecognizerTool: calls Azure Cognitive Services form recognizer API to extract text, tables, and key-value pairs from documents. - AzureCogsSpeech2TextTool: calls Azure Cognitive Services speech to text API to transcribe speech to text. - AzureCogsText2SpeechTool: calls Azure Cognitive Services text to speech API to synthesize text to speech. This toolkit can be used to process image, document, and audio inputs. --------- Co-authored-by: Dev 2049 <[email protected]>

# Add link to Psychic from document loaders documentation page In my previous PR I forgot to update `document_loaders.rst` to link to `psychic.ipynb` to make it discoverable from the main documentation.

…an_input_llm.ipynb (#5118) # Fix typo + add wikipedia package installation part in human_input_llm.ipynb This PR 1. Fixes typo ("the the human input LLM"), 2. Adds wikipedia package installation part (in accordance with `WikipediaQueryRun` [documentation](https://python.langchain.com/en/latest/modules/agents/tools/examples/wikipedia.html)) in `human_input_llm.ipynb` (`docs/modules/models/llms/examples/human_input_llm.ipynb`)

Some LLMs will produce numbered lists with leading whitespace, e.g. in response to "What is the sum of 2 and 3?":

```
Plan:
  1. Add 2 and 3.
  2. Given the above steps taken, please respond to the users original question.
```

This commit updates the PlanningOutputParser regex to ignore leading whitespace before the step number, enabling it to correctly parse this format.
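A sketch of how such a regex change behaves is shown below. The two patterns and the helper `split_steps` are assumptions made for illustration and are not copied from the commit; they only demonstrate why allowing optional whitespace before the step number matters.

```python
import re
from typing import List

# Assumed "before" pattern: the step number must directly follow the newline.
STRICT_STEP = re.compile(r"\n\d+\. ")
# Assumed "after" pattern: optional whitespace is allowed before the step number.
LENIENT_STEP = re.compile(r"\n\s*\d+\. ")


def split_steps(text: str, pattern: "re.Pattern[str]") -> List[str]:
    """Split a plan into step strings, dropping the preamble before step 1."""
    return [part.strip() for part in pattern.split(text)[1:]]


plan = (
    "Plan:\n"
    "  1. Add 2 and 3.\n"
    "  2. Given the above steps taken, please respond to the users original question.\n"
)

strict_steps = split_steps(plan, STRICT_STEP)
lenient_steps = split_steps(plan, LENIENT_STEP)
```

On the indented plan, the strict pattern finds no step boundaries and returns an empty list, while the lenient pattern recovers both steps.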

…sticsearch models (#3401) This PR introduces a new module, `elasticsearch_embeddings.py`, which provides a wrapper around Elasticsearch embedding models. The new ElasticsearchEmbeddings class allows users to generate embeddings for documents and query texts using a [model deployed in an Elasticsearch cluster](https://www.elastic.co/guide/en/machine-learning/current/ml-nlp-model-ref.html#ml-nlp-model-ref-text-embedding). ### Main features: 1. The ElasticsearchEmbeddings class initializes with an Elasticsearch connection object and a model_id, providing an interface to interact with the Elasticsearch ML client through [infer_trained_model](https://elasticsearch-py.readthedocs.io/en/v8.7.0/api.html?highlight=trained%20model%20infer#elasticsearch.client.MlClient.infer_trained_model) . 2. The `embed_documents()` method generates embeddings for a list of documents, and the `embed_query()` method generates an embedding for a single query text. 3. The class supports custom input text field names in case the deployed model expects a different field name than the default `text_field`. 4. The implementation is compatible with any model deployed in Elasticsearch that generates embeddings as output. ### Benefits: 1. Simplifies the process of generating embeddings using Elasticsearch models. 2. Provides a clean and intuitive interface to interact with the Elasticsearch ML client. 3. Allows users to easily integrate Elasticsearch-generated embeddings. Related issue #3400 --------- Co-authored-by: Dev 2049 <[email protected]>

Co-authored-by: Tyler Hutcherson <[email protected]> Co-authored-by: Dev 2049 <[email protected]>

# Reuse `length_func` in `MapReduceDocumentsChain` Pretty straightforward refactor in `MapReduceDocumentsChain`. Reusing the local variable `length_func`, instead of the longer alternative `self.combine_document_chain.prompt_length`. @hwchase17

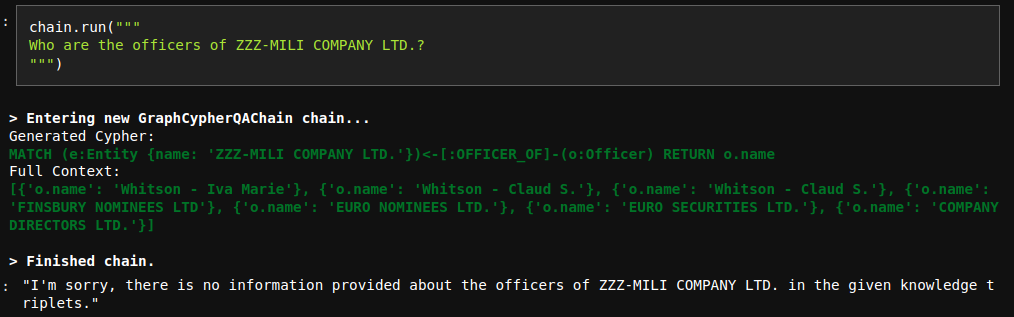

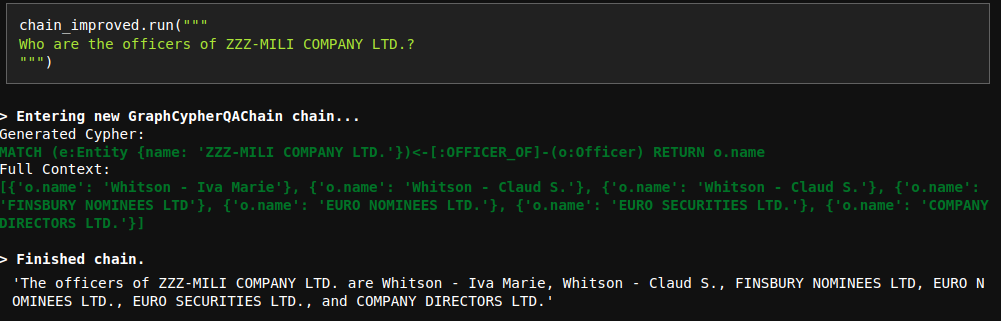

# Improve Cypher QA prompt The current QA prompt is optimized for networkX answer generation, which returns all the possible triples. However, Cypher search is a bit more focused and doesn't necessarily return all the context information. For that reason, the model sometimes refuses to generate an answer even though the information is provided:  To fix this issue, I have updated the prompt. Interestingly, I tried many variations with fewer instructions and they didn't work properly. However, the current fix works nicely.

# Improve weaviate vectorstore docs

Co-authored-by: vempaliakhil96 <[email protected]>

Co-authored-by: Dev 2049 <[email protected]>

# OpenAI finetuned model giving zero tokens cost Very simple fix to the previously committed solution for allowing finetuned OpenAI models. Improves #5127 --------- Co-authored-by: Dev 2049 <[email protected]>

`vectorstore.PGVector`: The transactional boundary should be increased to cover the query itself Currently, within `similarity_search_with_score_by_vector`, the transactional boundary (created via the `Session` call) does not include the select query being made. This can have unintended consequences when interacting with the PGVector instance methods directly --------- Co-authored-by: Dev 2049 <[email protected]>

# Output parsing variation allowance for self-ask with search This change makes self-ask with search easier for Llama models to follow, as they tend toward returning 'Followup:' instead of 'Follow up:' despite an otherwise valid remaining output. Co-authored-by: Dev 2049 <[email protected]>
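One way to tolerate both spellings is a single pattern with an optional space, as in the sketch below. The regex and the helper `extract_followup` are illustrative assumptions; the actual parser in the change may use a different mechanism, such as checking a list of accepted prefixes.

```python
import re
from typing import Optional

# Accept both "Follow up:" and "Followup:" at the start of a line.
FOLLOWUP_RE = re.compile(r"^Follow ?up:\s*(.*)$", re.MULTILINE)


def extract_followup(text: str) -> Optional[str]:
    """Return the last follow-up question in the output, or None if there is none."""
    matches = FOLLOWUP_RE.findall(text)
    return matches[-1].strip() if matches else None
```

Anchoring at the start of a line keeps phrases such as "Are follow up questions needed here:" from being mistaken for a follow-up line.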

## Description The html structure of readthedocs can differ. Currently, the html tag is hardcoded in the reader, and unable to fit into some cases. This pr includes the following changes: 1. Replace `find_all` with `find` because we just want one tag. 2. Provide `custom_html_tag` to the loader. 3. Add tests for readthedoc loader 4. Refactor code ## Issues See more in #2609. The problem was not completely fixed in that pr. --------- Signed-off-by: byhsu <[email protected]> Co-authored-by: byhsu <[email protected]> Co-authored-by: Dev 2049 <[email protected]>

Create IUGU loader --------- Co-authored-by: Dev 2049 <[email protected]>

# Add Joplin document loader [Joplin](https://joplinapp.org/) is an open source note-taking app. Joplin has a [REST API](https://joplinapp.org/api/references/rest_api/) for accessing its local database. The proposed `JoplinLoader` uses the API to retrieve all notes in the database and their metadata. Joplin needs to be installed and running locally, and an access token is required. - The PR includes an integration test. - The PR includes an example notebook. --------- Co-authored-by: Dev 2049 <[email protected]>

Changes debug log to warning log when LC Tracer fails to instantiate

Example:

```
$ langchain plus start --expose
...
$ langchain plus status
The LangChainPlus server is currently running.

Service             Status         Published Ports
langchain-backend   Up 40 seconds  1984
langchain-db        Up 41 seconds  5433
langchain-frontend  Up 40 seconds  80
ngrok               Up 41 seconds  4040

To connect, set the following environment variables in your LangChain application:
LANGCHAIN_TRACING_V2=true
LANGCHAIN_ENDPOINT=https://5cef-70-23-89-158.ngrok.io

$ langchain plus stop
$ langchain plus status
The LangChainPlus server is not running.
$ langchain plus start
The LangChainPlus server is currently running.

Service             Status        Published Ports
langchain-backend   Up 5 seconds  1984
langchain-db        Up 6 seconds  5433
langchain-frontend  Up 5 seconds  80

To connect, set the following environment variables in your LangChain application:
LANGCHAIN_TRACING_V2=true
LANGCHAIN_ENDPOINT=http://localhost:1984
```

Co-authored-by: Leonid Kuligin <[email protected]> Co-authored-by: Leonid Kuligin <[email protected]> Co-authored-by: sasha-gitg <[email protected]> Co-authored-by: Justin Flick <[email protected]> Co-authored-by: Justin Flick <[email protected]>

# fix a mistake in concepts.md

Copies `GraphIndexCreator.from_text()` to make an async version called `GraphIndexCreator.afrom_text()`. This is (should be) a trivial change: it just adds a copy of `GraphIndexCreator.from_text()` which is async and awaits a call to `chain.apredict()` instead of `chain.predict()`. There is no unit test for GraphIndexCreator, and I did not create one, but this code works for me locally. @agola11 @hwchase17
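The sync/async pairing described above follows a common pattern, sketched here with a hypothetical `EchoChain` that exposes `predict` and `apredict`. Nothing in this block is the real `GraphIndexCreator` or chain implementation; it only shows the shape of the change.

```python
import asyncio


class EchoChain:
    """Hypothetical chain exposing a sync and an async prediction method."""

    def predict(self, text: str) -> str:
        return text.upper()

    async def apredict(self, text: str) -> str:
        await asyncio.sleep(0)  # yield control, as a real network call would
        return text.upper()


def from_text(chain: EchoChain, text: str) -> str:
    """Synchronous version: blocks until the chain returns."""
    return chain.predict(text=text)


async def afrom_text(chain: EchoChain, text: str) -> str:
    """Async copy: identical logic, but awaits apredict() instead of calling predict()."""
    return await chain.apredict(text=text)
```

Outside an event loop, the async version can be driven with `asyncio.run(afrom_text(EchoChain(), "some text"))`; both versions should return the same result for the same input.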

I found an API key for `serpapi_api_key` while reading the docs. It seems to have been modified very recently. Removed it in this PR @hwchase17 - project lead

…issue #5104) (#5220) # Change Default GoogleDriveLoader Behavior to not Load Trashed Files (issue #5104) Fixes #5104 If the previous behavior of loading files that used to live in the folder but are now trashed is desired, you can use the `load_trashed_files` parameter:

```
loader = GoogleDriveLoader(
    folder_id="1yucgL9WGgWZdM1TOuKkeghlPizuzMYb5",
    recursive=False,
    load_trashed_files=True
)
```

As not loading trashed files should be expected behavior, should we 1. even provide the `load_trashed_files` parameter? 2. add documentation? It feels like most users will stick with the default behavior. ## Who can review? Community members can review the PR once tests pass. Tag maintainers/contributors who might be interested: DataLoaders - @eyurtsev Twitter: [@nicholasliu77](https://twitter.com/nicholasliu77)

# Allow to specify ID when adding to the FAISS vectorstore This change allows unique IDs to be specified when adding documents / embeddings to a faiss vectorstore. - This reflects the current approach with the chroma vectorstore. - It allows rejection of inserts on duplicate IDs - will allow deletion / update by searching on deterministic ID (such as a hash). - If not specified, a random UUID is generated (as per previous behaviour, so non-breaking). This commit fixes #5065 and #3896 and should fix #2699 indirectly. I've tested adding and merging. Kindly tagging @Xmaster6y @dev2049 for review. --------- Co-authored-by: Ati Sharma <[email protected]> Co-authored-by: Harrison Chase <[email protected]>
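The ID semantics listed above can be illustrated with a toy in-memory store. `TinyStore` and `content_id` are hypothetical names and this is not the FAISS vectorstore API; the sketch only shows caller-supplied IDs, rejection of duplicates, and the random-UUID fallback.

```python
import hashlib
import uuid
from typing import Dict, List, Optional, Sequence


def content_id(text: str) -> str:
    """Deterministic ID derived from the text, e.g. for later update or deletion."""
    return hashlib.sha256(text.encode("utf-8")).hexdigest()


class TinyStore:
    """Toy stand-in for a vectorstore that tracks documents by ID."""

    def __init__(self) -> None:
        self._docs: Dict[str, str] = {}

    def add_texts(
        self, texts: Sequence[str], ids: Optional[Sequence[str]] = None
    ) -> List[str]:
        if ids is None:
            # Previous behaviour: a random UUID per document.
            ids = [str(uuid.uuid4()) for _ in texts]
        if len(ids) != len(texts):
            raise ValueError("ids and texts must have the same length")
        if len(set(ids)) != len(ids) or any(i in self._docs for i in ids):
            raise ValueError("Tried to add ids that already exist")
        for doc_id, text in zip(ids, texts):
            self._docs[doc_id] = text
        return list(ids)

    def get(self, doc_id: str) -> Optional[str]:
        return self._docs.get(doc_id)
```

Using a content hash as the ID means re-adding the same text is rejected as a duplicate, and the document can later be looked up without storing the ID elsewhere.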

# Bibtex integration Wrap bibtexparser to retrieve a list of docs from a bibtex file. * Get the metadata from the bibtex entries * `page_content` get from the local pdf referenced in the `file` field of the bibtex entry using `pymupdf` * If no valid pdf file, `page_content` set to the `abstract` field of the bibtex entry * Support Zotero flavour using regex to get the file path * Added usage example in `docs/modules/indexes/document_loaders/examples/bibtex.ipynb` --------- Co-authored-by: Sébastien M. Popoff <[email protected]> Co-authored-by: Dev 2049 <[email protected]>

- Add support for MiniMax embeddings Doc: [MiniMax embeddings](https://api.minimax.chat/document/guides/embeddings?id=6464722084cdc277dfaa966a) --------- Co-authored-by: Archon <[email protected]> Co-authored-by: Dev 2049 <[email protected]>

# Add QnA with sources example Fixes: see https://stackoverflow.com/questions/76207160/langchain-doesnt-work-with-weaviate-vector-database-getting-valueerror/76210017#76210017 @dev2049

#5232) remove extra "\n" to ensure that the format of the description, example, and prompt&generation are completely consistent.

# Resolve error in StructuredOutputParser docs

Documentation for `StructuredOutputParser` is currently not reproducible; that is, `output_parser.parse(output)` raises an error because the LLM returns a response with an invalid format:

```python
_input = prompt.format_prompt(question="what's the capital of france")
output = model(_input.to_string())
output

# ?
#
# ```json
# {
#     "answer": "Paris",
#     "source": "https://www.worldatlas.com/articles/what-is-the-capital-of-france.html"
# }
# ```
```

This was fixed by adding a question mark to the prompt.
